In my previous post, I looked at how the BizTalk Server 2013 beta supports the receipt of messages through REST endpoints. In this post, I’ll show off a couple of scenarios for sending BizTalk messages to REST service endpoints. Even though the BizTalk adapter is based on the WCF REST binding, all my demonstrations are with non-WCF services (just to prove everything works the same).

Scenario #1: Consuming a “GET” Service From an Orchestration

In this first case, I planned on invoking a “GET” operation and processing the response in an orchestration. Specifically, I wanted to receive an invoice in one currency, and use a RESTful currency conversion service to flip the currency to US dollars. There are two key quirks to this adapter that you should be aware of:

- Consumed REST services cannot have an “&” symbol in the URL. This meant that I had to find a currency conversion service that did NOT use ampersands. You’d think that this would be easy, but many services use a syntax like “/currency?from=AUD&to=USD”, and the adapter doesn’t like that one bit. While “?” seems acceptable, ampersands most definitely are not.

- The adapter throws an error on GET. Neither GET nor DELETE requests expect a message payload (as they are entirely URL driven), and the adapter throws an error if a GET request carries a message body. This is a problem because you can’t natively send an empty message to an adapter endpoint. Below, I’ll show you one way to get around this. However, I consider this an unacceptable flaw that deserves to be fixed before BizTalk Server 2013 is released.

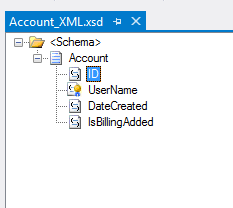

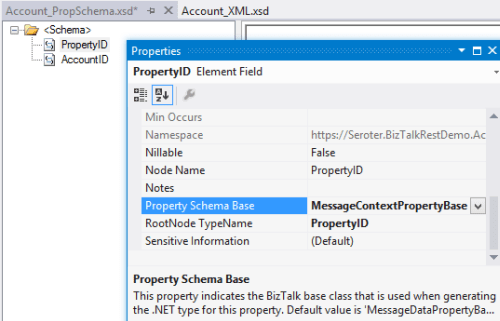

For this demonstration, I used the adapter-friendly currency conversion service at Exchange Rate API. To get started, I created a new schema for “Invoice” and a property schema that held the values that needed to be passed to the send adapter.

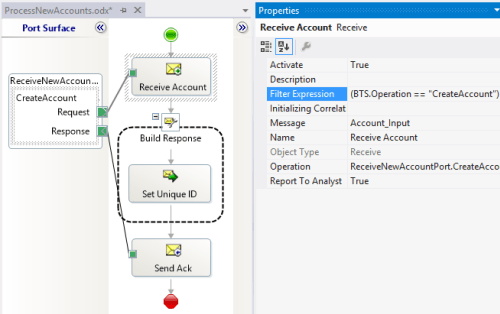

Next, I built an orchestration that received this message from a FILE adapter, routed a GET request to the currency conversion service, and then multiplied the source amount by the returned conversion rate. In the orchestration, I routed the original Invoice message to the GET service, even though I knew I’d have to strip out the body before completing the request. Also, the Exchange Rate API service returns its result as text (not XML or JSON), so I set the response message type as XmlDocument. I then built a helper component that took in the service response message and returned a string.

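```csharp
using System.IO;
using Microsoft.XLANGs.BaseTypes;

public static class Utilities
{
    // Returns the first part of the service response as raw text.
    public static string ConvertMessageToString(XLANGMessage msg)
    {
        string retval = "0";

        // The response body is plain text, so retrieve it as a stream and read it to the end.
        using (StreamReader reader = new StreamReader((Stream)msg[0].RetrieveAs(typeof(Stream))))
        {
            retval = reader.ReadToEnd();
        }

        return retval;
    }
}
```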
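The multiplication step itself isn't shown as code in this post, so here is a minimal sketch of what it could look like, assuming the service returns nothing but the rate as plain text. `CurrencyMath` and `ConvertAmount` are hypothetical names for illustration and were not part of my project.

```csharp
using System;
using System.Globalization;

// Hypothetical helper (not part of the original project).
public static class CurrencyMath
{
    // Multiplies an invoice amount by a conversion rate supplied as text.
    // Assumption: rateText holds only a number, possibly padded with whitespace or a newline.
    public static decimal ConvertAmount(decimal originalAmount, string rateText)
    {
        // Parse culture-invariantly so "1.039359" means the same thing on any server locale.
        decimal rate = decimal.Parse(rateText.Trim(), NumberStyles.Float, CultureInfo.InvariantCulture);

        return originalAmount * rate;
    }
}
```

An orchestration expression could then pass in the string returned by `ConvertMessageToString`, for example `CurrencyMath.ConvertAmount(100m, "1.039359")`, where 1.039359 is the AUD-to-USD rate implied by the sample output later in this post. Using `decimal` rather than `double` is a design choice here to avoid binary floating-point rounding on currency values.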

Here’s the final orchestration.

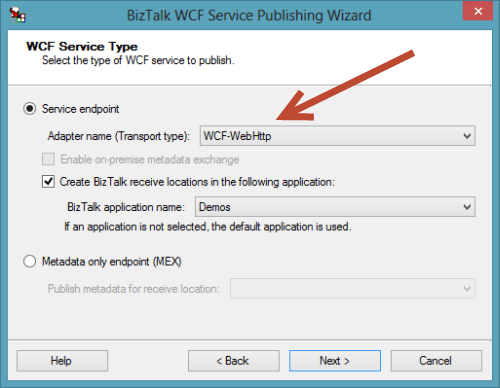

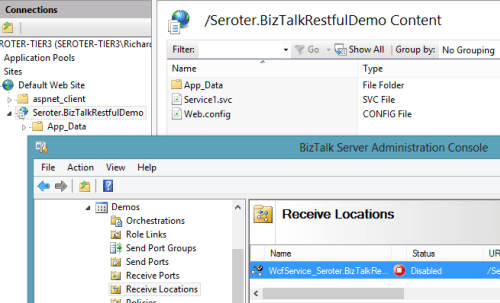

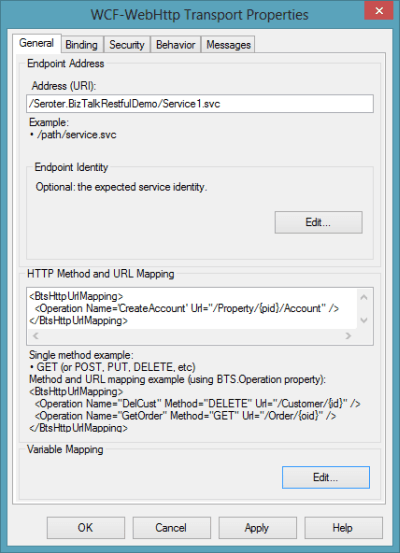

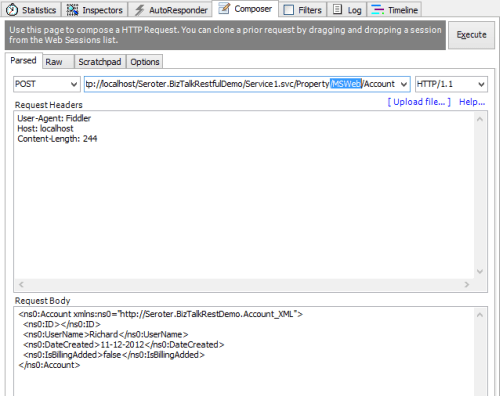

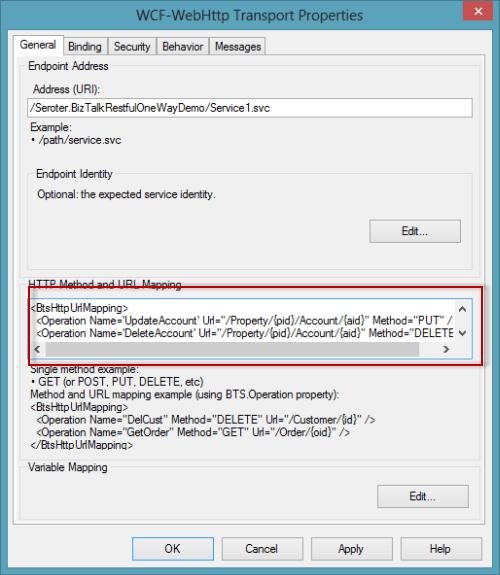

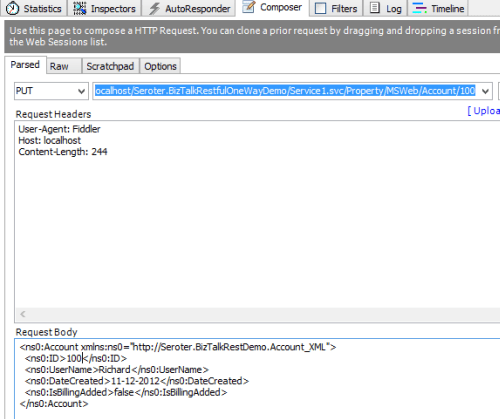

After building and deploying this BizTalk project (with the two schemas and one orchestration), I created a FILE receive location to pull in the original invoice. I then configured a WCF-WebHttp send port. First, I set the base address to the Exchange Rate API URL, and then set an operation (which matched the name of the operation I set on the orchestration send port) that mapped to the GET verb with a parameterized URL.

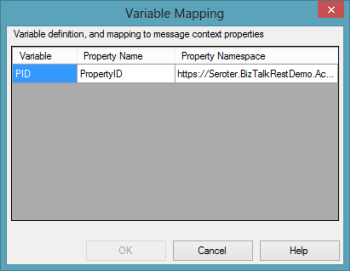

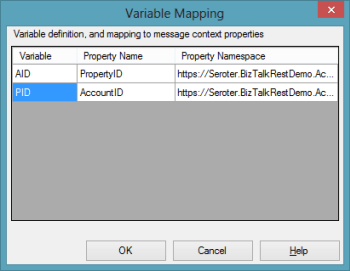

I set those URL parameters by clicking the Edit button under Variable Mapping and choosing which property schema value mapped to each URL parameter.
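For readers who can't make out the screenshots, the operation mapping on a WCF-WebHttp send port is a small XML fragment. What follows is only a sketch of its shape: the operation name `GetRate` and the placeholders `fromCurrency` and `toCurrency` are hypothetical stand-ins, not the exact values I configured.

```xml
<!-- Sketch only: operation name and URL placeholders are assumed for illustration. -->
<BtsHttpUrlMapping>
  <!-- Name must match the operation on the orchestration's logical send port. -->
  <Operation Name="GetRate" Method="GET" Url="/{fromCurrency}/{toCurrency}" />
</BtsHttpUrlMapping>
```

Each placeholder in curly braces is what the Variable Mapping dialog then ties to a promoted property from the property schema.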

This scenario was nearly done. All that was left was to strip out the body of the message so that the GET wouldn’t fail. Fortunately, Saravana Kumar already built a simple pipeline component that erases the message body. I built the pipeline component, added it to a custom pipeline, and deployed the pipeline.

Finally, I made sure that my send port used this new pipeline.

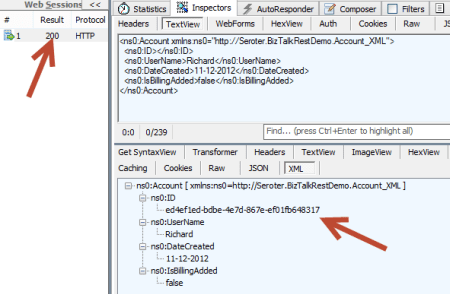

With all my receive/send ports created and configured, and my orchestration enlisted, I dropped a sample file into a folder monitored by the FILE receive adapter. This sample invoice was for 100 Australian dollars, and I wanted the output invoice to translate that amount to U.S. dollars. Sure enough, the REST service was called, and I got back a modified invoice.

```xml
<ns0:Invoice xmlns:ns0="http://Seroter.BizTalkRestDemo">
  <ID>100</ID>
  <CustomerID>10022</CustomerID>
  <OriginalInvoiceAmount>100</OriginalInvoiceAmount>
  <OriginalInvoiceCurrency>AUD</OriginalInvoiceCurrency>
  <ConvertedInvoiceAmount>103.935900</ConvertedInvoiceAmount>
  <ConvertedInvoiceCurrency>USD</ConvertedInvoiceCurrency>
</ns0:Invoice>
```

So we can see that GET works pretty well (and should prove to be VERY useful as more and more services switch to a RESTful model), but you have to be careful about both the URLs you access and the body you (don’t) send.

Scenario #2: Invoking a “DELETE” Command Via Messaging Only

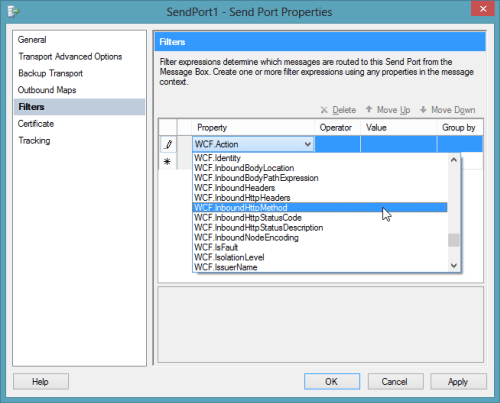

Let’s try a messaging-only solution that avoids orchestration and calls a service with a DELETE verb. For fun, I wanted to try using the WCF-WebHttp adapter with the “single operation format” instead of the XML format that lets you list multiple operations, verbs and URLs.

In this case, I wrote an ASP.NET Web API service that defines an “Invoice” model, and has a controller with a single operation that responds to DELETE requests (and writes a trace statement).

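```csharp
using System.Net;
using System.Net.Http;
using System.Web.Http;

public class InvoiceController : ApiController
{
    // Responds to DELETE requests for an invoice and traces the requested ID.
    public HttpResponseMessage DeleteInvoice(string id)
    {
        System.Diagnostics.Debug.WriteLine("Deleting invoice ... " + id);

        return new HttpResponseMessage(HttpStatusCode.NoContent);
    }
}
```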

With my REST service ready to go, I created a new send port that would subscribe directly to the input message and call this service. The structure of the “single operation format” isn’t really explained, so I surmised that all it included was the HTTP verb that would be executed against the adapter’s URL. So, the URL must be fixed, and cannot contain any dynamic parameter values. For instance:

To be sure, the scenario above makes zero sense. You’d never really hardcode a URL that pointed to a specific transaction resource. HOWEVER, there could be a reference data URL (think of lists of US states, or current currency values) that might be fixed and useful to embed in an adapter. Nonetheless, my demos aren’t always about making sense, but about flexing the technology. So, I went ahead and started this send port (WITHOUT changing its pipeline from “passthrough” to “remove body”) and dropped an invoice file to be picked up. Sure enough, the file was picked up, the service was called, and the output was visible in my Visual Studio 2012 output window.

Interestingly enough, the call to DELETE did NOT require me to suppress the message body. It seems that Microsoft doesn’t explicitly forbid this, even though payloads aren’t typically sent as part of DELETE requests.

Summary

In these two articles, we looked at REST support in BizTalk Server 2013 (beta). Overall, I like what I see. SOAP services aren’t going anywhere anytime soon, but the trend is clear: more and more services use a RESTful API, and a modern integration bus has to adapt. I’d like to see more JSON support, but admittedly haven’t tried those scenarios with these adapters.

What do you think? Will the addition of REST adapters make your life easier for both exposing and consuming endpoints?