Just a quick FYI that my new book, Applied Architecture Patterns on the Microsoft Platform, can now be pre-ordered on Amazon.com. I’m reviewing final prints now, so hopefully we’ll have this out the door and in your hands shortly.

Category: General Architecture

-

Cloud Provider Request: Notification of Exceeded Cost Threshold

I wonder if one of the things that keeps some developers from constantly playing with shiny cloud technologies is a nagging concern that they’ll accidentally ring up a life-altering usage bill. We’ve probably all heard horror stories of someone who accidentally left an Azure web application running for a long time, or kept an Amazon AWS EC2 image online for a month, and was shocked by the eventual charges. What do I want? I want a way to define a cost threshold for my cloud usage and have the provider email me as soon as I reach that value.

Ideally, I’d love a way to set up a complex condition based on various sub-services or types of charges. For instance, if bandwidth exceeds X, or Azure AppFabric exceeds Y, then send me an SMS message. But I’m easy; I’d be thrilled if Microsoft emailed me the minute I spent more than $20 on anything related to Azure. Can this be that hard? I would think that cloud providers are constantly accruing my usage (bandwidth, compute cycles, storage) and could use an event-driven architecture to send off events for computation at regular intervals.
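To make the idea concrete, here is a minimal sketch of that kind of threshold check in C#. It is only an illustration: the charge data, the `CheckThreshold` helper, and the alert text are all hypothetical, and no real cloud provider billing API is involved. It accrues charges as they arrive and raises a single alert the first time the running total passes the limit.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical usage charges (service name, cost in dollars); not real billing data.
var charges = new (string Service, decimal Cost)[]
{
    ("compute", 8.00m),
    ("bandwidth", 7.50m),
    ("storage", 6.25m),
    ("compute", 3.00m),
};

// Walks the charges in arrival order and returns one alert message
// the first time the running total exceeds the threshold.
static List<string> CheckThreshold(
    IEnumerable<(string Service, decimal Cost)> events, decimal threshold)
{
    var messages = new List<string>();
    decimal runningTotal = 0m;
    bool notified = false;

    foreach (var charge in events)
    {
        runningTotal += charge.Cost;
        if (!notified && runningTotal > threshold)
        {
            messages.Add(
                $"Threshold of {threshold} exceeded: running total is {runningTotal} after a {charge.Service} charge");
            notified = true; // alert once, not on every later charge
        }
    }
    return messages;
}

var alerts = CheckThreshold(charges, 20.00m);
foreach (var alert in alerts)
    Console.WriteLine(alert);
```

A real provider would presumably publish the alert as an event to an email or SMS channel rather than return a string. Raising it only once avoids a flood of messages after the limit is crossed.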

If I’m being greedy, I want this for ANY variable-usage bill in my life. If you got an email during the summer from your electric company that said “Hey Frosty, you might want to turn off the air conditioner since it’s ten days into the billing cycle and you’ve already rung up a bill equal to last month’s total”, wouldn’t you alter your behavior? Why are most providers stuck in a classic BI model (find out things whenever reports are run) vs. a more event-driven model? Surprise bills should be a thing of the past.

Are you familiar with any providers who let you set charge limits or proactively send notifications? Let’s make this happen, please.

-

Updated Ways to Store Data in BizTalk SSO Store

One of my more popular tools has been the BizTalk SSO Configuration Data Storage Tool. At the time I built that, there was no easy way to store and manage Single Sign On (SSO) applications that were used purely for secure key/value pair persistence.

Since that time, a few folks (that I know of) have taken my tool and made it better. You’ll find improvements from Paul Petrov here (with update mentioned here), and most recently by Mark Burch at BizTorque.net. Mark mentioned in his post that Microsoft had stealthily released a tool that also served the purpose of managing SSO key/values, so I thought I’d give the Microsoft tool a quick whirl.

First off, I downloaded my own SSO tool, which I admittedly haven’t had a need to use for quite some time. I was thrilled that it worked fine on my new BizTalk 2010 machine.

I created (see above) a new SSO application named SeroterToolApp which holds two values. I then installed the fancy new Microsoft tool which shows up in the Start Menu under SSO Application Configuration.

When you open the tool, you’ll find a very simple MMC view that has Private SSO Application Configuration as the root in the tree. Somewhat surprisingly, this tool does NOT show the SSO application I just created above in my own tool. Microsoft elitists think my application isn’t good enough for them.

So let’s create an application here and see if my tool sees it. I right-click that root node in the tree and choose to add an application. You see that I also get an option to import an application and choosing this prompts me for a “*.sso” file saved on disk.

After adding a new application, I right-clicked the application and chose to rename it.

After renaming it MicrosoftToolApp, I once again right-clicked the application and added a key value pair. It’s nice that I can create the key and set its value at the same time.

I added one more key/value pair to the application. Then, when you click the application name in the MMC console, you see all the key/value pairs contained in the application.

Now we saw earlier that the application created within my tool does NOT show up in this Microsoft tool, but what about the other way around? If I try and retrieve the application created in the Microsoft tool, sure enough, it appears.

For bonus points, I tried to change the value of one of the keys from my tool, and that change is indeed reflected in the Microsoft tool.

So this clearly shows that I am a much better developer than anyone at Microsoft. Or more likely, it shows that somehow the applications that my tool creates are simply invisible to Microsoft products. If anyone gets curious and wants to dig around, I’d be somewhat interested in knowing why this is the case.

It’s probably a safe bet moving forward to use the Microsoft tool to securely store key/value pairs in Enterprise Single Sign On. That said, if using my tool continues to bring joy into your life, then by all means, keep using it!

-

6 Things to Know About Microsoft StreamInsight

Microsoft StreamInsight is a new product included with SQL Server 2008 R2. It is Microsoft’s first foray into the event stream processing and complex event processing market that already has its share of mature products and thought leaders. I’ve spent a reasonable amount of time with the product over the past 8 months and thought I’d try and give you a quick look at the things you should know about it.

- Event processing is about continuous intelligence. An event can be all sorts of things ranging from a customer’s change of address to a meter read on an electrical meter. When you have an event-driven architecture, you’re dealing with asynchronous communication of data as it happens to consumers who can choose how to act upon it. The term “complex event processing” refers to gathering knowledge from multiple (simple) business events into smaller sets of summary events. I can join data from multiple streams and detect event patterns that may not have been visible without the collective intelligence. Unlike traditional database-driven applications where you constantly submit queries against a standing set of data, an event processing solution deploys a set of compiled queries that the event data passes through. This is a paradigm shift for many, and can be tricky to get your head around, but it’s a compelling way to complement an enterprise business intelligence strategy and improve the availability of information to those who need it.

- Queries are written using LINQ. The StreamInsight team chose LINQ as their mechanism for authoring declarative queries. As you would hope, you can write a fairly wide set of queries that filter content, join distinct streams, perform calculations and much more. What if I wanted to have my customer call center send out a quick event whenever a particular product was named in a customer complaint? My query can filter out all the other products that get mentioned and amplify events about the target product:

    var filterQuery = from e in callCenterInputStream
                      where e.Product == "Seroterum"
                      select e;

One huge aspect of StreamInsight queries relates to aggregation. Individual event calculation and filtering is cool, but what if we want to know what is happening over a period of time? This is where windows come into play. If I want to perform a count, average, or summation of events, I need to specify a particular time window that I’m interested in. For instance, let’s say that I wanted to know the most popular pages on a website over the past fifteen minutes, and wanted to recalculate that total every minute. So every minute, calculate the count of hits per page over the past fifteen minutes. This is called a Hopping Window.

    var activeSessions = from w in websiteInputStream
                         group w by w.PageName into pageGroup
                         from x in pageGroup.HoppingWindow(
                             TimeSpan.FromMinutes(15),
                             TimeSpan.FromMinutes(1),
                             HoppingWindowOutputPolicy.ClipToWindowEnd)
                         select new PageSummarySummary
                         {
                             PageName = pageGroup.Key,
                             TotalRequests = x.Count()
                         };

I’ll have more on this topic in a subsequent blog post but for now, know that there are additional windows available in StreamInsight and I HIGHLY recommend reading this great new paper on the topic from the StreamInsight team.

- Queries can be reused and chained. A very nice aspect of an event processing solution is the ability to link together queries. Consider a scenario where the first query takes thousands of events per second and filters out the noise and leaves me only with a subset of events that I care about. I can use the output of that query in another query which performs additional calculations or aggregation against this more targeted event stream. Or, consider a “pub/sub” scenario where I receive a stream of events from one source but have multiple output targets. I can take the results from one stream and leverage it in many others.

- StreamInsight uses an adapter model for the input and output of data. When you build up a StreamInsight solution, you end up creating or leveraging adapters. The product doesn’t come with any production-level adapters yet, but fortunately there are a decent number of best-practice samples available. In my upcoming book I show you how to build an MSMQ adapter which takes data from a queue and feeds it into the StreamInsight engine. Adapters can be written in a generic, untyped fashion and therefore support easy reuse, or, they can be written to expect a particular event payload. As you’d expect, it’s easier to write a specific adapter, but there are obviously long term benefits to building reusable, generic adapters.

- There are multiple hosting options. If you choose, you can create an in-process StreamInsight server which hosts queries and uses adapters to connect to data publishers and consumers. This is probably the easiest option to build, and you get the most control over the engine. There is also an option to use a central StreamInsight server which installs as a Windows Service on a machine. Whereas the first option leverages a “Server.Create()” operation, the latter option uses a “Server.Connect()” manner for working with the Engine. I’m writing a follow up post shortly on how to leverage the remote server option, so stay tuned. For now, just know that you have choices for hosting.

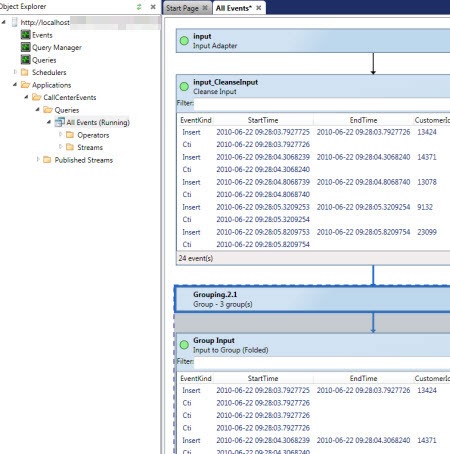

- Debugging in StreamInsight is good, but overall administration is immature. The product ships with a fairly interesting debugging tool which also acts as the only graphical UI for doing rudimentary management of a server. For instance, when you connect to a server (in process or hosted) you can see the “applications” and queries you’ve deployed.

When a query is running, you can choose to record the activities, and then play back the stream. This is great for seeing how your query was processed across the various LINQ operations (e.g. joins, counts).

Also baked into the Debugger are some nice root cause analysis capabilities and tracing of an event through the query steps. You also get a fair amount of server-wide diagnostics about the engine and queries. However, there are no other graphical tools for administering the server. You’ll find yourself writing code or using PowerShell to perform other administrative tasks. I expect this to be an area where you see a mix of community tools and product group samples fill the void until future releases produce a more robust administration interface.
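As a rough illustration of the hopping window and query chaining ideas in the list above, the sketch below uses plain LINQ to Objects over an in-memory array rather than the StreamInsight engine. The event data, the `HoppingCount` helper, and the treatment of each event as a single point in time are assumptions made for the example; StreamInsight’s own window semantics are richer than this.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical call-center events: (product mentioned, minutes since start).
var callCenterEvents = new (string Product, int Minute)[]
{
    ("Seroterum", 0), ("Otherol", 2), ("Seroterum", 3),
    ("Seroterum", 16), ("Otherol", 17),
};

// Query 1: the filter from the post, run over an array instead of a stream.
var filterQuery = from e in callCenterEvents
                  where e.Product == "Seroterum"
                  select e;

// Query 2: consumes the output of query 1 and counts events per hopping window.
// Each window covers [end - size, end) and a new window closes every `hop` minutes.
// Empty windows are skipped.
static IEnumerable<(int WindowEnd, int Total)> HoppingCount(
    IEnumerable<(string Product, int Minute)> source, int size, int hop, int lastEnd)
{
    var events = source.ToList();
    for (int end = hop; end <= lastEnd; end += hop)
    {
        int start = end - size;
        int total = events.Count(e => e.Minute >= start && e.Minute < end);
        if (total > 0)
            yield return (end, total);
    }
}

// Chain the two: 15-minute windows, recalculated every minute, up to minute 20.
var windowCounts = HoppingCount(filterQuery, size: 15, hop: 1, lastEnd: 20).ToList();

foreach (var w in windowCounts)
    Console.WriteLine($"window ending at minute {w.WindowEnd}: {w.Total} complaint(s)");
```

Because the second step only sees what the filter lets through, the noisy events never reach the aggregation. The same `filterQuery` could feed other consumers as well, which is the “pub/sub” shape described above.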

That’s StreamInsight in a nutshell. If you want to learn more, I’ve written a chapter about StreamInsight in my upcoming book, and also maintain a StreamInsight Resources page on the book’s website.

-

I’m Heading to Sweden to Deliver a 2-Day Workshop

The incomparable Mikael Håkansson has just published the details of my next visit to Sweden this September. After I told Mikael about my latest book, we thought it might be epic to put together a 2 day workshop that highlights the “when to use what” discussion. Two of my co-authors, Stephen Thomas and Ewan Fairweather, will be joining me for a busy couple of days at the Microsoft Sweden office. This is the first time that Stephen and Ewan have seen my agenda, so, surprise guys!

We plan to summarize each core technology in the Microsoft application platform and then dig into six of the patterns that we discuss in the book. I hope this is a great way to introduce a broad audience to the nuances of each technology and have a spirited discussion of how to choose the best tool for a given situation.

If other user groups would be interested in us repeating this session, let me know. We take payment in the form of plane tickets, puppies or gold bullion.

-

Announcing My New Book: Applied Architecture Patterns on the Microsoft Platform

So my new book is available for pre-order here and I’ve also published our companion website. This is not like any technical book you’ve read before. Let me back up a bit.

Last May (2009) I was chatting with Ewan Fairweather of Microsoft and we agreed that with so many different Microsoft platform technologies, it was hard for even the most ambitious architect/developer to know when to use which tool. A book idea was born.

Over the summer, Ewan and I started crafting a series of standard architecture patterns that we wanted to figure out which Microsoft tool solved best. We also started the hunt for a set of co-authors to bring expertise in areas where we were less familiar. At the end of the summer, Ewan and I had suckered in Stephen Thomas (of BizTalk fame), Mike Sexton (top DB architect at Avanade) and Rama Ramani (Microsoft guy on AppFabric Caching team). All of us finally pared down our list of patterns to 13 and started off on this adventure. Packt Publishing eagerly jumped at the book idea and started cracking the whip on the writing phase.

So what did we write? Our book starts off by briefly explaining the core technologies in the Microsoft application platform including Windows Workflow Foundation, Windows Communication Foundation, BizTalk Server, SQL Server (SSIS and Service Broker), Windows Server AppFabric, Windows Azure Platform and StreamInsight. After these “primer” chapters, we have a discussion about our Decision Framework that contains our organized approach to assessing technology fit to a given problem area. We then jump into our Pattern chapters where we first give you a real world use case, discuss the pattern that would solve the problem, evaluate multiple candidate architectures based on different application technologies, and finally select a winner prior to actually building the “winning” solution.

In this book you’ll find discussion and deep demonstration of all the key parts of the Microsoft application platform. This book isn’t a tutorial on any one technology, but rather, it’s intended to provide the busy architect/developer/manager/executive with an assessment of the current state of Microsoft’s solution offerings and how to choose the right one to solve your problem.

This is a different kind of book. I haven’t seen anything like it. Either you will love it or hate it. I sincerely hope it’s the former, as we’ve spent over a year trying to write something interesting, had a lot of fun doing it, and hope that energy comes across to the reader.

So go out there and pre-order, or check out the site that I set up specifically for the book: http://AppliedArchitecturePatterns.com.

I’ll be sure to let you all know when the book ships!

-

Top 9 Things To Focus On When Learning New Platform Technologies

Last week I attended the Microsoft Convergence conference in Atlanta, GA where I got a deeper dive into a technology that I’m spending a lot of time with right now. You could say that Microsoft Dynamics CRM and I are seriously dating and she’s keeping things in my medicine cabinet.

While sitting in a tips-and-tricks session, I started jotting down a list of things that I should focus on to REALLY understand how to use Dynamics CRM to build a solution. Microsoft is pitching Dynamics CRM as a multi-purpose platform (labeled xRM) for those looking to build relational database-driven apps that can leverage the Dynamics CRM UI model, security framework, data structure, etc.

I realized that my list of platform “to do” things would be virtually identical for ANY technology platform that I was picking up and trying to use efficiently. Whether BizTalk Server, Force.com, Windows Communication Foundation, Google App Engine, or Amazon AWS Simple Notification Services, a brilliant developer can still get into trouble by not understanding a few core dimensions of the platform. Just because you’re a .NET rock star it doesn’t mean you could pick up WCF or BizTalk and just start building solutions.

So, if I were plopping down in front of a new platform and wanted to learn to use it correctly, I’d focus on (in order of importance) …

- Which things are configuration vs. code? To me, this seems like the one that could bite you the most (depending on the platform, of course). I’d hate to waste a few days coding up a capability that I later discovered was built in, and available with a checkbox. When do I have to jump into code, and when can I just flip some switches? For something like Force.com, there are just tons of configuration settings. If I don’t understand the key configurations and their impact across the platform, I will likely make the solution harder to maintain and less elegant. WCF has an almost embarrassing number of configurations that I could accidentally skip and end up wasting time writing a redundant service behavior.

- What are the core capabilities within the platform? You really need to know what this platform is good at. What are the critical subsystems and built in functions? This relates to the previous one, but does it have a security framework, logging, data access? What should I use/write outside services for, and what is baked right in? Functionally, what is it great at? I’d hate to misuse it because I didn’t grasp the core use cases.

- How do I interface with external sources? Hugely important. I like to know how to share data/logic/services from my platform with another, and how to leverage data/logic/services from another platform into mine.

- When do I plug-in vs. embed? I like to know when it is smartest to embed a particular piece of logic within a component instead of linking to an external component. For instance, in BizTalk, when would I write code in a map or orchestration versus call out to an external assembly? That may seem obvious to a BizTalk guru, but not so much to a .NET guru who picks up BizTalk for the first time. For Dynamics CRM, should I embed JavaScript on a form or reference an external JS file?

- What changes affect the object at an API level? This may relate to more UI-heavy platforms. If I add validation or authorization requirements to a data entity on a screen, do those validation and security restrictions also come into play when accessing the object via the API? Basically, I’m looking for which manipulations of an object are on a more superficial level versus all encompassing.

- How do you package up artifacts for source control or deployment? You can learn a lot about a platform by seeing how to package up a solution. Is it a hodge podge of configuration files or a neat little installable package? What are all the things that go into a deployment package? This may give me an appreciation for how to build the solution on the platform.

- What is shared between components of the framework? I like to know what artifacts are leveraged across all pieces of the platform. Are security roles visible everywhere? What if I build a code contract or schema and want to use it in multiple subsystems? Can I write a helper function and use that all over the place?

- What is supported vs. unsupported code/activities/tweaks? Most platforms that I’ve come across allow you to do all sorts of things that make your life harder later. So I like to know which things break my support contract (e.g. screwing with underlying database indexes), are just flat out disallowed (e.g. Google App Engine and saving files to the VM disk), or are just not a good idea. In Dynamics CRM, this could include the types of JavaScript that you can include on the page. There are things you CAN do, but they won’t survive an upgrade.

- Where to go for help? I like to hack around as much as the next guy, but I don’t have time to bang my head against the wall for hours on end just to figure out a simple thing. So one of the first things I look for is the support forums, best blogs, product whitepapers, etc.

Did I miss anything, or are my priorities (ranking) off?

-

Testing Service Oriented Solutions

A few days back, the Software Engineering Institute (SEI) at Carnegie Mellon released a new paper called Testing in Service-Oriented Environments. This report contained 65 different SOA testing tips and I found it to be quite insightful. I figured that I’d highlight the salient points here, and solicit feedback for other perspectives on this topic.

The folks at SEI highlighted three main areas of focus for testing:

- Functionality. This may include not only whether the service itself behaves as expected, but also whether it can easily be located and bound to.

- Non-functional characteristics. Testing of quality attributes such as availability, performance, interoperability, and security.

- Conformance. Do the service artifacts (WSDL, HTTP codes, etc) comply with known standards?

I thought that this was a good way to break down the testing plan. When breaking down all the individual artifacts to test, they highlighted the infrastructure itself (web servers, databases, ESB, registries), the web services (whether they be single atomic services, or composite services), and what they call end-to-end threads (combination of people/processes/systems that use the services to accomplish business tasks).

There’s a good list here of the challenges that we face when testing service oriented applications. This could range from dealing with “black box” services where source code is unavailable, to working in complex environments where multiple COTS products are mashed together to build the solution. You can also be faced with incompatible web service stacks, differences in usage of a common semantic model (you put “degrees” in Celsius but others use Fahrenheit), diverse sets of fault handling models, evolution of dependent services or software stacks, and much more.

There’s a good discussion around testing for interoperability which is useful reading for BizTalk guys. If BizTalk is expected to orchestrate a wide range of services across platforms, you’ll want some sort of agreements in place about the interoperability standards and data models that everyone supports. You’ll also find some useful material around security testing which includes threat modeling, attack surface assessment, and testing of both the service AND the infrastructure.

There’s lots more here around testing other quality attributes (performance, reliability), testing conformance to standards, and general testing strategies. The paper concludes with the full list of all 65 tips.

I didn’t add much of my own commentary in this post, but I really just wanted to highlight the underrated aspect of SOA that this paper clearly describes. Are there other things that you all think of when testing services or service-oriented applications?

-

Interview Series: Four Questions With … Udi Dahan

Welcome to the 19th interview in my series of chats with thought leaders in the “connected technologies” space. This month we have the pleasure of chatting with Udi Dahan. Udi is a well-known consultant, blogger, Microsoft MVP, author, trainer and lead developer of the nServiceBus product. You’ll find Udi’s articles all over the web in places such as MSDN Magazine, Microsoft Architecture Journal, InfoQ, and Ladies Home Journal. Ok, I made up the last one.

Let’s see what Udi has to say.

Q: Tell us a bit about why you started the nServiceBus project, what gaps it fills for architects/developers, and where you see it going in the future.

A: Back in the early 2000s I was working on large-scale distributed .NET projects and had learned the hard way that synchronous request/response web services don’t work well in that context. After seeing how these kinds of systems were built on other platforms, I started looking at queues – specifically MSMQ, which was available on all versions of Windows. After using MSMQ on one project and seeing how well that worked, I started reusing my MSMQ libraries on more projects, cleaning them up, making them more generic. By 2004 all of the difficult transaction, threading, and fault-tolerance capabilities were in place. Around that time, the API started to change to be more framework-like – it called your code, rather than your code calling a library. By 2005, most of my clients were using it. In 2006 I finally got the authorization I needed to make it fully open source.

In short, I built it because I needed it and there wasn’t a good alternative available at the time.

The gap that NServiceBus fills for developers and architects is most prominently its support for publish/subscribe communication – which to this day isn’t available in WCF, SQL Server Service Broker, or BizTalk. Although BizTalk does have distribution list capabilities, it doesn’t allow for transparent addition of new subscribers – a very important feature when looking at version 2, 3, and onward of a system.

Another important property of NServiceBus that isn’t available with WCF/WF Durable Services is its “fault-tolerance by default” behaviors. When designing a WF workflow, it is critical to remember to perform all Receive activities within a transaction, and that all other activities processing that message stay within that scope – especially send activities, otherwise one partner may receive a call from our service but others may not – resulting in global inconsistency. If a developer accidentally drags an activity out of the surrounding scope, everything continues to compile and run, even though the system is no longer fault tolerant. With NServiceBus, you can’t make those kinds of mistakes because of how the transactions are handled by the infrastructure and that all messaging is enlisted into the same transaction.

There are many other smaller features in NServiceBus which make it much more pleasurable to work with than the alternatives as well as a custom unit-testing API that makes testing service layers and long-running processes a breeze.

Going forward, NServiceBus will continue to simplify enterprise development and take that model to the cloud by providing Azure implementations of its underlying components. Developers will then have a unified development model both for on-premise and cloud systems.

Q: From your experiences doing training, consulting and speaking, what industries have you found to be the most forward-thinking on technology (e.g. embracing new technologies, using paradigms like EDA), and which industries are the most conservative? What do you think the reasons for this are?

A: I’ve found that it’s not about industries but people. I’ve met forward-thinking people in conservative oil and gas companies and very conservative people in internet startups, and of course, vice-versa. The higher-up these forward-thinking people are in their organization, the more able they are to effect change. At that point, it becomes all personalities and politics and my job becomes more about organizational psychology than technology.

Q: Where do you see the value (if any) in modeling during the application lifecycle? Did you buy into the initial Microsoft Oslo vision of the “model” being central to the envisioning, design, build and operations of an application? What’s your preferential tool for building models (e.g. UML, PowerPoint, paper napkin)?

A: For this, allow me to quote George E. P. Box: “Essentially, all models are wrong, but some are useful.”

My position on models is similar to Eisenhower’s position on plans – while I wouldn’t go so far as to say “models are useless but modeling is indispensable”, I would put much more weight on the modeling activity (and many of its social aspects) than on the resulting model. The success of many projects hinges on building that shared vocabulary – not only within the development group, but across groups like business, dev, test, operations, and others; what is known in DDD terms as the “ubiquitous language”.

I’m not a fan of “executable pictures” and am more in the “UML as a sketch” camp so I can’t say that I found the initial Microsoft Oslo vision very compelling.

Personally, I like Sparx Systems tool – Enterprise Architect. I find that it gives me the right balance of freedom and formality in working with technical people.

That being said, when I need to communicate important aspects of the various models to people not involved in the modeling effort, I switch to PowerPoint where I find its animation capabilities very useful.

Q [stupid question]: April Fool’s Day is upon us. This gives us techies a chance to mess with our colleagues in relatively non-destructive ways. I’m a fan of pranks like:

- Turning on Sticky Keys

- Setting someone’s default font color to “white”

- Taking a screen grab of someone’s desktop, hiding all their desktop icons, and then setting their desktop wallpaper to the screen grab image

- Configuring the “Blue Screen of Death” screensaver

- Switching the handle on the office refrigerator door

Tell us Udi, what sort of geek pranks you’d find funny on April Fool’s Day.

A: This reminds me why I always lock my machine when I’m not at my desk 🙂

I hadn’t heard of switching the handle of the refrigerator before so, for sheer applicability to non-geeks as well, I’d vote for that one.

The first lesson I learned as a consultant was to lock my laptop when I left it alone. Not because of data theft, but because my co-workers were monkeys. All it took to teach me this point was coming back to my desk one day and finding that my browser home page was reset and displaying MenWhoLookLikeKennyRogers.com. Live and learn.

Thanks Udi for your insight.

-

Project Plan Activities for an Architect

I’m the lead architect on a large CRM project that is about to start the Design phase (or in my RUP world, “Elaboration”), and my PM asked me what architectural tasks belong in her project plan for this phase of work. I don’t always get asked this question on projects as there’s usually either a large “system design” bucket, or, just a couple high level tasks assigned to the project architect.

So, I have three options here:

- Ask for one giant “system design” task that goes for 4 months. On the plus side, this lets the design process be fairly fluid and doesn’t put the various design tasks into a strict linear path. However, this obviously makes tracking progress quite difficult and would force the PM to add 5-10 different “assigned to” parties because the various design tasks involve different parts of the organization.

- Go hyper-detailed and list every possible design milestone. The PM started down this path, but I’m not a fan. I don’t want every single system integration, or software plug-in choice called out as specific tasks. Those items must be captured and tracked somewhere (of course), but the project plan doesn’t seem to me to be the right place. It makes the plan too complicated and cross-referenced and makes maintenance such a chore for the PM.

- List high level design tasks which allow for segmentation of responsibility and milestone tracking. I naturally saved my personal preference for last, because that’s typically how my lists work. In this model, I break out “system design” into its core components.

So I provided the list below to my PM. I broke out each core task and flagged which dependencies are associated with each. If you “mouseover” the task title, I have a bit more text explaining what the details of that task are. Some of these tasks can and will happen simultaneously, so don’t totally read this as a linear sequence. I’d be interested in all of your feedback.

| # | Task | Dependency |
|---|------|------------|
| 1 | System Design | |
| 2 | Capture System Use Cases | Functional requirements; Non-functional requirements |
| 3 | Record System Dependencies | Functional requirements |
| 4 | Identify Data Sources | Functional requirements |
| 5 | List Design Constraints | Functional requirements; Non-functional requirements; [Task 2] [Task 3] [Task 4] |
| 6 | Build High Level Data Flow | Functional requirements; [Task 2] [Task 3] [Task 4] |
| 7 | Catalog System Interfaces | Functional requirements; [Task 6] |
| 8 | Outline Security Strategy | Functional requirements; Non-functional requirements; [Task 5] |
| 9 | Define Deployment Design | Non-functional requirements |
| 10 | Design Review | |
| 11 | Organization Architecture Board Review | [Task 1] |
| 12 | Team Peer Review | [Task 11] |

Hopefully this provides enough structure to keep track of key milestones, but not so much detail that I’m constantly updating the minutiae of my progress. How do your projects typically track architectural progress during a design phase?