While in Europe last week presenting at the Integration Days event, I showed off a few demonstrations of cool new technologies working alongside existing integration tools. One of those demos combined SignalR and BizTalk Server in a novel way.

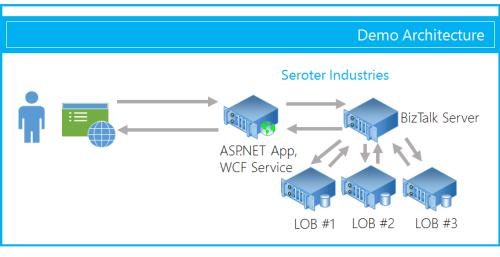

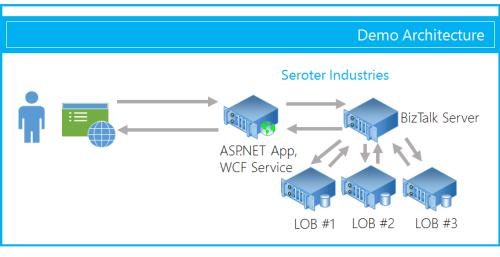

One of the use cases for an integration bus like BizTalk Server is to aggregate data from multiple back-end systems and return a composite message (also known as the Scatter-Gather pattern). In some cases, it may make sense to do this as part of a synchronous endpoint, where a web service caller makes a request and BizTalk returns an aggregated response. However, we all know that BizTalk Server’s durable messaging architecture introduces latency into the communication flow, and this sort of synchronous operation may not scale well when the number of callers goes way up. So how can we deliver a high-performing, scalable solution that accommodates today’s highly interactive web applications? In the solution I built, I used ASP.NET and SignalR to send incremental messages from BizTalk back to the calling web application.
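To make the latency problem concrete, here is a rough JavaScript sketch of the classic, aggregated scatter-gather. It is not BizTalk code: `queryInventorySystem`, the provider names, the delays, and the stock numbers are all made up for illustration. The point is simply that a composite response can't be returned until the slowest back end has answered.

```javascript
// Hypothetical stand-in for a back-end inventory system: resolves after
// delayMs, similar to using a delay to simulate work in an orchestration branch.
function queryInventorySystem(providerId, itemId, delayMs, stockAmount) {
  return new Promise((resolve) => {
    setTimeout(() => resolve({ providerId, itemId, stockAmount }), delayMs);
  });
}

// Classic scatter-gather: fan out to every system, wait for all of them, then
// return one composite message. The caller waits as long as the slowest branch.
async function lookupAggregated(itemId) {
  const started = Date.now();
  const responses = await Promise.all([
    queryInventorySystem("A", itemId, 300, 12),
    queryInventorySystem("B", itemId, 100, 5),
    queryInventorySystem("C", itemId, 200, 0),
  ]);
  return { itemId, responses, elapsedMs: Date.now() - started };
}

lookupAggregated("PART-42").then((result) => {
  // elapsedMs is roughly 300: nothing comes back until provider A answers.
  console.log(result.responses, `${result.elapsedMs} ms`);
});
```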

The end user wants to search for product inventory that may be recorded in multiple systems. We don’t want our web application to have to query these systems individually, and would rather put an aggregator in the middle. Instead of exposing the scatter-gather BizTalk orchestration in a request-response fashion, I’ve chosen to expose an asynchronous inbound channel and then send messages back to the ASP.NET web application as soon as each inventory system responds.

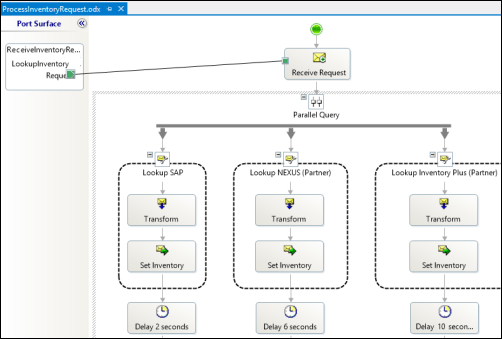

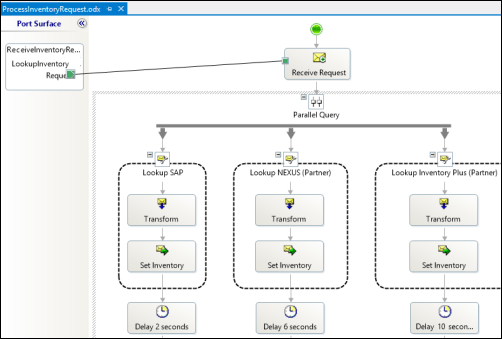

First off, I have a BizTalk orchestration. It takes in the inventory lookup request and makes a parallel query to three different inventory systems. In this demonstration, I don’t actually query back-end systems, but simulate the activity by introducing a delay into each parallel branch.

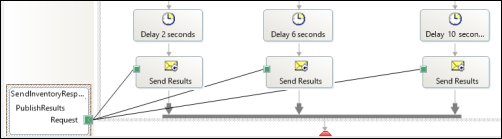

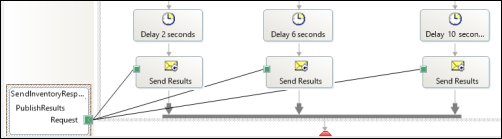

As each branch concludes, I send the response immediately to a one-way send port. This is in contrast to the “standard” scatter-gather pattern where we’d wait for all parallel branches to complete and then aggregate all the responses into a single message. This way, we are providing incremental feedback, a more responsive application, and protection against a poor-performing inventory system.
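Here is the same kind of hypothetical fan-out, reworked to behave like the orchestration described above: each branch hands its result to a callback (standing in for the one-way send port) the moment it finishes. Again, the names, delays, and numbers are invented. `Promise.allSettled` is used only so the caller can tell when every branch is done; a branch that fails simply never invokes the callback, and it does not hold up the others.

```javascript
// Hypothetical simulated back end: resolves after delayMs with a stock figure.
function queryInventorySystem(providerId, itemId, delayMs, stockAmount) {
  return new Promise((resolve) => {
    setTimeout(() => resolve({ providerId, itemId, stockAmount }), delayMs);
  });
}

// Fan out to every system; onResponse plays the role of the one-way send port
// and is invoked once per successful branch, in completion order.
function lookupIncremental(itemId, onResponse) {
  const systems = [
    { providerId: "A", delayMs: 300, stockAmount: 12 },
    { providerId: "B", delayMs: 100, stockAmount: 5 },
    { providerId: "C", delayMs: 200, stockAmount: 0 },
  ];
  const branches = systems.map((s) =>
    queryInventorySystem(s.providerId, itemId, s.delayMs, s.stockAmount).then(onResponse)
  );
  // Settles once every branch has finished or failed; results are already delivered by then.
  return Promise.allSettled(branches);
}

const arrived = [];
lookupIncremental("PART-42", (r) => {
  arrived.push(r.providerId);
  console.log(`Provider ${r.providerId} has ${r.stockAmount} units in stock.`);
}).then(() => console.log("arrival order:", arrived.join(", "))); // arrival order: B, C, A
```

Compared with waiting on every branch, the first result reaches the caller after the fastest back end (about 100 ms here) rather than the slowest.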

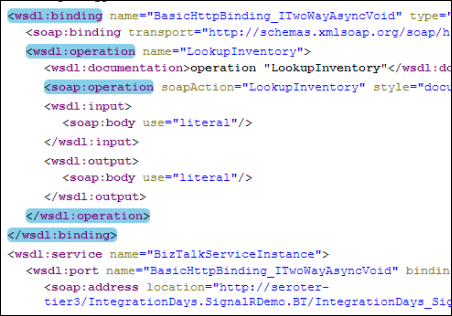

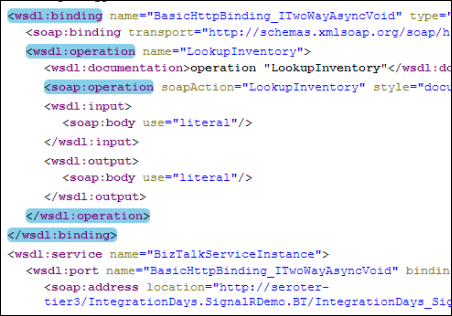

After building and deploying this solution, I walked through the WCF Service Publishing Wizard in order to create the web service on-ramp into the BizTalk orchestration.

I couldn’t yet create the BizTalk send port, as I didn’t have an endpoint to send the inventory responses to. Next up, I built the ASP.NET web application, which also hosts a WCF service for accepting the inventory messages. First, in a new ASP.NET project in Visual Studio, I added a service reference to my BizTalk-generated service. I then added the NuGet package for SignalR and a new class to act as my SignalR “hub.” The hub contains the server-side code that the client browser invokes. In this case, the client code needs to invoke a “lookup inventory” action, which forwards a request to BizTalk Server. Note that I’m acquiring and transmitting the unique connection ID associated with the particular browser client, so that BizTalk can hand it back with each response.

```csharp
using Microsoft.AspNet.SignalR;

public class NotifyHub : Hub
{
    /// <summary>
    /// Operation called by client code to look up inventory for a given item #
    /// </summary>
    /// <param name="itemId">Inventory part number entered by the user</param>
    public void LookupInventory(string itemId)
    {
        //get this caller's unique browser connection ID
        string clientId = Context.ConnectionId;

        //LookupService is the service reference to the BizTalk-generated WCF service
        var c = new LookupService.IntegrationDays_SignalRDemo_BT_ProcessInventoryRequest_ReceiveInventoryRequestPortClient();

        var req = new LookupService.InventoryLookupRequest();
        req.ClientId = clientId;
        req.ItemId = itemId;

        try
        {
            //invoke async service; results come back later through the send port
            c.LookupInventory(req);
            c.Close();
        }
        catch
        {
            //a faulted WCF client can't be closed cleanly, so abort it
            c.Abort();
            throw;
        }
    }
}
```
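The essential trick in the hub is correlation: the request that goes to BizTalk carries the caller's connection ID, and the call returns without waiting for any inventory results. Below is a small JavaScript model of that step. `OneWayChannel` and `lookupInventory` are hypothetical stand-ins for the generated WCF proxy; only the `ClientId` and `ItemId` field names come from the `InventoryLookupRequest` type above.

```javascript
// Hypothetical stand-in for the BizTalk-generated one-way WCF endpoint:
// it accepts a message and returns immediately, with no reply payload.
class OneWayChannel {
  constructor() {
    this.sent = [];
  }
  send(message) {
    this.sent.push(message);
  }
}

// Models NotifyHub.LookupInventory: stamp the caller's connection ID onto
// the request so responses can later be routed back to that browser.
function lookupInventory(connectionId, itemId, channel) {
  const request = { ClientId: connectionId, ItemId: itemId };
  channel.send(request);
  // Nothing is returned to the browser here; results arrive later via the hub.
}

const channel = new OneWayChannel();
lookupInventory("conn-1", "PART-42", channel);
lookupInventory("conn-2", "PART-99", channel);
console.log(channel.sent);
```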

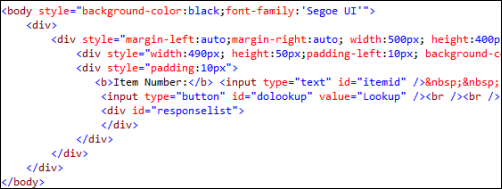

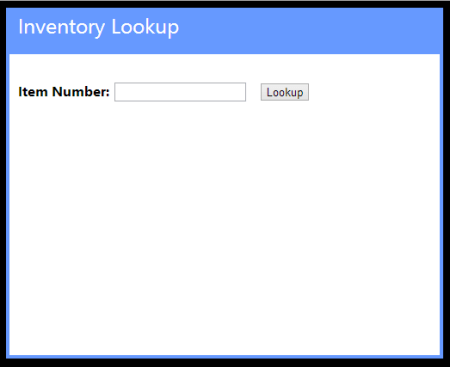

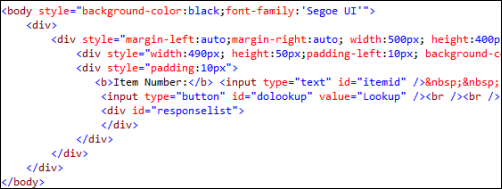

Next, I added a single Web Form to this ASP.NET project. There’s nothing in the code-behind file, as we’re dealing entirely with jQuery and client-side fun. The HTML markup of the page is pretty simple: a single textbox that accepts an inventory part number and a button that triggers a lookup. You’ll also notice a DIV with an ID of “responselist”, which will hold all the responses sent back from BizTalk Server.

The real magic of the page (and SignalR) happens in the head of the HTML page. Here I referenced all the necessary JavaScript libraries for SignalR and jQuery and established a reference to the server-side SignalR hub. Next, I created a function that the *server* can call when it has data for me; in other words, the *server* will call the “addLookupResponse” operation on my page. Awesome. Finally, I started up the connection and defined the click function that the button on the page triggers.

```html
<head runat="server">
    <title>Inventory Lookup</title>
    <!--Script references. -->
    <!--Reference the jQuery library. -->
    <script src="Scripts/jquery-1.6.4.min.js"></script>
    <!--Reference the SignalR library. -->
    <script src="Scripts/jquery.signalR-1.0.0-rc1.js"></script>
    <!--Reference the autogenerated SignalR hub script. -->
    <script src="<%= ResolveUrl("~/signalr/hubs") %>"></script>
    <!--Add script to update the page-->
    <script type="text/javascript">
        $(function () {
            // Declare a proxy to reference the hub.
            var notify = $.connection.notifyHub;

            // Create a function that the hub can call to deliver each inventory response.
            notify.client.addLookupResponse = function (providerId, stockAmount) {
                $('#responselist').append('<div>Provider <b>' + providerId + '</b> has <b>' + stockAmount + '</b> units in stock.</div>');
            };

            // Start the connection.
            $.connection.hub.start().done(function () {
                $('#dolookup').click(function () {
                    notify.server.lookupInventory($('#itemid').val());
                    $('#responselist').append('<div>Checking global inventory ...</div>');
                });
            });
        });
    </script>
</head>
```
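One thing worth hardening in the `addLookupResponse` callback: `providerId` originates in a back-end system and is concatenated straight into HTML, so any markup it contained would be rendered by the browser. A minimal sketch of escaping the values first is below; `escapeHtml` and `formatLookupResponse` are hypothetical helper names, not part of jQuery or SignalR. Building the elements with jQuery's `.text()` would achieve the same thing.

```javascript
// Escape the characters that are significant in HTML text and attribute values.
// "&" must be replaced first so the other entities aren't double-escaped.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Builds the same markup the page appends to #responselist, with values escaped.
function formatLookupResponse(providerId, stockAmount) {
  return "<div>Provider <b>" + escapeHtml(providerId) + "</b> has <b>" +
    escapeHtml(stockAmount) + "</b> units in stock.</div>";
}

console.log(formatLookupResponse("Warehouse <East>", 12));
// <div>Provider <b>Warehouse &lt;East&gt;</b> has <b>12</b> units in stock.</div>
```

In the page, the callback body would then become `$('#responselist').append(formatLookupResponse(providerId, stockAmount));`.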

Nearly done! All that’s left is to open up a channel for BizTalk to send messages to the target browser connection. I added a WCF service to this existing ASP.NET project. The WCF contract has a single operation for BizTalk to call.

```csharp
using System.ServiceModel;

[ServiceContract]
public interface IInventoryResponseService
{
    [OperationContract]
    void PublishResults(string clientId, string providerId, string itemId, int stockAmount);
}
```

Notice that BizTalk is sending back the client (connection) ID corresponding to whoever made this inventory request. SignalR makes it possible to send messages to ALL connected clients, a group of clients, or even individual clients. In this case, I only want to transmit a message to the browser client that made this specific request.

```csharp
using Microsoft.AspNet.SignalR;

public class InventoryResponseService : IInventoryResponseService
{
    /// <summary>
    /// Send message to single connected client
    /// </summary>
    /// <param name="clientId">SignalR connection ID of the browser that made the request</param>
    /// <param name="providerId">Inventory system that produced this result</param>
    /// <param name="itemId">Inventory part number that was queried</param>
    /// <param name="stockAmount">Units in stock at this provider</param>
    public void PublishResults(string clientId, string providerId, string itemId, int stockAmount)
    {
        var context = GlobalHost.ConnectionManager.GetHubContext<NotifyHub>();

        //send the inventory stock amount to an individual client
        context.Clients.Client(clientId).addLookupResponse(providerId, stockAmount);
    }
}
```
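To see why carrying the connection ID through BizTalk matters, here is a toy JavaScript model of the three addressing modes mentioned above (all clients, a group, a single client). `HubContextModel` is hypothetical and is not SignalR's API; it only mirrors the routing idea behind `Clients.Client(clientId)`. With two browsers connected, each result lands only in the inbox of the connection that asked for it.

```javascript
// Hypothetical in-memory model of hub addressing; NOT the SignalR API.
class HubContextModel {
  constructor() {
    this.inboxes = new Map(); // connectionId -> messages delivered to that browser
    this.groups = new Map(); // groupName -> Set of connectionIds
  }

  connect(connectionId) {
    this.inboxes.set(connectionId, []);
  }

  addToGroup(connectionId, groupName) {
    if (!this.groups.has(groupName)) this.groups.set(groupName, new Set());
    this.groups.get(groupName).add(connectionId);
  }

  // Deliver to every connected client.
  all(message) {
    for (const inbox of this.inboxes.values()) inbox.push(message);
  }

  // Deliver to each member of a named group.
  group(groupName, message) {
    for (const id of this.groups.get(groupName) ?? []) this.client(id, message);
  }

  // Deliver to exactly one connection; unknown IDs are ignored in this model.
  client(connectionId, message) {
    const inbox = this.inboxes.get(connectionId);
    if (inbox) inbox.push(message);
  }
}

// Mirrors InventoryResponseService.PublishResults: route one provider's result
// to the browser whose connection ID travelled through BizTalk.
function publishResults(hub, clientId, providerId, itemId, stockAmount) {
  hub.client(clientId, { providerId, itemId, stockAmount });
}

const hub = new HubContextModel();
hub.connect("conn-1");
hub.connect("conn-2");
publishResults(hub, "conn-1", "B", "PART-42", 5);
publishResults(hub, "conn-2", "A", "PART-99", 12);
console.log(hub.inboxes.get("conn-1"));
console.log(hub.inboxes.get("conn-2"));
```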

After adding the rest of the necessary WCF service details to the web.config file of the project, I added a new BizTalk send port targeting the service. Once the entire BizTalk project was started up (receive location for the on-ramp WCF service, orchestration that calls inventory systems, send port that sends responses to the web application), I browsed to my ASP.NET site.

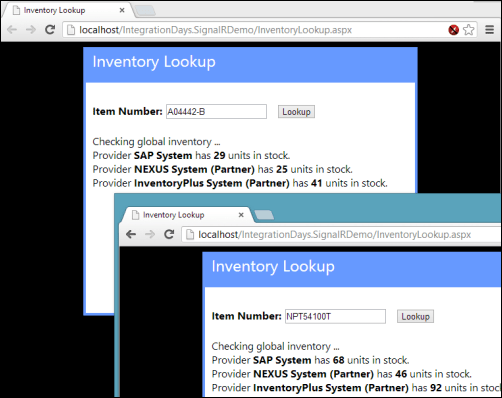

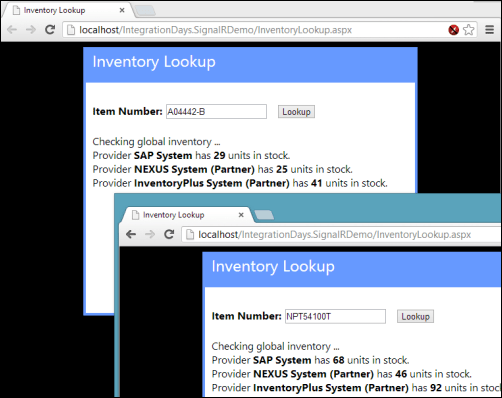

For this demonstration, I opened a couple of browser instances to prove that each one was getting unique results based on whatever inventory part was queried. Sure enough, a few seconds after I entered a random part identifier, data started trickling back. On each browser client, results arrived in a staggered fashion as each back-end system returned data.

I’m biased of course, but I think that this is a pretty cool query pattern. You can have the best of BizTalk (e.g. visually modeled workflow for scatter-gather, broad application adapter choice) while not sacrificing interactivity and performance.

In the spirit of sharing, I’ve made the source code available on GitHub. Feel free to browse it, pull it, and try this on your own. Let me know what you think!