Has your company been in business for more than a couple of years? If so, it’s likely that you’ve installed and use packaged software, also known as commercial off-the-shelf (COTS) software. For some, the days of installing software via CD/DVD (or now, downloads) are drawing to a close as SaaS providers rapidly take the place of installing and running software on your own. But what do you do about all those existing apps that probably run mission-critical workloads and host your most strategic business logic and data? In this post, I’m playing around with ideas for what companies can do with their apps based on packaged software, and offering five options.

What types of packaged software are we talking about here? The possibilities are seemingly endless: client-server products, web applications, middleware, databases, and infrastructure services (like email and directory services). Your business likely runs on things like Microsoft Dynamics CRM, JD Edwards EnterpriseOne, Siebel systems, learning management systems, inventory apps, laboratory information management systems, messaging engines like BizTalk Server, databases like SQL Server, and so on. The challenge is that the cloud is different from traditional server environments. The value of the cloud is agility and elasticity. Few, if any, packaged software products are designed with 12-factor concepts in mind. They can’t automatically take advantage of the rapid deployment, instant scaling, or distributed services that are inherent in the cloud. Worse, these software packages aren’t friendly to the ephemeral nature of cloud resources and respond poorly to servers or services that come and go without warning. 12-factor apps cater to custom-built solutions where you can own startup routines, state management, logging, and service discovery. Apps like THAT can truly take off in cloudy environments!
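To make the "own your startup routines and logging" point concrete, here’s a minimal sketch of two 12-factor habits: reading configuration from the environment and writing logs as a plain event stream to stdout. The names (`Settings`, `load_settings`, `log_event`) and the `DATABASE_URL`, `PORT`, and `LOG_LEVEL` variables are my own illustrative assumptions, not anything a particular product or platform requires.

```python
import json
import os
import sys
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    """Hypothetical settings for a custom-built app."""
    database_url: str
    port: int
    log_level: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read all configuration from the environment, not from files on a server."""
    return Settings(
        database_url=env["DATABASE_URL"],      # required: fail fast at startup if missing
        port=int(env.get("PORT", "8080")),     # assumed default, for illustration only
        log_level=env.get("LOG_LEVEL", "INFO"),
    )


def log_event(message: str, stream=sys.stdout, **fields) -> None:
    """Write one JSON line per event; the platform decides where the stream goes."""
    stream.write(json.dumps({"message": message, **fields}) + "\n")
```

Because nothing here depends on a specific server name or local file, an instance can be started, stopped, or replaced at will. That is exactly the kind of control you rarely get with closed, packaged software.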

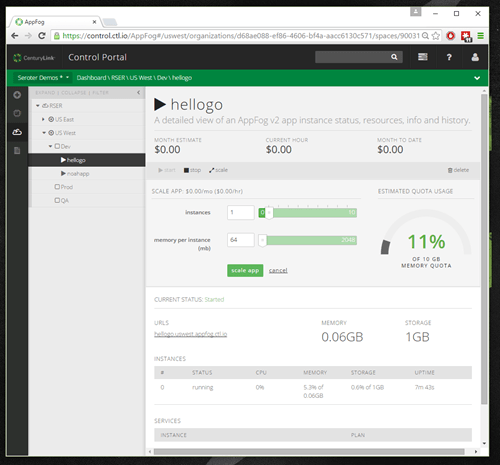

So where should your apps run? That’s a loaded question with no single answer. It depends on your isolation needs and what’s available to you. From a location perspective, they can run today on dedicated servers onsite, hosted servers elsewhere, on-premises virtualization environments, public clouds, or private clouds. You’ve probably got a mix of all that today (if surveys are to be believed). From the perspective of cloudy delivery models, your apps might run on raw servers (IaaS), platform abstractions, or with a SaaS provider. As an aside, I haven’t seen many PaaS-like environments that work cleanly with packaged software, but it’s theoretically possible. Either way, I’ve met very few companies where “we’re great at running apps!” is a core competency that generates revenue for that business. Ideally, applications run in the place that offers the most accessibility, least intervention, and the greatest ability to redirect talented staff to high priority activities.

I see five options for you to consider when figuring out what to do with your packaged software:

Option #1 – Leave it the hell alone.

If it ain’t broke, don’t fix it, amiright? That’s a terrible saying that’s wrong for so many reasons, but there are definitely cases where it’s not worth it to change something.

Why do it?

The packaged software might be hyper-specialized to your industry, and operate perfectly fine wherever it’s running. There’s no way to SaaS-ify it, and you see no real benefit in hosting it offsite. There could be a small team that keeps it up and running, and therefore it’s supportable in an adequate fashion. There’s no longer a licensing cost, and it’s fairly self-sufficient. Or the app is only used by a limited set of people, and therefore it’s difficult to justify the cost of migrating it.

Why not do it?

In my experience, there are tons of apps squirreled away in your organization, many of which have long outlived their usefulness or necessity and aren’t nearly as “self-sufficient” as people think. Rather than take my colleague Jim Newkirk’s extreme advice – he says to turn off all the apps once a year, and only turn an app back on once someone asks for it – I do see a lot of benefit to openly evaluating your portfolio and asking yourself why that app is running, and if the current host is the right one. Leaving an app alone because it’s “not important enough” or “it works fine” might be ignoring hidden costs associated with infrastructure, software licensing, or 3rd party support. The total cost of ownership may be higher than you think. If you do nothing else, at least consider option #2 for more elastic development/test environments!
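If you want to put a rough number on those hidden costs, a back-of-the-napkin calculation is enough to start the conversation. This is only a sketch: the cost categories mirror the ones mentioned above, while the staff-time fields and every name in the code (`AnnualCosts`, `total_cost_of_ownership`, `cost_per_user`) are assumptions of mine, and you would plug in your own figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnualCosts:
    """Yearly costs for one app; all fields are illustrative assumptions."""
    infrastructure: float        # servers, storage, data center space
    licensing: float             # license and maintenance fees (may be zero)
    third_party_support: float   # vendor or contractor support contracts
    staff_hours: float           # hours per year spent keeping the app running
    hourly_rate: float           # loaded cost of one staff hour


def total_cost_of_ownership(costs: AnnualCosts) -> float:
    """Sum the direct costs plus the cost of the staff time spent on the app."""
    return (
        costs.infrastructure
        + costs.licensing
        + costs.third_party_support
        + costs.staff_hours * costs.hourly_rate
    )


def cost_per_user(costs: AnnualCosts, active_users: int) -> float:
    """Spread the yearly cost across the people who actually use the app."""
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return total_cost_of_ownership(costs) / active_users
```

Even an app with no licensing cost can look expensive once you divide the total by a small set of users, which is a useful data point when deciding whether "leave it alone" is really the cheap option.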

Option #2 – Lift-and-shift to IaaS

Unfortunately, picking up an app as-is and moving it to a cloud environment is seen by many as the end-state for a “journey to the cloud.” It ignores the fundamental architectural and business model shifts that cloud introduces. But it’s a start.

Why do it?

A move to cloud IaaS (private/public/whatever) gets you out of underutilized environments and into more dynamic, feature-rich sandboxes. Even if all you’re doing is taking that packaged app and running it in an infrastructure cloud, you’ll likely see SOME benefits. You’ll likely have access to more powerful hardware, enjoy the ability to right-size the infrastructure on demand, get easier access to that software by making it Internet-accessible, and have access to more API-centric tools for managing the app environment.

Why not do it?

Check out my cloud migration checklist for some of the things you really need to consider before doing a lift-and-shift migration. I could make an argument that apps that require users or services to know a server by name shouldn’t go to the cloud. But unless you rebuild your app in a server-less, DNS-friendly, service-discovery-driven, 12-factor fashion, that’s not realistic, and you’d be missing out on some of the usefulness that the cloud can offer. That said, your public/private IaaS or PaaS environment has all sorts of cloudy capabilities like auto-scaling, usage APIs, subscription APIs, security policy management, service catalogs and more that are tough to use with closed-source, packaged software. A lift-and-shift approach can give you the quick win of adopting cloud, but will likely leave you disappointed with all the things you can’t magically take advantage of just because the app is running “in the cloud.” Elasticity is so powerful, and a lift-and-shift approach only gets you part of the way there. It also doesn’t force you to assess the business impact of adopting cloud as a whole.

Option #3 – Migrate to SaaS version of same product

Most large software vendors have seen the writing on the wall and are offering cloud-like versions of their traditionally boxed software. Companies from Adobe to Oracle are offering as-a-Service flavors of their flagship products.

Why do it?

If – and that’s a big “if” – your packaged software vendor offers a SaaS version of their software, then this likely provides the smoothest transition to the cloud. Assuming the cloud version isn’t a complete re-write of the packaged version, your configurations and data should move without issue. For instance, if you use Microsoft Dynamics CRM, then shifting to Microsoft CRM Online isn’t a major deal. Moving your packaged app to the cloud version means that you don’t deal with software upgrades any longer, you switch to a subscription-based consumption model instead of a Capex+Opex model, and you can scale capacity to meet your usage.

Why not do it?

Some packaged software products allow you to make some substantial customizations. If you’ve built custom adapters, reports directly against the database, JavaScript in the UI, or integrations with your messaging engine, then it may be difficult to keep most of that in place in the SaaS version. In some cases, it becomes an entirely new app when switching to cloud, and it may be time to revisit your technology choices. There’s another reason to question this option: you’ll find some cases where the “cloud” option provided by the vendor is nothing more than hosting. On the surface, that’s not a huge problem. But it becomes one when you think you’re getting elasticity, a different licensing model, and full self-service control. What you actually get is a ticket-based or constrained environment that simply runs somewhere else.

Option #4 – Re-platform to an equivalent SaaS product

“Adopting cloud” may mean transitioning from one product to another. In this option, you drop your packaged software product for a similar one that’s entirely cloud-based.

Why do it?

Sometimes a clean break is what’s needed to change a company’s status quo. All of the above options are “more of the same,” to some extent. A full embrace of the cloud means understanding that the services you deliver, who you deliver them to, and how you deliver them, can fundamentally change for the better. Determine whether your existing software vendor’s “cloud” offering is simply a vehicle to protect revenue and prevent customer defections, or whether it’s truly an innovative way to offer the service. If the former, then take a fresh look at the landscape and see if there’s a more cloud-native service that offers the capabilities you need (and didn’t even KNOW you needed!). If you hyper-customized the software and can’t do option #3 above, then it may actually be simpler to start over, define a minimum viable release, and pare down to the capabilities that REALLY matter. Don’t make the mistake of just copying everything (logic, UX, data) from the legacy packaged software to the SaaS product.

Why not do it?

Cloud-based modernization may not be worth it, especially if you’re dealing with packaged software that doesn’t have a clear SaaS equivalent. While areas like CRM, ERP, creative suites, educational tools, and travel are well represented with SaaS products, there are packaged software products that cater to specific industries and aren’t available as-a-Service. While you could take a multi-purpose data-driven platform like Force.com and build it, I’m not sure it adds enough value to make up for the development cost and new maintenance burden. It might be better to lift and shift the working software to a cloud IaaS platform, or accept a non best-of-breed SaaS offering from the same vendor instead.

Option #5 – Rebuild as cloud-native application

The “build versus buy” discussion has been around for ages. With a smart engineering team, you might gain significant value from developing new cloud-native systems to replace legacy packaged software. However, you run a clear risk of introducing complexity where none is required.

Why do it?

If your team looks at the current and available solutions and none of them do what your business needs, then you need to consider building it. That packaged software that you bought in 2006 may have been awesome at generating pre-approvals for home loans, but now you probably need something API-enabled, Internet-accessible, highly available, and mobile friendly. If you don’t see a SaaS product that does what’s necessary, then it’s never been easier to compose solutions out of established libraries and cloud services. You could use something like Spring Cloud to build wicked cloud-native apps where all the messaging, configuration management, and discovery are simple, and you can focus your attention on building relevant microservices, user interfaces and data repositories. You can build apps today based on cloud-based database, mobile, messaging, and analytics systems, thus making your work more about composition than about building tons of raw infrastructure services. In some cases, building an app to replace packaged software will give you the flexibility and agility you’re truly after.

Why not do it?

While a seductive option, this one carries plenty of risk. Regardless of how many frameworks are available – and there are a borderline ridiculous number of app development and container-based frameworks today – it’s not trivial to build a modern application that replaces a comprehensive commercial software solution. Without very clear MVP criteria and iterative development, you can get caught in a development quagmire where “cool technology” bogs down your progress. And even if you successfully build a replacement app, there’s still the issue of maintaining it. If you’ve developed your own “platform” and then defined tons of microservices all connected through distributed messaging … well, you don’t necessarily have the most intuitive system to manage. Keep the full lifecycle in mind before embarking on such an effort.

Summary

The best thing to put in the cloud is new apps designed with the cloud in mind. However, there is real value in migrating or modernizing some of your existing software. Start with the value you’re trying to add with this software. What problem is it solving? Who uses this “service”? What process(es) is it part of? Never undertake any effort to change the user’s experience without answering questions like that. Once you know the goal, consider the options above when assessing where to run those packaged apps.
