Welcome to the 19th interview in my series of chats with thought leaders in the “connected technologies” space. This month we have the pleasure of chatting with Udi Dahan. Udi is a well-known consultant, blogger, Microsoft MVP, author, trainer and lead developer of the NServiceBus product. You’ll find Udi’s articles all over the web in places such as MSDN Magazine, Microsoft Architecture Journal, InfoQ, and Ladies Home Journal. OK, I made up the last one.

Let’s see what Udi has to say.

Q: Tell us a bit about why you started the NServiceBus project, what gaps it fills for architects/developers, and where you see it going in the future.

A: Back in the early 2000s I was working on large-scale distributed .NET projects and had learned the hard way that synchronous request/response web services don’t work well in that context. After seeing how these kinds of systems were built on other platforms, I started looking at queues – specifically MSMQ, which was available on all versions of Windows. After using MSMQ on one project and seeing how well that worked, I started reusing my MSMQ libraries on more projects, cleaning them up, making them more generic. By 2004 all of the difficult transaction, threading, and fault-tolerance capabilities were in place. Around that time, the API started to change to be more framework-like – it called your code, rather than your code calling a library. By 2005, most of my clients were using it. In 2006 I finally got the authorization I needed to make it fully open source.

In short, I built it because I needed it and there wasn’t a good alternative available at the time.

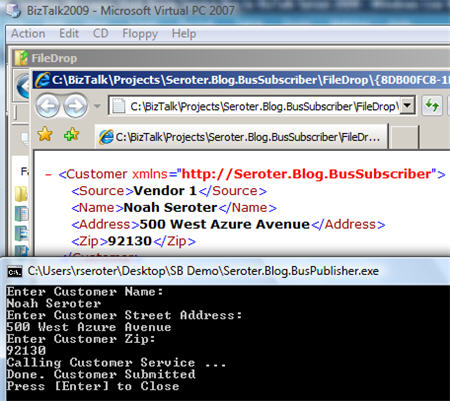

The gap that NServiceBus fills for developers and architects is most prominently its support for publish/subscribe communication – which to this day isn’t available in WCF, SQL Server Service Broker, or BizTalk. Although BizTalk does have distribution list capabilities, it doesn’t allow for transparent addition of new subscribers – a very important feature when looking at version 2, 3, and onward of a system.
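[Editor's illustration] To make "transparent addition of new subscribers" concrete, here is a minimal in-memory sketch in Python. It is not Udi's code and not the NServiceBus API: the `Bus` class, the `OrderAccepted` event, and the billing and shipping handlers are all hypothetical names invented for this example. The point it tries to show is that the publishing code stays the same when a second subscriber arrives in a later version.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class Bus:
    """Toy in-memory bus (hypothetical; not the NServiceBus API)."""

    def __init__(self) -> None:
        # Maps an event type to the handlers subscribed to it.
        self._handlers: DefaultDict[type, List[Callable[[object], None]]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable[[object], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        # The publisher never names its subscribers; it only raises the event.
        for handler in list(self._handlers[type(event)]):
            handler(event)


class OrderAccepted:
    """Hypothetical event used only for this illustration."""

    def __init__(self, order_id: int) -> None:
        self.order_id = order_id


bus = Bus()
billed: List[int] = []
shipped: List[int] = []

# Version 1 of the system: only billing listens.
bus.subscribe(OrderAccepted, lambda e: billed.append(e.order_id))
bus.publish(OrderAccepted(1))

# Version 2: shipping subscribes; the publishing call is unchanged.
bus.subscribe(OrderAccepted, lambda e: shipped.append(e.order_id))
bus.publish(OrderAccepted(2))
```

A real bus would do this across processes with durable queues and stored subscriptions; the sketch only captures the decoupling between publisher and subscribers.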

Another important property of NServiceBus that isn’t available with WCF/WF Durable Services is its “fault-tolerance by default” behavior. When designing a WF workflow, it is critical to perform all Receive activities within a transaction and to keep all other activities that process the message within that scope, especially Send activities. Otherwise one partner may receive a call from our service while others do not, resulting in global inconsistency. If a developer accidentally drags an activity out of the surrounding scope, everything continues to compile and run, even though the system is no longer fault tolerant. With NServiceBus, you can’t make those kinds of mistakes because the infrastructure manages the transactions and enlists all messaging in the same transaction.
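[Editor's illustration] The idea that all outgoing messages share the fate of the incoming message can be sketched roughly as follows. This is a simplified in-memory model, assuming a single endpoint and a hypothetical `TransactionalEndpoint` class. It is not how NServiceBus or MSMQ transactions are actually implemented. Sends made by the handler are buffered and released only if the handler finishes without an error; on failure the incoming message goes back on the queue and nothing is delivered to any partner.

```python
from collections import deque
from typing import Callable, Deque, List

# A handler receives the message and a `send` function supplied by the endpoint.
Handler = Callable[[str, Callable[[str], None]], None]


class TransactionalEndpoint:
    """Toy model of all-or-nothing message handling (hypothetical names)."""

    def __init__(self, handler: Handler) -> None:
        self.incoming: Deque[str] = deque()
        self.delivered: List[str] = []  # messages that actually reached partners
        self._handler = handler

    def process_one(self) -> bool:
        message = self.incoming.popleft()
        pending: List[str] = []  # sends buffered for the current "transaction"
        try:
            self._handler(message, pending.append)
        except Exception:
            # Roll back: return the message to the queue, discard buffered sends.
            self.incoming.appendleft(message)
            return False
        # Commit: release every buffered send together.
        self.delivered.extend(pending)
        return True


attempts = {"count": 0}


def handle(message: str, send: Callable[[str], None]) -> None:
    send(f"partner-a:{message}")
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise RuntimeError("simulated failure between the two sends")
    send(f"partner-b:{message}")


endpoint = TransactionalEndpoint(handle)
endpoint.incoming.append("order-1")

first_ok = endpoint.process_one()          # fails; nothing is delivered
after_first = list(endpoint.delivered)
second_ok = endpoint.process_one()         # retry succeeds; both sends go out
```

Because the handler can only send through the function the endpoint hands it, there is no way to "drag" a send outside the transactional scope, which is the kind of mistake described above.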

NServiceBus also has many smaller features that make it much more pleasurable to work with than the alternatives, along with a custom unit-testing API that makes testing service layers and long-running processes a breeze.

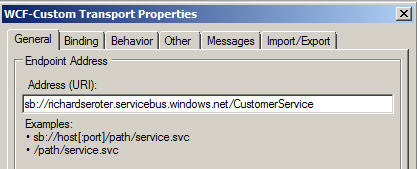

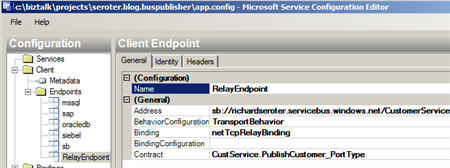

Going forward, NServiceBus will continue to simplify enterprise development and take that model to the cloud by providing Azure implementations of its underlying components. Developers will then have a unified development model both for on-premise and cloud systems.

Q: From your experiences doing training, consulting and speaking, what industries have you found to be the most forward-thinking on technology (e.g. embracing new technologies, using paradigms like EDA), and which industries are the most conservative? What do you think the reasons for this are?

A: I’ve found that it’s not about industries but people. I’ve met forward-thinking people in conservative oil and gas companies and very conservative people in internet startups, and of course, vice-versa. The higher-up these forward-thinking people are in their organization, the more able they are to effect change. At that point, it becomes all personalities and politics and my job becomes more about organizational psychology than technology.

Q: Where do you see the value (if any) in modeling during the application lifecycle? Did you buy into the initial Microsoft Oslo vision of the “model” being central to the envisioning, design, build and operations of an application? What’s your preferential tool for building models (e.g. UML, PowerPoint, paper napkin)?

A: For this, allow me to quote George E. P. Box: “Essentially, all models are wrong, but some are useful.”

My position on models is similar to Eisenhower’s position on plans – while I wouldn’t go so far as to say “models are useless but modeling is indispensable”, I would put much more weight on the modeling activity (and many of its social aspects) than on the resulting model. The success of many projects hinges on building that shared vocabulary – not only within the development group, but across groups like business, dev, test, operations, and others; what is known in DDD terms as the “ubiquitous language”.

I’m not a fan of “executable pictures” and am more in the “UML as a sketch” camp so I can’t say that I found the initial Microsoft Oslo vision very compelling.

Personally, I like Sparx Systems’ tool – Enterprise Architect. I find that it gives me the right balance of freedom and formality in working with technical people.

That being said, when I need to communicate important aspects of the various models to people not involved in the modeling effort, I switch to PowerPoint where I find its animation capabilities very useful.

Q [stupid question]: April Fool’s Day is upon us. This gives us techies a chance to mess with our colleagues in relatively non-destructive ways. I’m a fan of pranks like:

- Turning on Sticky Keys

- Setting someone’s default font color to “white”

- Taking a screen grab of someone’s desktop, hiding all their desktop icons, and then setting their desktop wallpaper to the screen grab image

- Configuring the “Blue Screen of Death” screensaver

- Switching the handle on the office refrigerator door

Tell us, Udi, what sort of geek pranks you’d find funny on April Fool’s Day.

A: This reminds me why I always lock my machine when I’m not at my desk 🙂

I hadn’t heard of switching the handle of the refrigerator before so, for sheer applicability to non-geeks as well, I’d vote for that one.

The first lesson I learned as a consultant was to lock my laptop when I left it alone. Not because of data theft, but because my co-workers were monkeys. All it took to teach me this point was coming back to my desk one day and finding that my browser home page was reset and displaying MenWhoLookLikeKennyRogers.com. Live and learn.

Thanks, Udi, for your insight.