As a Microsoft MVP for Integration – or however I’m categorized now – I keep a keen interest in where Microsoft is going with (app) integration technologies. Admittedly, I’ve had trouble keeping up with all the various changes, and thought it’d be useful to take a tour through the status of the Microsoft integration services. For each one, I’ll review its use case, recent updates, and how to consume it.

What qualifies as an integration technology nowadays? For me, it’s anything that lets me connect services in a distributed system. That “system” may be composed of components running entirely on-premises, between business partners, or across cloud environments. Microsoft doesn’t totally agree with that definition, if their website information architecture is any guide. They spread around the services in categories like “Hybrid Integration”, “Web and Mobile”, “Internet of Things”, and even “Analytics.”

But, whatever. I’m considering the following Microsoft technologies as part of their cloud-enabled integration stack:

- Service Bus

- Event Hubs

- Data Factory

- Stream Analytics

- BizTalk Services

- Logic Apps

- BizTalk Server on Cloud Virtual Machines

I considered, but skipped, Notification Hubs, API Apps, and API Management. They all empower application integration scenarios in some fashion, but it’s more ancillary. If you disagree, tell me in the comments!

Service Bus

What is it?

The Service Bus is a general purpose messaging engine released by Microsoft back in 2008. It’s made up of two key sets of services: the Service Bus Relay, and Service Bus Brokered Messaging.

https://twitter.com/clemensv/status/648902203927855105

The Service Bus Relay is a unique service that makes it possible to securely expose on-premises services to the Internet through a cloud-based relay. The service supports a variety of messaging patterns including request/reply, one-way asynchronous, and peer-to-peer.

But what if the service client and server aren’t online at the same time? Service Bus Brokered Messaging offers a pair of asynchronous store-and-forward services. Queues provide first-in-first-out delivery to a single consumer. Data is stored in the queue until retrieved by the consumer. Topics are slightly different. They make it possible for multiple recipients to get a message from a producer, and they offer a publish/subscribe engine with per-recipient filters.
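To make the Queue versus Topic difference concrete, here’s a minimal in-memory sketch in Python. It is not the Service Bus SDK. The `Queue` and `Topic` classes, the subscription names, and the lambda filters are all made up for illustration, and they only model the delivery semantics described above.

```python
from collections import deque
from typing import Callable, Deque, Dict, Optional, Tuple

Message = Dict[str, str]


class Queue:
    """FIFO store-and-forward: each message is handed to exactly one receiver."""

    def __init__(self) -> None:
        self._messages: Deque[Message] = deque()

    def send(self, message: Message) -> None:
        self._messages.append(message)

    def receive(self) -> Optional[Message]:
        # Messages wait here until a consumer comes online and asks for one.
        return self._messages.popleft() if self._messages else None


class Topic:
    """Publish/subscribe: every subscription whose filter matches gets a copy."""

    def __init__(self) -> None:
        self._subscriptions: Dict[str, Tuple[Callable[[Message], bool], Queue]] = {}

    def subscribe(self, name: str, rule: Callable[[Message], bool]) -> Queue:
        queue = Queue()
        self._subscriptions[name] = (rule, queue)
        return queue

    def publish(self, message: Message) -> None:
        for rule, queue in self._subscriptions.values():
            if rule(message):
                queue.send(dict(message))  # each recipient gets its own copy


# Hypothetical subscriptions: one sees everything, one only EU orders.
orders = Topic()
all_orders = orders.subscribe("audit", lambda m: True)
eu_orders = orders.subscribe("eu-fulfilment", lambda m: m["region"] == "EU")

orders.publish({"id": "1", "region": "US"})
orders.publish({"id": "2", "region": "EU"})
```

After the two publishes, the "audit" subscription holds both messages in order, while "eu-fulfilment" holds only the second one.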

How does the Service Bus enable application integration? The Relay lets companies expose legacy apps through public-facing services, and makes cross-organization integration much simpler than setting up a web of VPN connections and FTP data exchanges. Brokered Messaging makes it possible to connect distributed apps in a loosely coupled fashion, regardless of where those apps reside.

What’s new?

This is a fairly mature service with a slow rate of change. The only thing added to the Service Bus in 2015 is Premium messaging. This feature gives customers the choice to run the Brokered Messaging components in a single-tenant environment. This gives users more predictable performance and pricing.

From the sounds of it, Microsoft is also looking at finally freeing Service Bus Relay from the shackles of WCF. Here’s hoping.

https://twitter.com/clemensv/status/639714878215835648

How to use it?

Developers work with the Service Bus primarily by writing code. To host a Relay service, you must write a WCF service that uses one of the pre-defined Service Bus bindings. To make it easy, developers can add the Service Bus package to their projects via NuGet.

The only aspect that requires .NET is hosting Relay services. Developers can consume Relay-bound services, Queues, and Topic subscriptions from a host of other platforms, or even just raw REST APIs. The Microsoft SDKs for Java, PHP, Ruby, Python and Node.js all include the necessary libraries for talking to the Service Bus. AMQP support appears to be in a subset of the SDKs.
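If you go the raw REST route, the main hurdle is authentication. Here’s a sketch in Python, using only the standard library, of building a Shared Access Signature token and attaching it to a request that would send a message to a queue. The namespace (`example`), queue name (`orders`), key name, and key value are placeholders I invented, and the request is constructed but never sent. Check the current Service Bus REST documentation before relying on the details.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
import urllib.request


def make_sas_token(resource_uri: str, key_name: str, key: str, expiry: int) -> str:
    """Build a Shared Access Signature token for a Service Bus resource."""
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # The signature is an HMAC-SHA256 over "<encoded uri>\n<expiry>".
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote(base64.b64encode(digest), safe="")
    return (
        f"SharedAccessSignature sr={encoded_uri}"
        f"&sig={signature}&se={expiry}&skn={key_name}"
    )


# Placeholder values; substitute your own namespace, queue, and key.
resource = "https://example.servicebus.windows.net/orders"
token = make_sas_token(
    resource,
    key_name="RootManageSharedAccessKey",
    key="not-a-real-key",
    expiry=int(time.time()) + 3600,  # valid for one hour
)

# Build (but do not send) the POST that would enqueue a message.
request = urllib.request.Request(
    url=f"{resource}/messages",
    data=b"hello from plain HTTP",
    method="POST",
    headers={"Authorization": token, "Content-Type": "text/plain"},
)
```

Passing `request` to `urllib.request.urlopen` would perform the actual send, which is why any language with an HTTP client and an HMAC library can talk to Queues and Topics.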

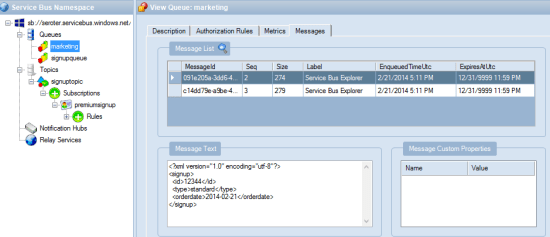

It’s also possible to set up Service Bus Queues and Topics via the Azure Portal. From here, I can create new Queues, add Topics, and configure basic Subscriptions. I can also see any active Relay service endpoints.

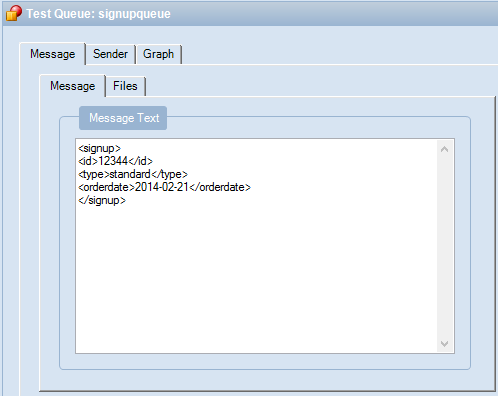

Finally, you can interact with the Service Bus through the super powerful (and open source) Service Bus Explorer created by Microsoftie Paolo Salvatori. From here, you can configure and test virtually every aspect of the Service Bus.

Event Hubs

What is it?

Azure Event Hubs is a scalable service for high-volume event intake. Stream in millions of events per second from applications or devices. It’s not an end-to-end messaging engine, but rather, focuses heavily on being a low latency “front door” that can reliably handle consistent or bursty event streams.

Event Hubs works by putting an ordered sequence of events into something called a partition. Like Apache Kafka, an Event Hub partition acts like an append-only commit log. Senders – who communicate with Event Hubs via AMQP and HTTP – can specify a partition key when submitting events, or leave it out so that a round-robin approach decides which partition the event goes to. Partitions are accessed by readers through Consumer Groups. A consumer group is like a view of the event stream. There should only be a single partition reader at one time, and Event Hub users definitely have some responsibility for managing connections, tracking checkpoints, and the like.
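Here’s a rough Python model of those mechanics: partitions as append-only lists, partition keys versus round-robin placement, and consumer groups that each keep their own checkpoint. This is a toy, not the Event Hubs client. The `EventHubSketch` class is invented, and the SHA-256 hash is just a stand-in for whatever hashing the real service uses.

```python
import hashlib
from itertools import count
from typing import Dict, List, Optional


class EventHubSketch:
    def __init__(self, partition_count: int) -> None:
        # Each partition is an ordered, append-only log of events.
        self.partitions: List[List[str]] = [[] for _ in range(partition_count)]
        self._round_robin = count()
        # checkpoints[consumer_group][partition] -> next offset to read
        self.checkpoints: Dict[str, Dict[int, int]] = {}

    def send(self, event: str, partition_key: Optional[str] = None) -> int:
        if partition_key is None:
            # No key supplied: spread events across partitions in turn.
            index = next(self._round_robin) % len(self.partitions)
        else:
            # Same key always maps to the same partition, preserving order.
            digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
            index = int.from_bytes(digest[:4], "big") % len(self.partitions)
        self.partitions[index].append(event)
        return index

    def read(self, consumer_group: str, partition: int) -> List[str]:
        # Every consumer group tracks its own position in the log.
        offsets = self.checkpoints.setdefault(consumer_group, {})
        start = offsets.get(partition, 0)
        events = self.partitions[partition][start:]
        offsets[partition] = start + len(events)  # record the checkpoint
        return events


hub = EventHubSketch(partition_count=4)
first = hub.send("temp=21", partition_key="device-7")
second = hub.send("temp=22", partition_key="device-7")
```

Both events land in the same partition because they share a key. A "dashboard" consumer group and an "archiver" consumer group can each call `hub.read(...)` on that partition and see the full sequence independently, since reading never removes anything from the log.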

How do Event Hubs enable application integration? A core use case of Event Hubs is capturing high volume “data exhaust” thrown off by apps and devices. You may also use this to aggregate data from multiple sources, and have a consumer process pull data for further processing and sending to downstream systems.

What’s new?

In July of 2015, Microsoft added support for AMQP over web sockets. They also added the service to an additional region in the United States.

How to use it?

It looks like only the .NET SDK has native libraries for Event Hubs, but developers can still use either the REST API or AMQP libraries in their language of choice (e.g. Java).

The Azure Portal lets you create Event Hubs, and do some basic configuration.

Paolo also added support for Event Hubs in the Service Bus Explorer.

Data Factory

What is it?

The Azure Data Factory is a cloud-based data integration service that does traditional extract-transform-load but with some modern twists. Data Factory can pull data from either on-premises or cloud endpoints. There’s an agent-based “data management gateway” for extracting data from on-premises file systems, SQL Servers, Oracle databases, Teradata databases, and more. Data transformation happens in a Hadoop cluster or a batch processing environment. All the various processing activities are collected into a pipeline that gets executed. Activities can have policies attached. A policy controls concurrency, retries, delay duration, and more.
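The pipeline-of-activities idea, with a policy attached to each activity, can be sketched in a few lines of Python. To be clear, this is not how you author a Data Factory. The `Policy`, `Activity`, and `run_pipeline` names and their fields are hypothetical, and the sketch only covers the retry and delay parts of a policy.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Rows = List[Dict[str, object]]


@dataclass
class Policy:
    retries: int = 0            # how many times to retry a failed activity
    delay_seconds: float = 0.0  # pause between attempts


@dataclass
class Activity:
    name: str
    run: Callable[[Rows], Rows]
    policy: Policy = field(default_factory=Policy)


def run_pipeline(activities: List[Activity], rows: Rows) -> Rows:
    """Run activities in order, feeding each one the previous output."""
    for activity in activities:
        failures = 0
        while True:
            try:
                rows = activity.run(rows)
                break
            except Exception:
                failures += 1
                if failures > activity.policy.retries:
                    raise  # policy exhausted, fail the pipeline
                time.sleep(activity.policy.delay_seconds)
    return rows


calls = {"extract": 0}


def flaky_extract(_rows: Rows) -> Rows:
    # Simulates an on-premises source that is unreachable on the first try.
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("gateway not reachable yet")
    return [{"sku": "a", "qty": "2"}, {"sku": "b", "qty": "5"}]


def quantities_to_int(rows: Rows) -> Rows:
    return [{**row, "qty": int(row["qty"])} for row in rows]


pipeline = [
    Activity("extract", flaky_extract, Policy(retries=2)),
    Activity("transform", quantities_to_int),
]
result = run_pipeline(pipeline, [])
```

The extract step fails once, the policy allows a retry, and the transform step then receives the extracted rows.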

How does the Data Factory enable application integration? This could play a useful part in synchronizing data used by distributed systems. It’s designed for large data sets and is much more efficient than using messaging-based services to ship chunks of data between repositories.

What’s new?

This service just hit “general availability” in August, so the whole service is kinda new.

How to use it?

You have a few choices for interacting with the Data Factory service. As mentioned earlier, there are a whole bunch of supported database and file endpoints, but what about creating and managing the factories themselves? Your choices are Visual Studio, PowerShell, REST API, or the graphical designer in the Azure Preview Portal. Developers can download a package to add the appropriate project types to Visual Studio, and download the latest Azure PowerShell executable to get Data Factory extensions.

To do any visual design, you need to jump into the (still) Preview Portal. Here you can create, manage, and monitor individual factories.

Stream Analytics

What is it?

Stream Analytics is a cloud-hosted event processing engine. Point it at an event source (real-time or historical) and run data over queries written in a SQL-like language. An event source could be a stream (like Event Hubs), or reference data (like Azure Blob storage). Queries can join streams, convert data types, match patterns, count unique values, look for changed values, find specific events in windows, detect absence of events, and more.
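In Stream Analytics itself you would express windowed logic in the SQL-like query language. To show what a windowed count actually computes, here’s a small Python sketch of a fixed, non-overlapping ("tumbling") window. The event shape and the `tumbling_window_counts` function are my own invention for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, device id)


def tumbling_window_counts(
    events: Iterable[Event], window_seconds: int
) -> Dict[Tuple[int, str], int]:
    """Count events per device in fixed, non-overlapping time windows."""
    counts: Counter = Counter()
    for timestamp, device in events:
        # Every timestamp belongs to exactly one window.
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[(window_start, device)] += 1
    return dict(counts)


events = [(0.5, "a"), (3.0, "a"), (9.9, "b"), (10.0, "a"), (14.2, "a")]
per_window = tumbling_window_counts(events, window_seconds=10)
```

Here the first ten-second window contains two events for device "a" and one for device "b", and the second window contains two more events for device "a". A "detect absence of events" query is the same idea inverted: look for windows where a device’s count is missing.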

Once the data has been processed, it goes to one of many possible destinations. These include Azure SQL Databases, Blob storage, Event Hubs, Service Bus Queues, Service Bus Topics, Power BI, or Azure Table Storage. The choice of consumer obviously depends on what you want to do with the data. If the stream results should go back through a streaming process, then Event Hubs is a good destination. If you want to stash the resulting data in a warehouse for later BI, go that way.

How does Stream Analytics enable application integration? The output of a stream can go into the Service Bus for routing to other systems or triggering actions in applications hosted anywhere. One system could pump events through Stream Analytics to detect relevant business conditions and then send the output events to those systems via database or messaging.

What’s new?

This service became generally available in April 2015. In July, Microsoft added support for Service Bus Queues and Topics as output types. A few weeks ago, there was another update that added IoT-friendly capabilities like DocumentDB as an output, support for the IoT Hub service, and more.

How to use it?

Lots of different app services can connect to Stream Analytics (including Event Hubs, Power BI, Azure SQL Databases), but it looks like you’ve got limited choices today in setting up the stream processing jobs themselves. There’s the REST API, .NET SDK, or classic Portal UI.

The Portal UI lets you create jobs, configure inputs, write and test queries, configure outputs, scale streaming units up and down, and change job settings.

BizTalk Services

What is it?

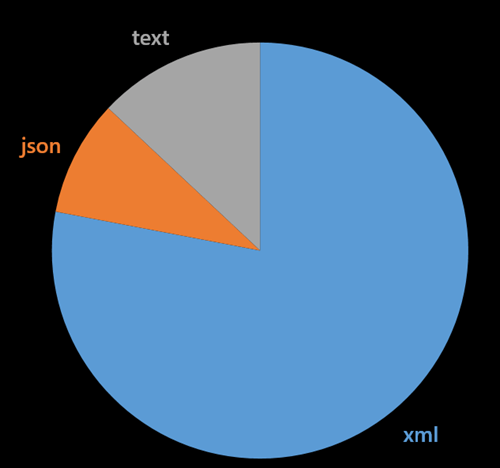

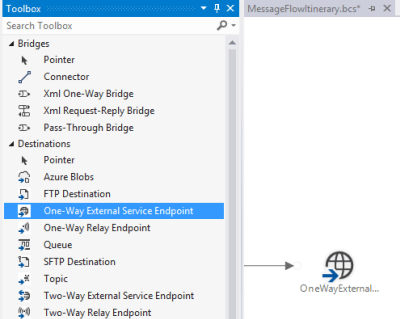

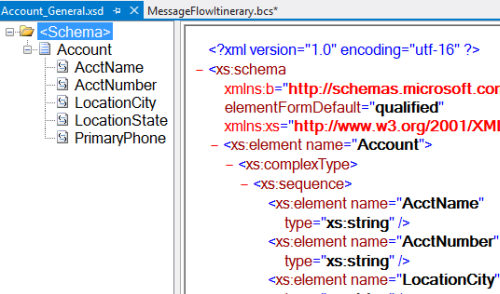

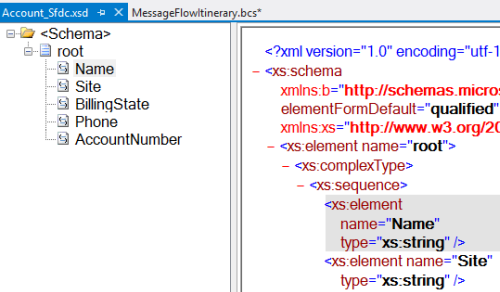

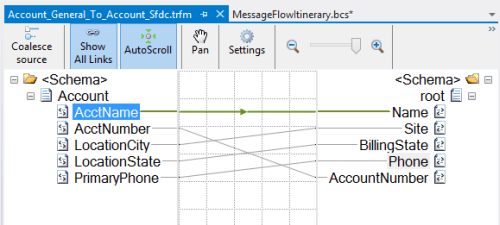

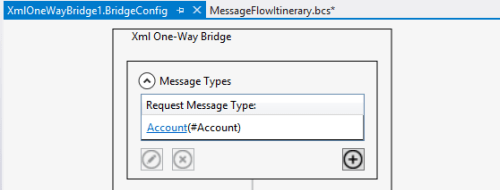

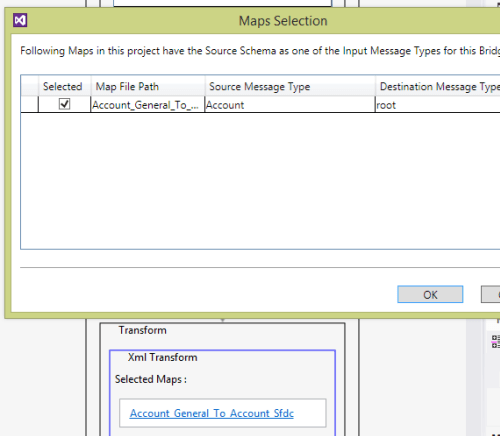

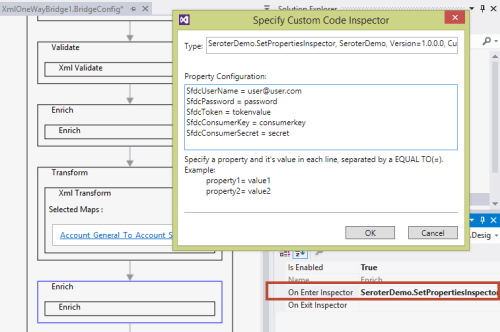

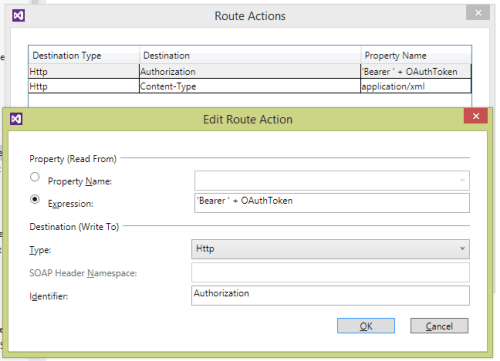

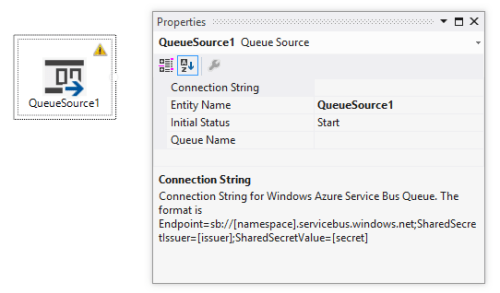

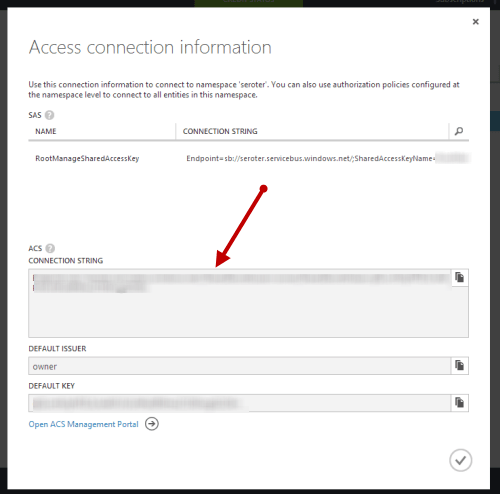

BizTalk Services targets EAI (enterprise application integration) and EDI (electronic data interchange) scenarios by offering tools and connectors that are designed to bridge protocol and data mismatches between systems. Developers send in XML or flat file data, and it can be validated, transformed, and routed via a “bridge” component. Message validation is done against an XSD schema, and XSLT transformations are created visually in a sophisticated mapping tool. Data comes into BizTalk Services via HTTP, S/FTP, Service Bus Queues, or Service Bus Topic subscription. Valid destinations for the output of a bridge include FTP, Azure Blob storage, one-way Service Bus Relay endpoints, Service Bus Queues, and more. Microsoft also added a BizTalk Adapter Service that lets you expose on-premises endpoints – options include SQL Server, Oracle databases, Oracle E-Business Suite, SAP, and Siebel – to cloud-hosted bridges.
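The validate, transform, route flow of a bridge can be sketched in plain Python. This is only a conceptual stand-in: a list of required elements replaces real XSD validation, a hand-written function replaces the XSLT map, and the element names and queue names are all hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

REQUIRED_FIELDS = ["Id", "Country", "Total"]  # stand-in for an XSD schema


def validate(order: ET.Element) -> List[str]:
    """Return the names of required elements that are missing."""
    return [name for name in REQUIRED_FIELDS if order.find(name) is None]


def transform(order: ET.Element) -> ET.Element:
    """Map the inbound Order shape to the outbound Invoice shape."""
    invoice = ET.Element("Invoice")
    ET.SubElement(invoice, "OrderRef").text = order.findtext("Id")
    ET.SubElement(invoice, "Amount").text = order.findtext("Total")
    return invoice


def route(order: ET.Element) -> str:
    """Pick a destination based on message content."""
    return "eu-queue" if order.findtext("Country") in {"DE", "FR"} else "default-queue"


def bridge(xml_text: str) -> Tuple[str, str]:
    order = ET.fromstring(xml_text)
    missing = validate(order)
    if missing:
        raise ValueError(f"message rejected, missing: {missing}")
    return route(order), ET.tostring(transform(order), encoding="unicode")


destination, body = bridge(
    "<Order><Id>42</Id><Country>DE</Country><Total>19.99</Total></Order>"
)
```

A valid German order is reshaped into an invoice and sent to the hypothetical "eu-queue", while a message missing required elements is rejected before any transformation happens.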

BizTalk Services also has some business-to-business capabilities. This includes support for a wide range of EDI and EDIFACT schemas, and a basic trading partner management portal.

How does BizTalk Services enable application integration? BizTalk Services makes it possible for applications with different endpoints and data structures to play nice. Obviously this has potential to be a useful part of an application integration portfolio. Companies need to connect their assets, which are more distributed now than ever.

What’s new?

BizTalk Services itself seems a bit stagnant (judging by the sparse release notes), but some of its services are now exposed in Logic and API apps (see below). Not sure where this particular service is heading, but its individual pieces will remain useful to teams that want access to transformation and connectivity services in their apps.

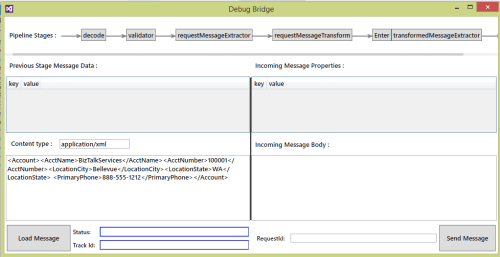

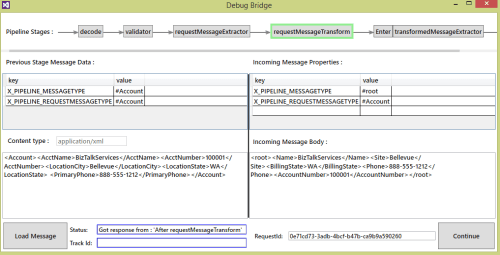

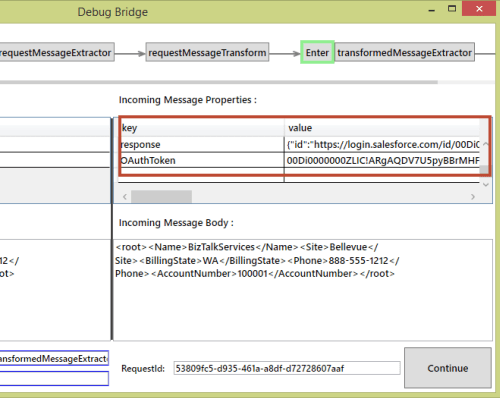

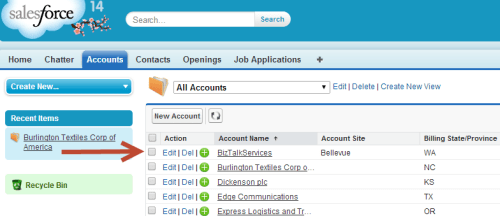

How to use it?

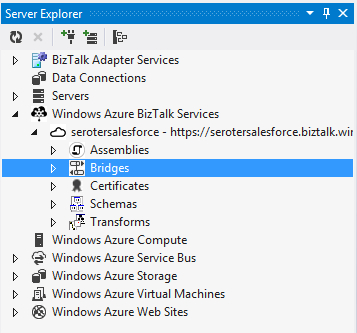

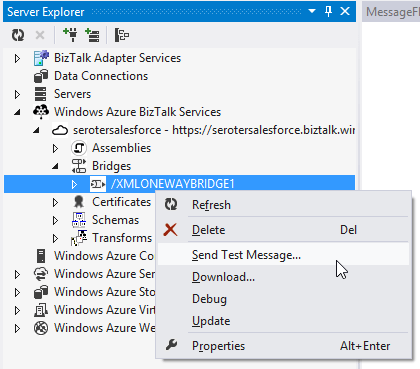

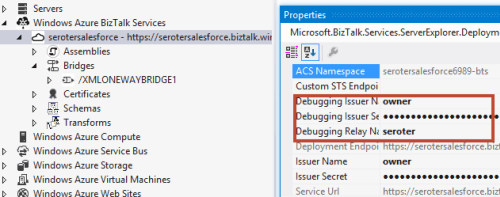

Working with BizTalk Services means using the REST API, PowerShell cmdlets, or Visual Studio. There’s also a standalone management portal that hangs off the main Azure Portal.

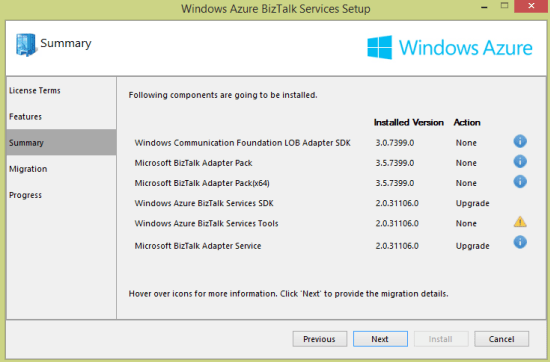

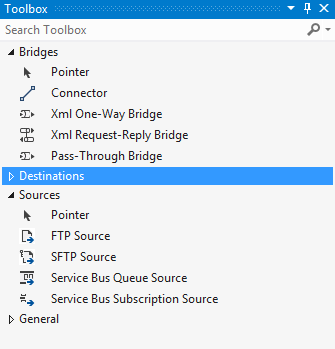

In Visual Studio, developers need to add the BizTalk Services SDK in order to get the necessary components. After installing, it’s easy enough to model out a bridge with all the necessary inputs, outputs, schemas, and maps.

In the standalone portal, you can search for and delete bridges, upload schemas and maps, add certificates, and track messages. Back in the standard Azure Portal, you configure things like backup policies and scaling settings.

Logic Apps

What is it?

Logic Apps let developers build and host workflows in the cloud. These visually-designed processes run a series of steps (called “actions”) and use “connectors” to access remote data and business logic. There are tons of connectors available so far, and it’s possible to create your own. Core connectors include Azure Service Bus, Salesforce Chatter, Box, HTTP, SharePoint, Slack, Twilio, and more. Enterprise connectors include an AS2 connector, BizTalk Transform Service, BizTalk Rules Service, DB2 connector, IBM WebSphere MQ Server, POP3, SAP, and much more.

Triggers make a Logic App run, and developers can trigger manually, or off the action of a connector. You could start a Logic App with an HTTP call, a recurring schedule, or upon detection of a relevant Tweet in Twitter. Within the Logic App, you can specify repeating operations and some basic conditional logic. Developers can see and edit the underlying JSON that describes a Logic App. Features like shared parameters are ONLY available to those writing code (versus visually designing the workflow). The various BizTalk-branded API actions offer the ability to validate, transform, and encode data, or execute independently-maintained business rules.
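Conceptually, a Logic App is a trigger payload flowing through a series of actions, some of which only run when a condition holds. Here’s a tiny Python sketch of that shape. It does not reflect the real Logic Apps JSON definition or any connector API. The `Action` class, `run_workflow` function, and the tweet payload are all invented.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional

Context = Dict[str, Any]


@dataclass
class Action:
    name: str
    run: Callable[[Context], Any]
    condition: Optional[Callable[[Context], bool]] = None  # None means always run


def run_workflow(trigger_payload: Any, actions: List[Action]) -> Context:
    """Run each action in order; later actions can read earlier outputs."""
    context: Context = {"trigger": trigger_payload}
    for action in actions:
        if action.condition is None or action.condition(context):
            context[action.name] = action.run(context)
    return context


# Hypothetical workflow: classify an incoming tweet, page someone if urgent.
workflow = [
    Action(
        "classify",
        lambda ctx: "urgent" if "outage" in ctx["trigger"]["text"].lower() else "normal",
    ),
    Action(
        "notify",
        lambda ctx: f"paging on-call about @{ctx['trigger']['user']}",
        condition=lambda ctx: ctx["classify"] == "urgent",
    ),
]

urgent_run = run_workflow({"user": "contoso", "text": "Outage in checkout!"}, workflow)
quiet_run = run_workflow({"user": "contoso", "text": "Nice release"}, workflow)
```

In the first run both actions execute. In the second, the "notify" action is skipped because its condition is false, which is the kind of basic conditional logic the designer exposes.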

How do Logic Apps enable application integration? This service helps developers put together cloud-oriented application integration workflows that don’t need to run in an on-premises message bus. The various social and SaaS connectors help teams connect to more modern endpoints, while the on-premises connectors and classic BizTalk functionality address more enterprise-like use cases.

What’s new?

This is clearly an area of attention for Microsoft. Lots of updates since the service launched in March. Microsoft has added Visual Studio support for designing Logic Apps, future execution scheduling, connector search in the Preview Portal, do … until looping, improvements to triggers, and more.

How to use it?

This is still a preview service, so it’s not surprising that you only have a few ways to interact with it. There’s a REST API for management, and the user experience in the Azure Preview Portal.

Within the Preview Portal, developers can create and manage their Logic Apps. You can either start from scratch, or use one of the pre-built templates that reflect common patterns like content-based routing, scatter-gather, HTTP request/response, and more.

If you want to build your own, you choose from any existing or custom-built API apps.

You then save your Logic App and can have it run either manually or based on a trigger.

BizTalk Server (on Cloud Virtual Machines)

What is it?

Azure users can provision and manage their own BizTalk Server integration server in Azure Virtual Machines using prebuilt images. BizTalk Server is the mature, feature-rich integration bus used to connect enterprise apps. With it, customers get a stateful workflow engine, reliable pub/sub messaging engine, adapter framework, rules engine, trading partner management platform, and full design experience in Visual Studio. While not particularly cloud integrated (with the exception of a couple of Service Bus adapters), it can reasonably be used to integrate apps across environments.

What’s new?

BizTalk Server 2013 R2 was released in the middle of last year and included some incremental improvements like native JSON support, and updates to some built-in adapters. The next major release of the platform is expected in 2016, but without a publicly announced feature set.

How to use it?

Deploy the image from the template library if you want to run it in Azure, or do the same in any other infrastructure cloud.

Summary

Whew. Those are a lot of options. Definitely some overlap, but Microsoft also seems to be focused on building these in a microservices fashion. Specifically, single purpose services that do one thing really well and don’t encroach into unnatural territory. For example, Stream Analytics does one thing, and relies on other services to handle other parts of the processing pipeline. I like this trend, as it gets away from a heavyweight monolithic integration service that has a bunch of things I don’t need, but have to deploy. It’s much cleaner (although potentially MORE complex) to assemble services as needed!