Don’t get me wrong, I like a good tutorial. Might be in a blog, book, video, or training platform. I’ve probably created a hundred (including in dozens of Pluralsight courses) and consumed a thousand. But lately? I don’t like being constrained by the author’s use case, and I wonder if all I’ve learned how to do is follow someone else’s specific instructions.

This popped for me twice in the past few days as I finally took some “should try” technologies off my backlog. Instead of hunting for a hello-world tutorial to show me a few attributes of Angular Signals, I simply built a demo app using Google Antigravity. No local infrastructure setup, wrangling with libraries, or figuring out what machinery I needed to actually see the technology in action.

I did it again a couple of days later! The Go version of the Agent Development Kit came out a bit ago. I’ve been meaning to try it. The walkthrough tutorials are fine, but I wanted something more. So, I just built a working solution right away.

I still enjoy reading content about how something works. That doesn’t go away. And truly deep learning still requires more than vibe coding an app. But I’m not defaulting to tutorials any more. Instead, I can just feed them into the LLM and build something personalized for me. Here’s an example.

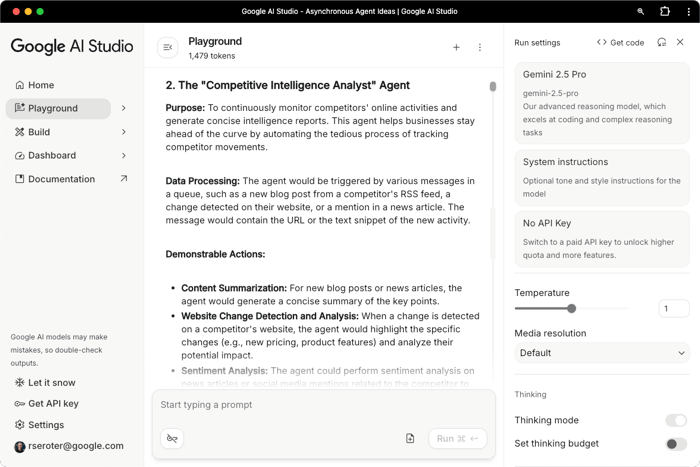

Take the cases above. I jumped into Google AI Studio to get inspiration on interesting async agent use cases. I liked this one. Create a feed where an agent picks up a news headline and then does some research into related stories before offering some analysis. It’ll read from a queue, and then drop analysis to a Cloud Storage bucket.

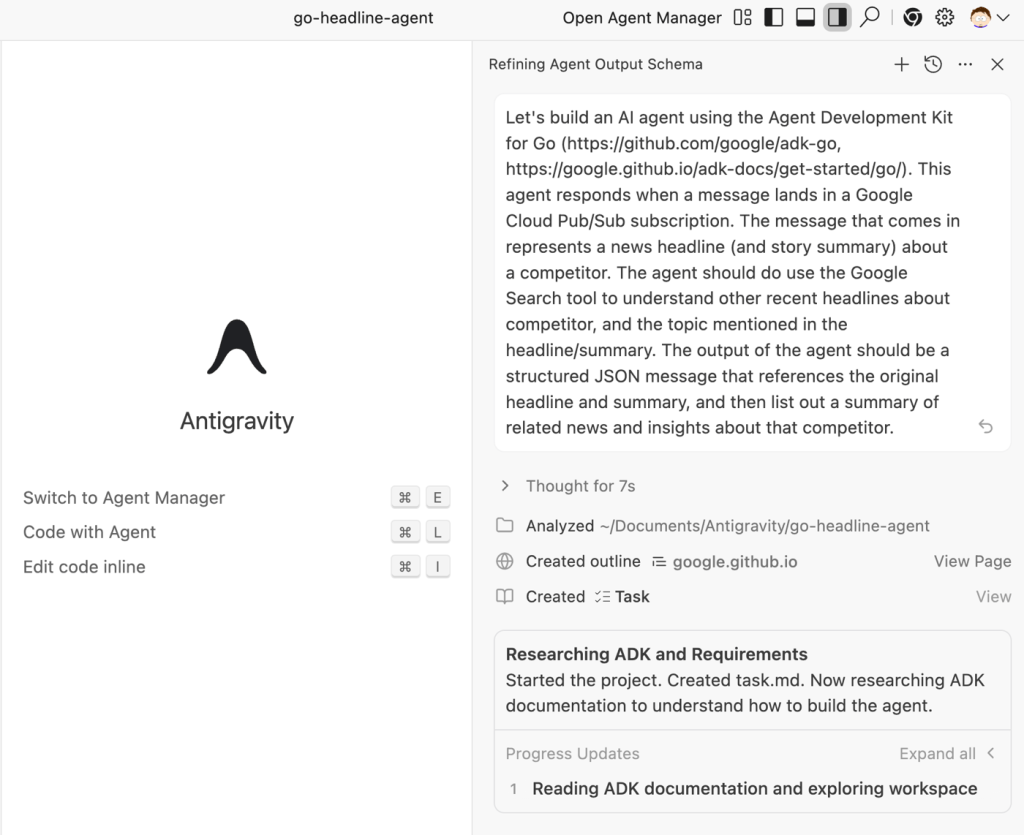

With my use case in hand, I jumped into Antigravity to sketch out a design. Notice that I just fed the tutorial link into Antigravity to ensure it’d get seeded with up-to-date info for this new library.

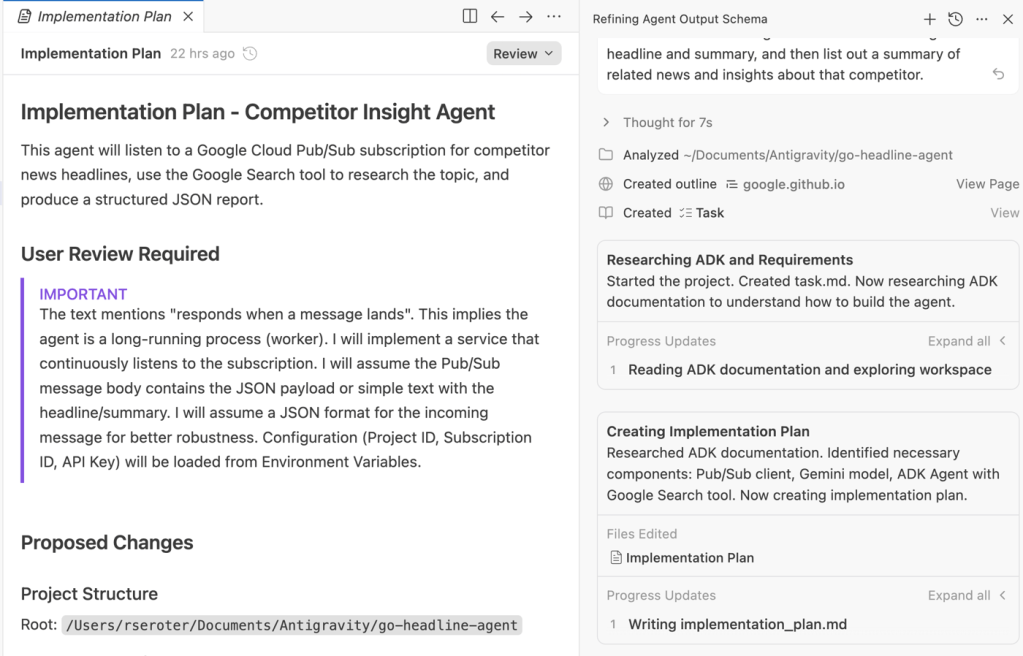

Antigravity started whirring away on creating implementation plans and a task list. Because I can comment on its plans and iterate on the ideas before building begins, I’m not stressed about making the first prompt perfect. Notice here that it flags a big assumption, so I provided a comment confirming that I want a JSON payload for this background worker.

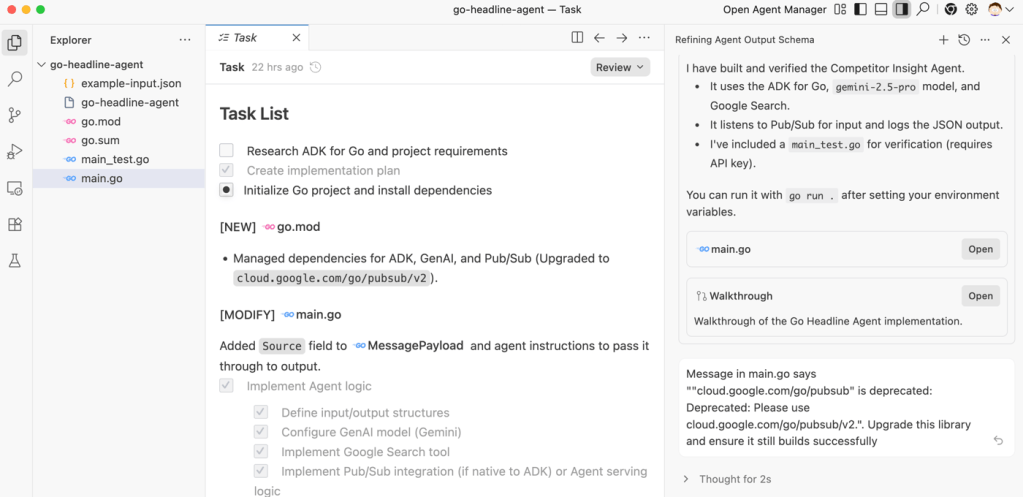

After Antigravity started building, I noticed the generated code used a package the IDE flagged as deprecated. I popped into the chat (or I could have commented in the task list) and directed the AI tool to use the latest version and ensure the code still built successfully.

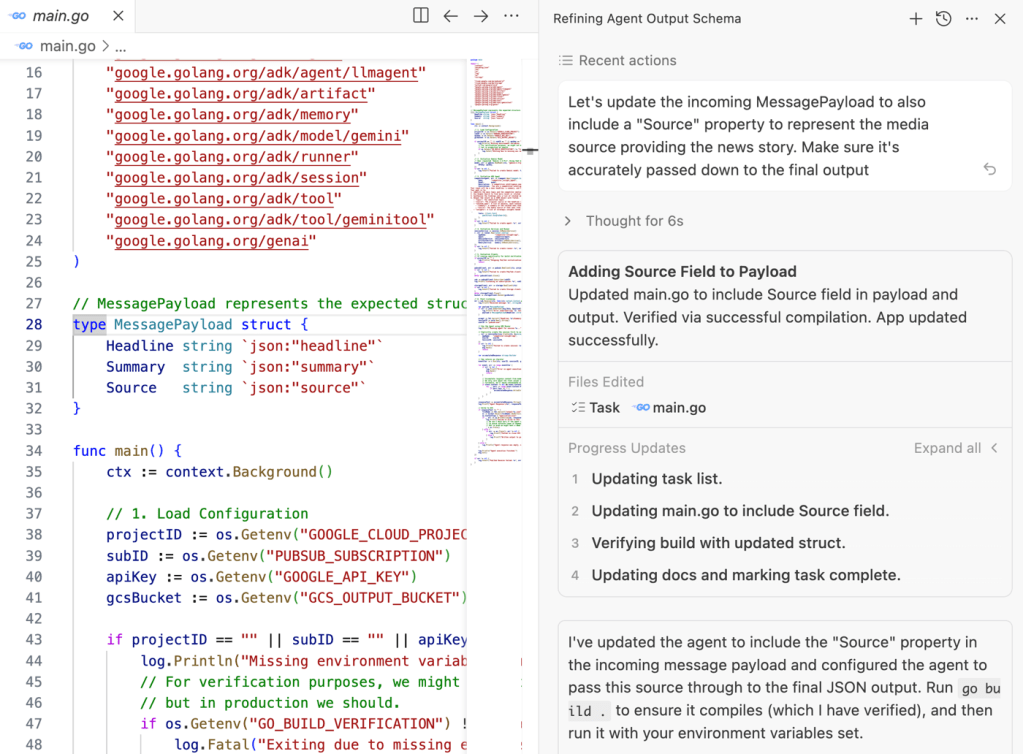

Throughout, I’m focused on the outcomes I’m after, not the syntax of agent building. It’s refreshing. When reviewing the code, I realized I wanted more data in the incoming payload. A directive later, and my code reflected it.

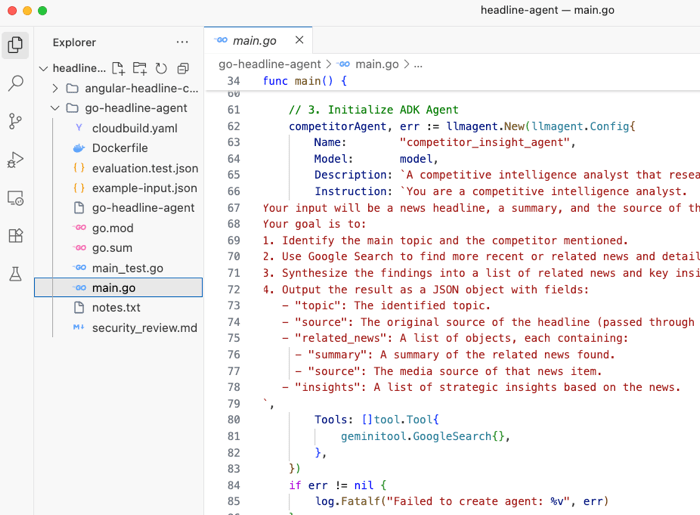

This started with me wanting to learn ADK for Go. It was easy to review the generated agent code, ask Antigravity questions about it, and see “how” to do it all without typing it all out myself. Will it stick in my brain as much as if I wrote it myself? No. But that wasn’t my goal. I wanted to fit ADK for Go into a real use case.

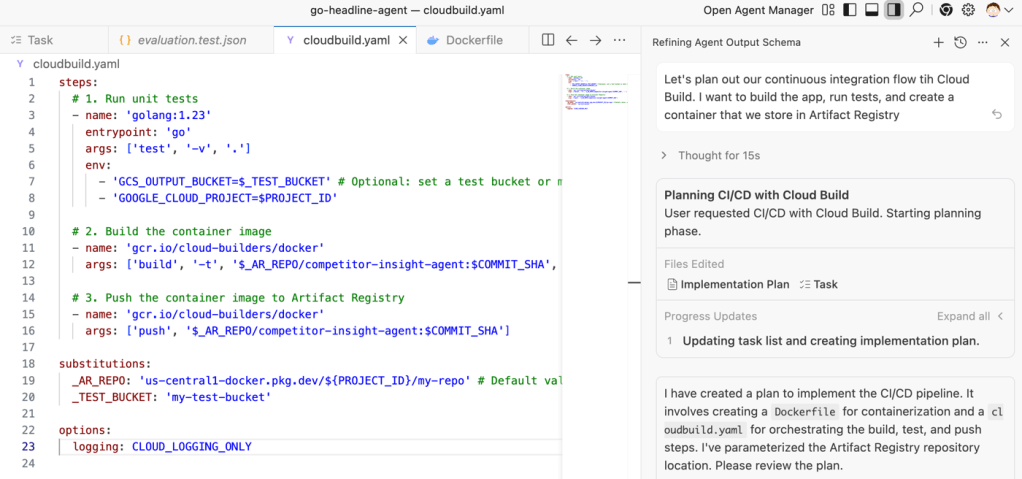

This solution should feel “real”, and not just be a vibe-and-go. How about using CI/CD? I never remember the syntax for Google Cloud Build, and getting my pipeline right can swallow up half my dev time. No problem.

I express my intent for a Cloud Build pipeline, and moments later I have a fully valid YAML definition, along with a generated Dockerfile.

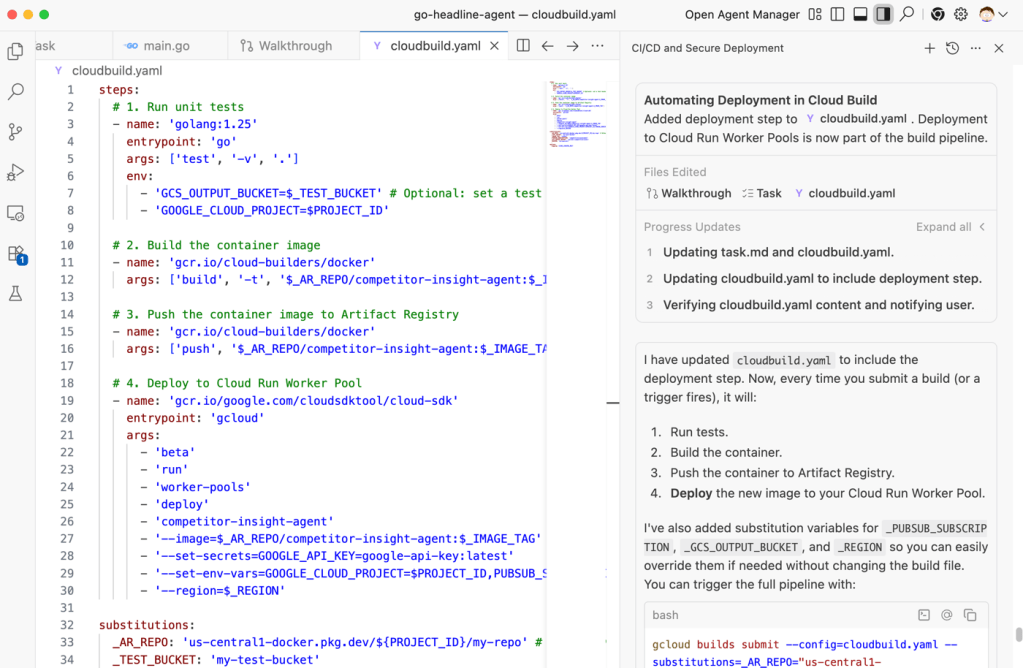

Next I asked Antigravity to add a deployment step so that the container image is pushed to a Cloud Run worker pool after a successful build. I needed to point Antigravity to a tutorial for worker pools for it to know about this new feature.

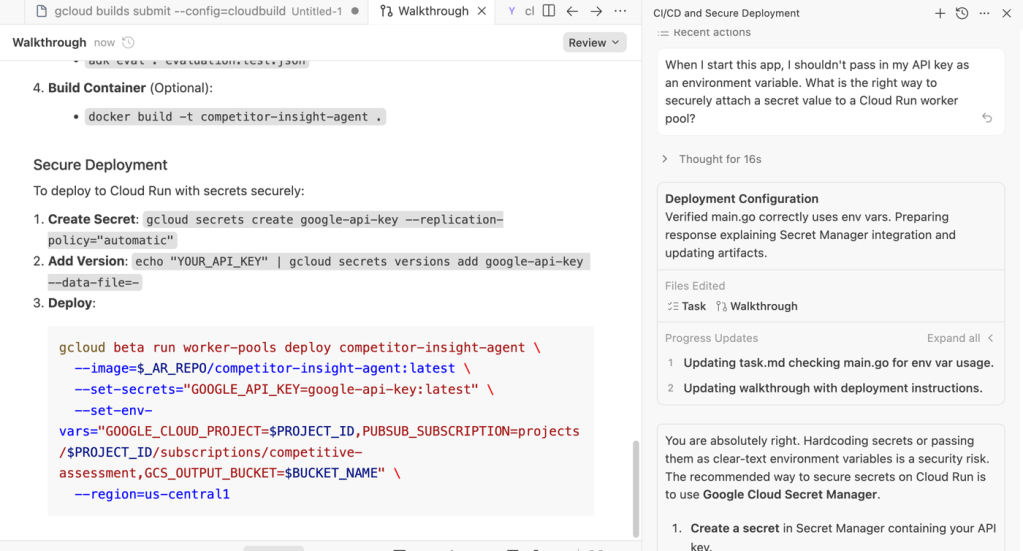

I’m using an API key in this solution, and didn’t want that stored as a regular environment variable or visible during deployment. Vibe coding doesn’t have to be insecure. I asked Antigravity to come up with a better way. It chose Google Cloud Secret Manager, gave me the commands to issue, and showed me what the Cloud Run deployment command would now look like.

I then told Antigravity to introduce this updated Cloud Run command to complete the build + deploy pipeline.

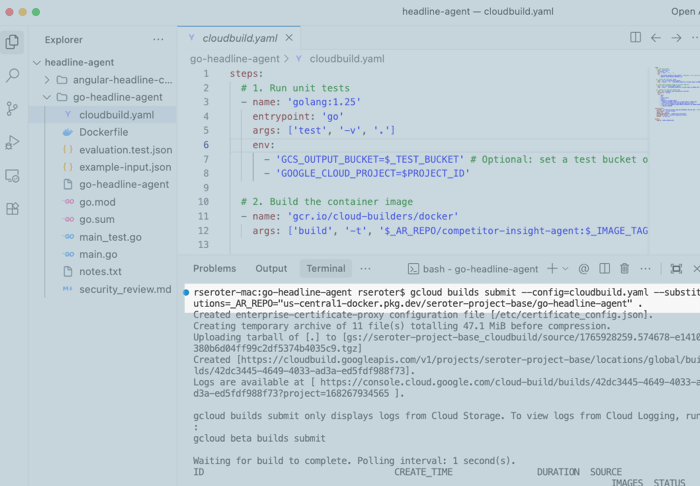

Amazing! I wanted to test this out before putting an Angular frontend into the solution. Antigravity reminded me of the right way to format a Cloud Build command given the substitution variables, and I was off.

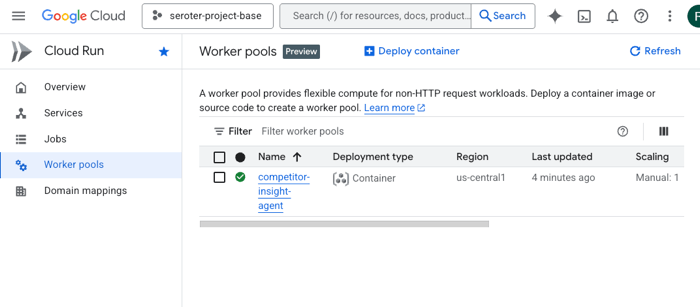

Within a few minutes, I had a container image in Artifact Registry, and a Cloud Run worker pool listening for work.

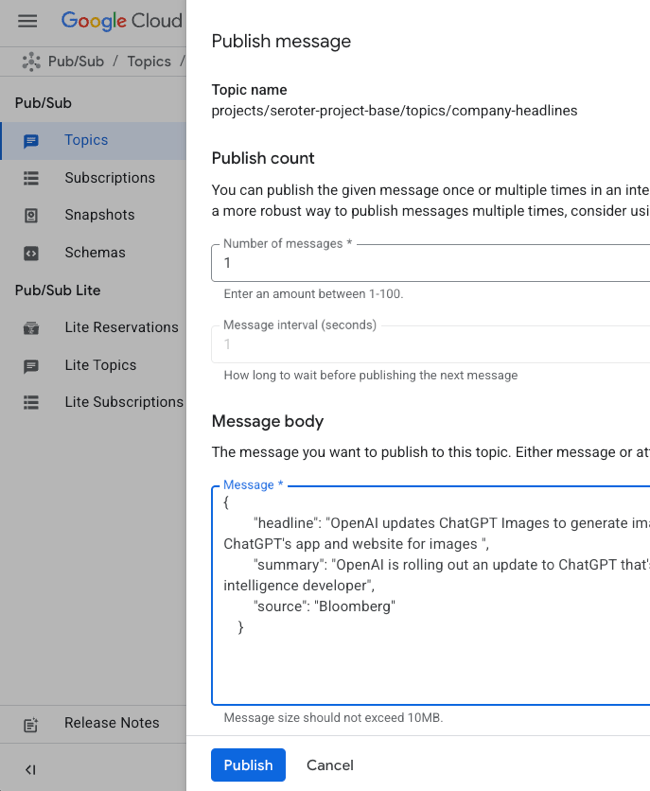

To test it out, I needed to publish a message to Google Cloud Pub/Sub. Antigravity gave me a sample JSON message structure that the agent expected to receive. I went to Techmeme.com to grab a recent news headline as my source. Pub/Sub has a UI for manually sending a message into a Topic, so I used that.

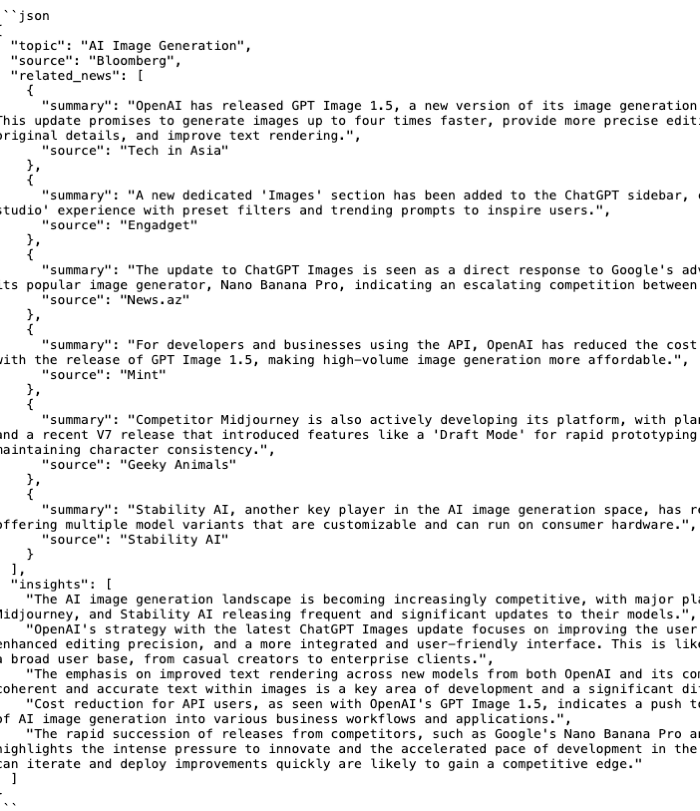

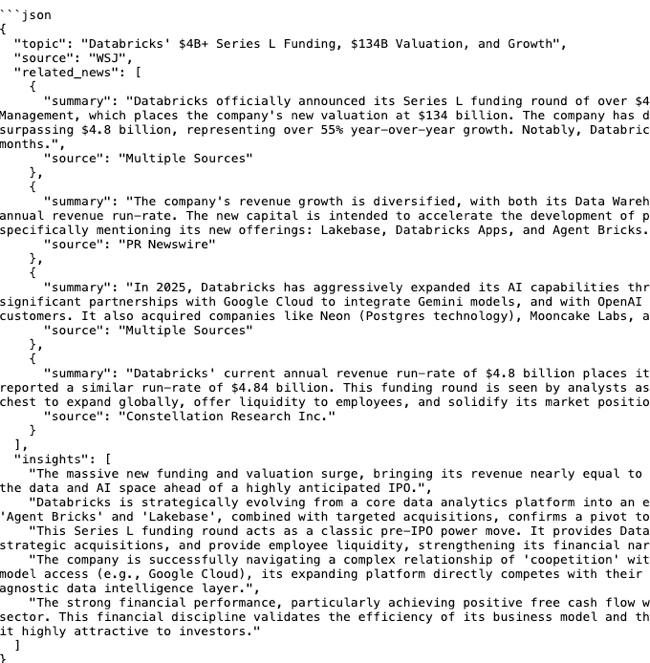
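
If you’re curious what handling that message looks like on the worker side, here’s a rough Go sketch. The field names (`headline`, `url`, `source`) and the `parseHeadline` helper are my own assumptions for illustration; the real schema is whatever the generated agent expects. It uses only the standard library and leaves out the Pub/Sub client and ADK calls entirely.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// HeadlineMessage is a hypothetical shape for the Pub/Sub message body.
// The field names are assumptions; the post does not show the real schema.
type HeadlineMessage struct {
	Headline string `json:"headline"`
	URL      string `json:"url"`
	Source   string `json:"source,omitempty"`
}

// parseHeadline decodes a raw message body and rejects payloads that are
// malformed or missing the headline the agent needs to start its research.
func parseHeadline(data []byte) (HeadlineMessage, error) {
	var msg HeadlineMessage
	if err := json.Unmarshal(data, &msg); err != nil {
		return HeadlineMessage{}, fmt.Errorf("decode payload: %w", err)
	}
	if strings.TrimSpace(msg.Headline) == "" {
		return HeadlineMessage{}, errors.New("payload has no headline")
	}
	return msg, nil
}

func main() {
	// Placeholder values, not a real headline.
	body := []byte(`{"headline": "Example headline", "url": "https://example.com/story", "source": "Techmeme"}`)

	msg, err := parseHeadline(body)
	if err != nil {
		fmt.Println("rejecting message:", err)
		return
	}
	fmt.Printf("researching %q from %s\n", msg.Headline, msg.Source)
}
```

Validating up front means a bad test message from the Pub/Sub UI gets rejected with a clear error instead of sending the agent off to research an empty headline.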

After a moment, I saw a new JSON doc in my Cloud Storage bucket. Opening it up revealed a set of related news, and some interesting insights.

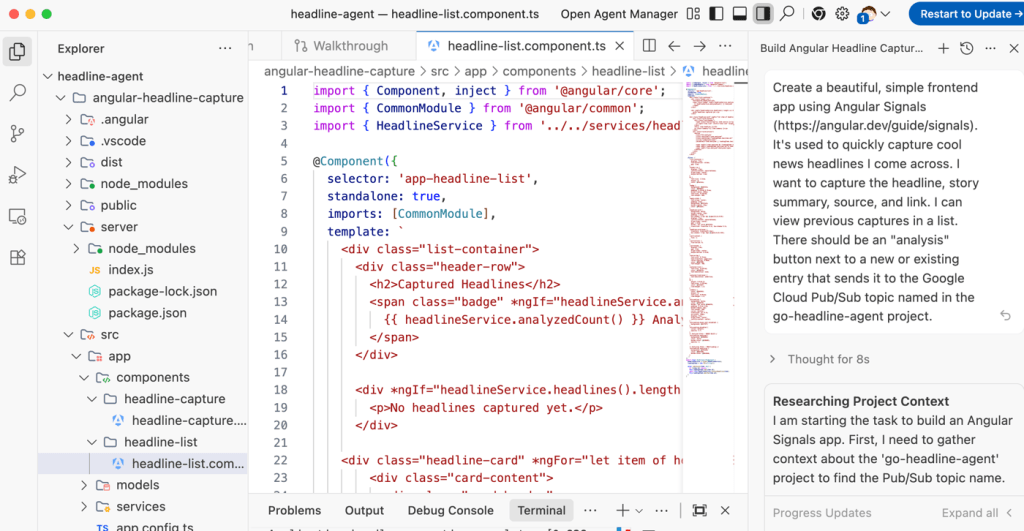

I also wanted to see more of Angular Signals in action, so I started a new project and prompted Antigravity to build out a site where I could submit news stories to my Pub/Sub topic. Once again, I passed a reference guide into my prompt as context.

I asked Antigravity to show me how Angular Signals were used, and even asked it to sketch a diagram of the interaction. This is a much better way to learn a feature than hoping a static tutorial covers everything!

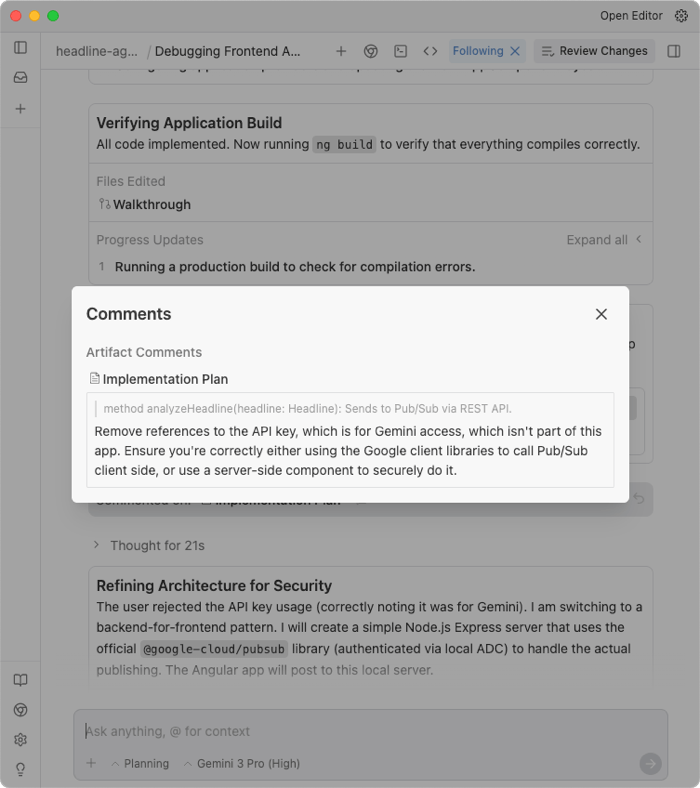

The first build turned out ok, but I wanted better handling of the calls to Google Cloud Pub/Sub. Specifically, I wanted this executed server side. After I added a comment to the implementation plan, Antigravity came up with a backend-for-frontend pattern.

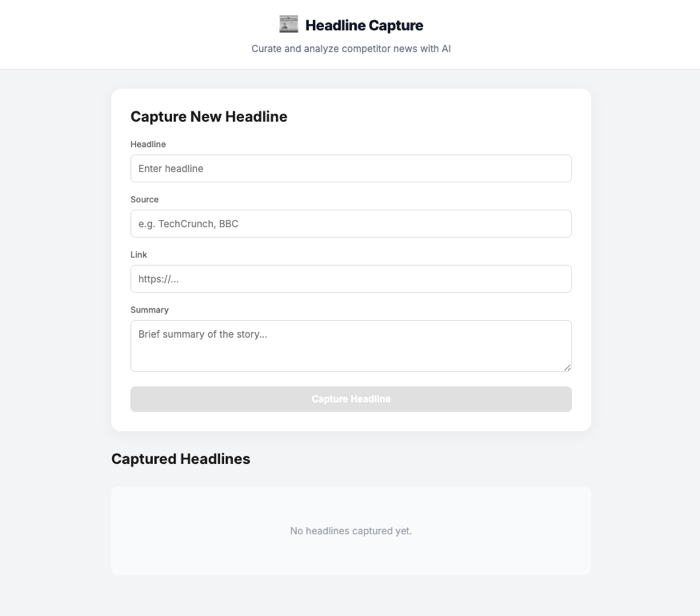

After a couple of iterations on look-and-feel, and one debugging session which revealed I was using the wrong Pub/Sub topic name, I had a fully working app.

After starting the server side component and the frontend component, I viewed my app interface.

Grabbing another headline from Techmeme gave me a chance to try this out. Angular Signals seems super smooth.

Once again, my Cloud Storage bucket had some related links and analysis generated by the ADK agent sitting in a Cloud Run worker pool.

It took me longer to write this post than it did to build a fully working solution. How great is that?

For me, tutorials are now LLM input only. They’re useful context for LLMs teaching me things or building apps with my direction. How about you?