I recently had a chance to investigate the new SQL Adapter that ships with BizTalk Server 2009 (as part of the BizTalk Adapter Pack) and wanted to highlight one of the most interesting features.

There are lots of things to love about the new adapter over the old one (now WCF-based, greater configuration, cross-table transactions, etc), but one of the coolest ones is support for SQL Server Query Notification. Instead of relying on a polling based solution to discover database changes, you can have SQL Server raise an event to your receive location when relevant changes are committed. Why is this good? For one scenario, consider a database that updates infrequently, but when changes DO occur, they need to be disseminated in a timely manner. You could use polling with a small interval, but that’s quite wasteful given the infrequency of change. However, using a 1-day polling interval is impractical if you need rapid communication of updates. This is where Query Notification is useful. Let’s walk through an example.

First, I created the following database table for “Employees.” I’ve highlighted the fact that when I installed the SQL Server 2008 database engine, I also installed Service Broker (which is required for Query Notification).

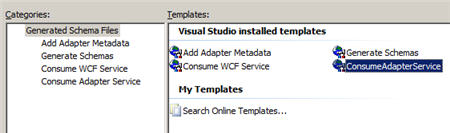

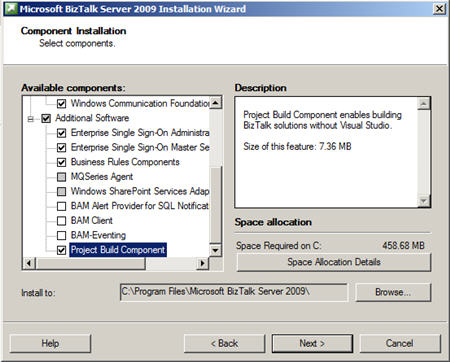

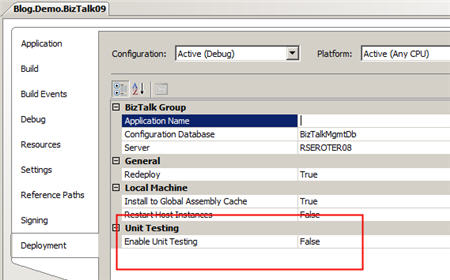

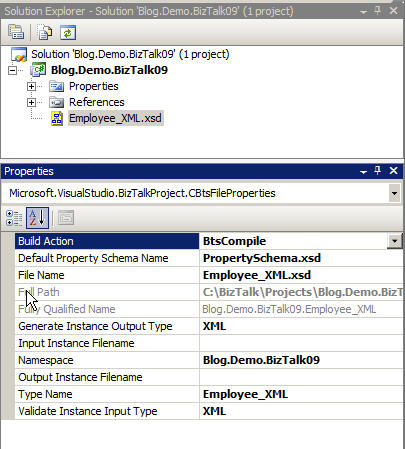

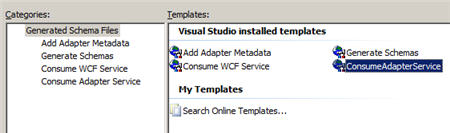

Once my table is in place, I want to next add the appropriate schema(s) to my BizTalk Server 2009 project. If you recall from using the BizTalk Adapter Pack in the past, you go to the “Add Generated Items” page and choose “Consume Adapter Service.”

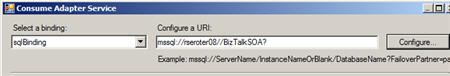

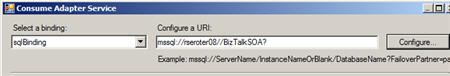

The first thing you need to do is establish a connection string for accessing your database. The connection property window allows you to pick the server, instance, failover node, database name and more.

Once a valid connection is created, we can browse the database. Because I’ve chosen “Service” under the “Select Contract Type” drop down, I do not see the database artifacts in the “Select a Category” pane. Instead, I see operations that make BizTalk Server act as a service provider (e.g. polling) instead of a service consumer (e.g. select from table). I’ve chosen the “Notification” option.

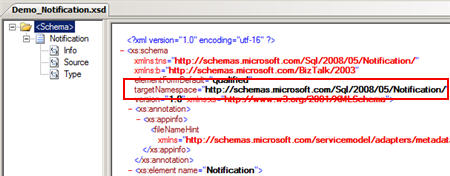

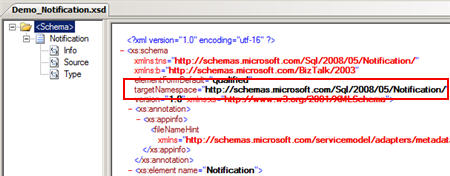

The schema generated by this wizard is one of the most generic, non-contextual schema I’ve seen in a while. However, I’m not sure that I can fault BizTalk for that as it appears to be the standard Query Notification event schema provided by SQL Server.

Note that this schema DOES NOT have any information about which table changed, which row changed, or which data changed. All it tells you is that an event occurred in a database. During receive location configuration you can specify the type of data you are interested in, but that interest does not seep into this event message. The idea is that you take this notice and use an orchestration to then retrieve the data implied by this event. One big “gotcha” I see here is that the target namespace is not related to the target database. This means you can you can only have one instance of this schema in your environment in order to avoid encountering collisions between matching namespace+root schemas. So, I’d suggest generating this schema once, and throwing it into a BizTalk.Common.dll and having everyone else reference that in their projects.

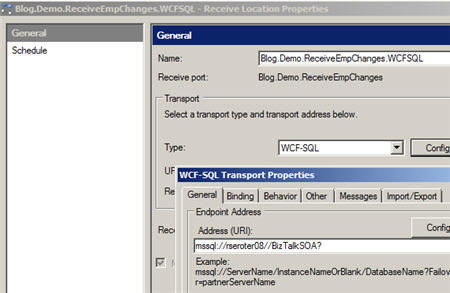

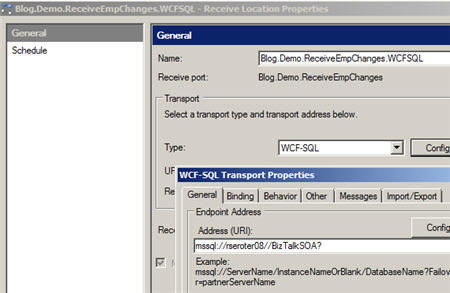

Ok, let’s see what this event message actually looks like. After deploying the schema, we need to create a receive location that SQL Server publishes to. This adapter looks and feels like all the other WCF adapters, down to the URI request on the first tab.

The most important tab is the “Binding” one where we set our notification details. Specifically, I set the “Inbound Operation Type” to “Notification” (instead of polling), and set a notification statement. I’m looking for any changes to my table where the “IsChanged” column is set to “True.” Be aware that you have to specify a column name (instead of “select *”) and you must provide the database owner on the table reference (dbo.Employees).

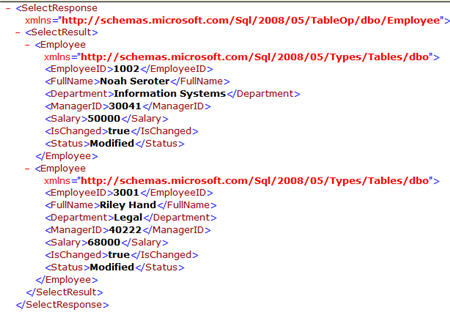

After I built a send port that simply subscribed to this receive port, I changed a record in my table. The resulting Query Notification event message looked like this:

As you can see, by itself, this is a useless message. You require the knowledge of which receive port it came from, and what your notification statement was. I haven’t thought it through too much, but it would probably be nice to at least have a database or table reference in this message.

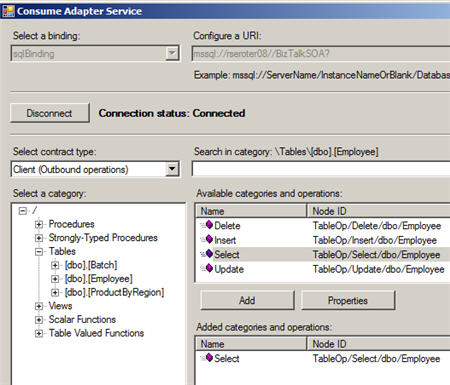

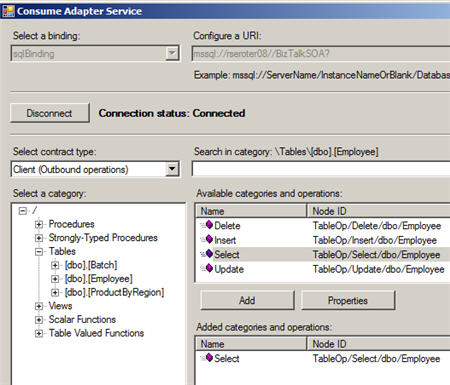

Now what if we want to do something with this event message? Let’s say that upon updates to the table, I want to select all changed records, update them so that they are no longer “changed”, and then publish that record set out. First, I walked through the “Consume Adapter Service” option again and chose a “Client” contract type and browsed to my “Employee” table and highlighted the “Select” operation.

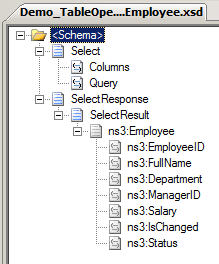

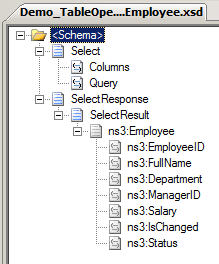

From this wizard, I now get a schema with both a request message and a strongly typed response.

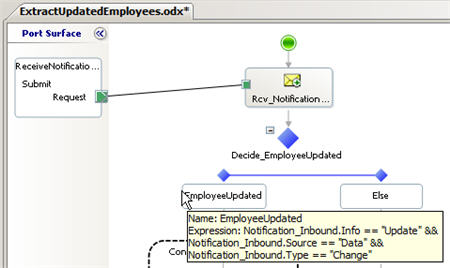

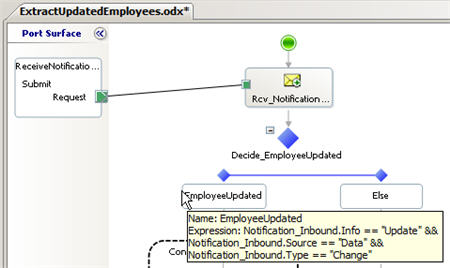

After distinguishing the fields of my Query Notification message, I created a new orchestration. I receive in the Query Notification message, and have a Decision to see if and update of the data has occurred.

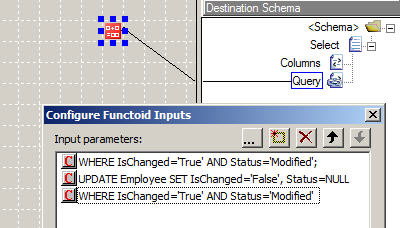

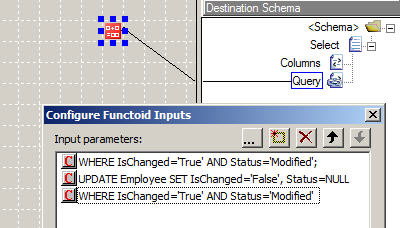

If a “change” event is encountered, I want to query my database and pull back all records whose “IsChanged” value is “True”, and “Status” is equal to “Modified.” When using the classic SQL adapter, we had to constantly remember to flip some sort of bit so that the next polling action didn’t repeatedly pull the same records. This is still useful with the new adapter as I don’t want my “Select” query to yank messages that were previously read. So, I need to both select the records, and then update them. What’s great about the new adapter is that I can do this all at once, in a single transaction. Specifically, in my Select request message, I can embed an “Update” statement in it.

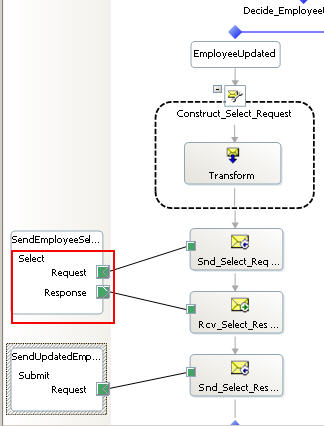

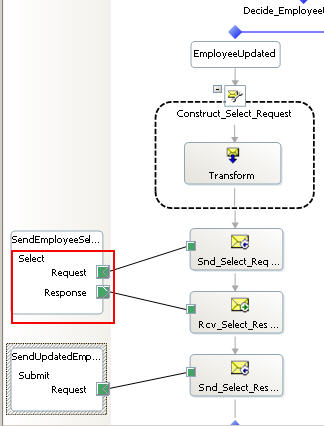

So above, I’m selecting “*” columns, and my “Query” node contains both my WHERE clause and UPDATE statement. In my orchestration, I send this Select request, get back all the records from the database, and publish that record set back out. I’ve highlighted the fact that my port operation name must match what’s expected by the adapter (in my case, “Select”).

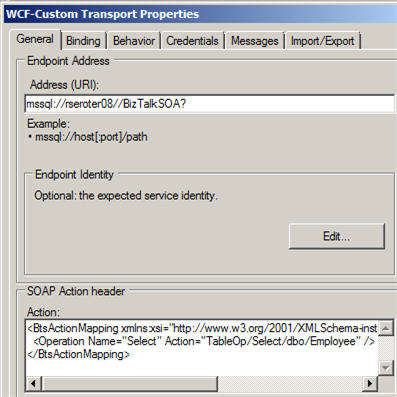

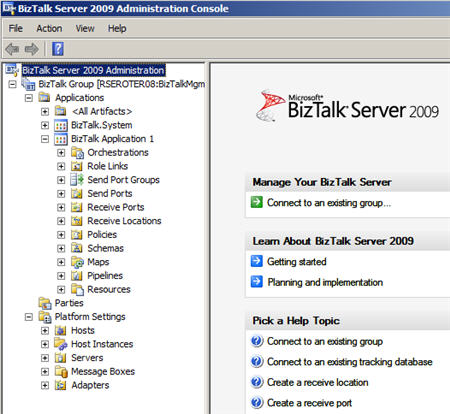

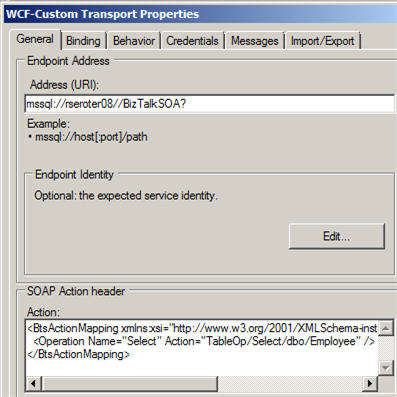

Because the “Consume Adapter Service” wizard also generated a send port binding (which I’ve imported into my application), I can confirm that the expected operation name is “Select.”

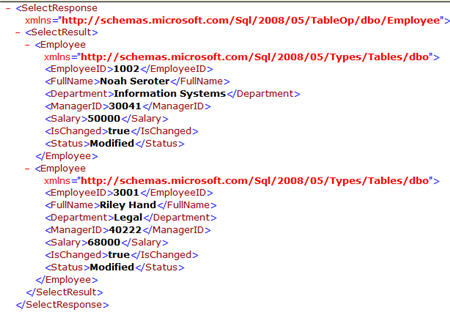

So what does the result look like? I changed two records in my database table, which means a notification was instantly sent to my receive location, which in turn instantiated an orchestration which both selected the changed records and updated those same records. The final message distributed by my orchestration looked like this:

There you go. I’m a fan of this new capability as it truly makes our databases event driven participants in our environment instead of passive repositories that require explicit querying for changes of state. While I don’t think I’m a fan of the generic schema, I suspect the best pattern is to take that generated event message, and, using what we know about the notification statement, republish a new event message that contains enough context to be actionable by downstream consumers.

What do you think? Is this interesting or will you stick with straight polling as a database access method?

Technorati Tags: BizTalk