In my first post of this series, I looked at the provisioning experience of five leading cloud Infrastructure-as-a-Service providers. No two were alike, as each offered a unique take.

Elasticity is an oft-cited reason for using the cloud, so scalability is a key way to assess the suitability of a given cloud to your workloads. Like before, I’ll assess Google Compute Engine, Microsoft Azure, AWS, CenturyLink Cloud, and Digital Ocean. Each cloud will be evaluated based on the ability to scale vertically (i.e. add/remove instance capacity) and horizontally (i.e. add/remove instances) either manually or automatically.

Let’s get going in alphabetical order.

DISCLAIMER: I’m the product owner for the CenturyLink Cloud. Obviously my perspective is colored by that. However, I’ve taught three well-received courses on AWS, use Microsoft Azure often as part of my Microsoft MVP status, and spend my day studying the cloud market and playing with cloud technology. While I’m not unbiased, I’m also realistic and can recognize strengths and weaknesses of many vendors in the space.

Amazon Web Services

How do you scale vertically?

In reality, AWS treats individual virtual servers as immutable. There are some complex resizing rules, and local storage cannot be resized at any time. Resizing an AWS image also results in all new public and private IP addresses. Honestly, you’re really building a new server when you choose to resize.

If you want to add CPU/memory capacity to a running virtual machine – and you’re not trying to resize to an instance type of a different virtualization type – then you must stop it first. You cannot resize instances between different virtualization types, so you may want to carefully plan for this. Note that stopping an AWS VM means that anything on the ephemeral storage is destroyed.

Once the VM is stopped, it’s easy to switch to a new instance type. Note that you have to be familiar with the instance types (e.g. size and cost) as you aren’t given any visual indicator of what you’re signing up for. Once you choose a new instance type, simply start up the instance.

Want to add storage to an existing AWS instance? You don’t do that from the “instances” view in their Console, but instead, create an EBS volume separately and attach it later.

Attaching is easy, but you do have to remember your instance name.

By changing instance type, and adding EBS volumes, teams can vertically scale their resources.

How do you scale horizontally?

AWS strongly encourages customers to build horizontally-scalable apps, and their rich Auto Scaling service supports that. Auto Scaling works by adding (or removing) virtual resources from a pool based on policies.

When creating an Auto Scaling policy, you first choose the machine image profile (the instance type and template to add to the Auto Scale group), and then define the Auto Scale group. These details include which availability zone(s) to add servers to, how many servers to start with, and which load balancer pool to use.

With those details in place, the user then sets up the scaling policy (if they wish) which controls when to scale out and when to scale in. One can use Auto Scale to keep the group at a fixed size (and turn up instances if one goes away), or keep the pool size fluid based on usage metrics or schedule.

Amazon has a very nice horizontal scaling solution that works automatically, or manually. Users are free to set up infrastructure Auto Scale groups, or, use AWS-only services like Elastic Beanstalk to wrap up Auto Scale in an application-centric package.

CenturyLink Cloud

How do you scale vertically?

CenturyLink Cloud offers a few ways to add new capacity to existing virtual servers.

First off, users can resize running servers by adding/removing vCPUs and memory, and growing storage. When adding capacity, the new resources are typically added without requiring a power cycle on the server and there’s no data loss associated with a server resize. Also, note that when you look at dialing resources up and down, the projected impact on cost is reflected.

Users add more storage to a given server by resizing any existing drives (including root) and by adding entirely new volumes.

If the cloud workload has spiky CPU consumption, then the user can set up a vertical Autoscale policy that adds and removes CPU capacity. When creating these per-server policies, users choose a CPU min/max range, how long to collect metrics before scaling, and how long to wait before another scale event (“cool down period”). Because scaling down (removing vCPUs) requires a reboot, the user is asked for a time window when it’s ok to cycle the server.

How do you scale horizontally?

Like any cloud, CenturyLink Cloud makes it easy to manually add new servers to a fleet. Over the summer, CenturyLink added a Horizontal Autoscale service that powers servers on and off based on CPU and memory consumption thresholds. These policies – defined once and available in any region – call out minimum sizing, monitoring period threshold, cool down period, scale out increment, scale in increment, and CPU/RAM utilization thresholds.

Unlike other public clouds, CenturyLink organizes servers by “groups.” Horizontal Autoscale policies are applied at the Group level, and are bound to a load balancer pool when applied. When a scale event occurs, the servers are powered on and off within seconds. Parked servers only incur cost for storage and OS licensing (if applicable), but there still is a cost to this model that doesn’t exist in the AWS-like model of instantiating and tearing down servers each time.

CenturyLink Cloud provides a few ways to quickly scale vertically (manually or automatically without rebooting), and now, horizontally. While the autoscaling capability isn’t as feature-rich as what AWS offers, the platform recognizes the fact that workloads have different scale vectors and benefit from capacity being added up or out.

Digital Ocean

How do you scale vertically?

Digital Ocean offers a pair of ways to scale a droplet (virtual instance).

First, users can do a “Fast-Resize” which quickly increases or decreases CPU and memory. A droplet must be powered off to resize.

After shutting the droplet down and choosing a new droplet size, the additional capacity is added in seconds.

Once a droplet is sized up, it’s easy to (power off) and size down again.

If you want to change your disk size as well, Digital Ocean offers a “Migrate-Resize” model where you first take a snapshot of your (powered off) droplet.

Then, you create an entirely new droplet, but choose that snapshot as the “base.” This way, you end up with a new (larger) droplet with all the data from the original one.

How do you scale horizontally?

You do it manually. There are no automated techniques for adding more machines when a usage threshold is exceeded. They do tout their API as a way to detect scale conditions and quickly clone droplets to add more to a running fleet.

Digital Ocean is known for its ease, performance, and simplicity. There isn’t the level of sophistication and automation you find elsewhere, but the scaling experience is very straightforward.

Google Compute Engine

How do you scale vertically?

Google lets you add more storage to a running virtual machine. Persistent disks can be shared among many machines, although only one machine at a time can have read/write permission.

Interestingly, Google Compute Engine doesn’t support an upgrade/downgrade to different instance types, so there’s no way to add/remove CPU or memory from a machine. They recommend creating a new virtual machine and attaching the persistent disks from the original one. So, “more storage” is the only vertical scaling capability currently offered here.

How do you scale horizontally?

Up until a week ago, Google didn’t have an auto scaling solution. That changed, and now the Compute Engine Autoscaler is in beta.

First, you need to set up an instance template for use by the Autoscaler. This is the same data you provide when creating an actual running instance. In this case, it’s template-ized for future use.

Then, create an instance group that lets you collectively manage a group of resources. Here’s the view of it, before I chose to set “Autoscaling” to “On.”

Turning Autoscaling on results in new settings popping up. Specifically, the autoscale trigger (choices: CPU usage, HTTP load balancer usage, monitoring metric), the usage threshold, instance min/max, and cool-down period.

You can use this with HTTP or network load balanced instance groups to load balance multiple app tiers independently.

Google doesn’t offer much in the way of vertical resizing, but the horizontal auto scaling story is quickly catching up to the rest.

Microsoft Azure

How do you scale vertically?

Microsoft provides a handful of vertical scaling options. For a virtual server instance, a user can change the instance type in order to get more/less CPU and memory. It appears from my testing that this typically requires a reboot of the server.

Azure users can also add new, empty disks to a given server. It doesn’t appear as if you can resize existing disks.

How do you scale horizontally?

Microsoft, like all clouds, makes it easy to add more virtual instances manually. They also have a horizontal auto scale capability. First, you must put servers into an “availability set” together. This is accomplished by first putting them into the same “cloud service” in Azure. In the screenshot below, seroterscale is the name of my cloud service, and both the two instances are part of the same availability set.

Somewhat annoyingly, all these machines have to be the exact same size (which is the requirement in some other clouds too, minus CenturyLink). So after I resized my second server, I was able to muck with the auto scale settings. Note that Azure auto scale also works by enabling/disabling existing virtual instances versus creating or destroying instances.

Notice that you have two choices. First, you can scale based on scheduled time.

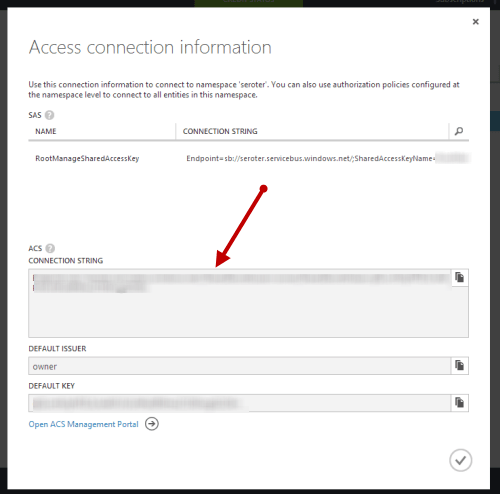

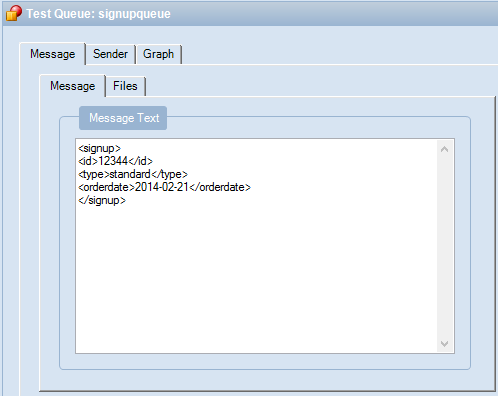

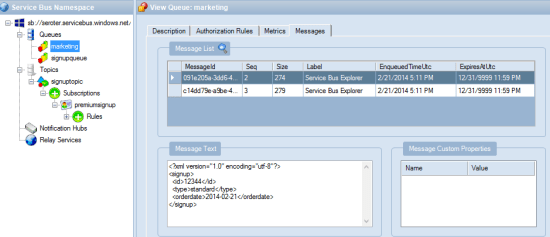

Either by schedule or by metric, you specify how many instances to turn on/off based on the upper/lower CPU threshold. It’s also possible to scale based on the queue depth of a Service Bus queue.

Microsoft gives you a few good options for bumping up the resources on existing machines, while also enabling more servers in the fleet to offset planned or unplanned demand.

Summary

As with my assessment of cloud provisioning experiences, each cloud provider’s scaling story mirrors their view of the world. Amazon has a broad, sophisticated, and complex feature set, and their manual and Auto Scaling capabilities reflects that. CenturyLink Cloud focuses on greenfield and legacy workloads, and thus has a scaling story that’s focused on supporting both modern scale-out systems as well as traditional systems that prefer to scale up. Digital Ocean is all about fast acquisition of resources and an API centric management story, and their basic scaling options demonstrate that. Google focuses a lot on quickly getting lots of immutable resources, and their limited vertical scaling shows that. Their new horizontal scaling service complements their perspective. Finally, Microsoft’s experience for vertical scaling mirrors AWS, while their horizontal scaling is a bit complicated, but functional.

Unless you’re only working with modern applications, it’s likely your scaling needs will differ by application. Hopefully this look across providers gave you a sense for the different capabilities out there, and what you might want to keep in mind when designing your systems!

Many cloud providers focus on the “acquire stuff” experience and leave the “manage stuff” experience lacking. Whether your cloud resources live for 3 days or three years, there are maintenance activities. CenturyLink Cloud lets you create account hierarchies to represent your org, organize virtual servers into “groups”, act on those servers as a group, see cross-DC server health at a glance, and more. It’s a focus of this platform, and it differs from most other clouds that give you a flat list of cloud servers per data center and a limited number of UI-driven management tools. With the rise of configuration management as a mainstream toolset, platforms with limited UIs can still offer robust means for managing servers at scale. But, CenturyLink Cloud is focused on everything from account management and price transparency, to bulk server management in the platform.

Many cloud providers focus on the “acquire stuff” experience and leave the “manage stuff” experience lacking. Whether your cloud resources live for 3 days or three years, there are maintenance activities. CenturyLink Cloud lets you create account hierarchies to represent your org, organize virtual servers into “groups”, act on those servers as a group, see cross-DC server health at a glance, and more. It’s a focus of this platform, and it differs from most other clouds that give you a flat list of cloud servers per data center and a limited number of UI-driven management tools. With the rise of configuration management as a mainstream toolset, platforms with limited UIs can still offer robust means for managing servers at scale. But, CenturyLink Cloud is focused on everything from account management and price transparency, to bulk server management in the platform.