BizTalk Services is far from the most mature cloud-based integration solution, but it’s a viable one for certain scenarios. I haven’t seen a whole lot of demos that show how to send data to SaaS endpoints, so I thought I’d spend some of my weekend trying to make that happen. In this blog post, I’m going to walk through the steps necessary to make BizTalk Services send a message to a Salesforce REST endpoint.

I had four major questions to answer before setting out on this adventure:

- How to authenticate? Salesforce uses an OAuth-based security model where the caller acquires a token and uses it in subsequent service calls.

- How to pass in credentials at runtime? I didn’t want to hardcode the Salesforce credentials in code.

- How to call the endpoint itself? I needed to figure out the proper endpoint binding configuration and the right way to pass in the headers.

- How to debug the damn thing? BizTalk Services – like most cloud-hosted platforms without an on-premises equivalent – is a black box, and decent testing tools are a must.

The answer to the first two questions is “write a custom component.” Fortunately, BizTalk Services has an extensibility point where developers can throw custom code into a Bridge. I added a class library project containing the following class. It takes in a series of credential parameters from the Bridge design surface, calls the Salesforce login endpoint, and puts the resulting OAuth access token into a message context property for later use. I also dumped a few other values into context to help with debugging. Note that this library references the great JSON.NET NuGet package.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using Microsoft.BizTalk.Services;
using System.Net.Http;
using System.Net.Http.Headers;
using Newtonsoft.Json.Linq;

namespace SeroterDemo
{
    public class SetPropertiesInspector : IMessageInspector
    {
        // Credential values supplied on the bridge design surface
        [PipelinePropertyAttribute(Name = "SfdcUserName")]
        public string SfdcUserName_Value { get; set; }

        [PipelinePropertyAttribute(Name = "SfdcPassword")]
        public string SfdcPassword_Value { get; set; }

        [PipelinePropertyAttribute(Name = "SfdcToken")]
        public string SfdcToken_Value { get; set; }

        [PipelinePropertyAttribute(Name = "SfdcConsumerKey")]
        public string SfdcConsumerKey_Value { get; set; }

        [PipelinePropertyAttribute(Name = "SfdcConsumerSecret")]
        public string SfdcConsumerSecret_Value { get; set; }

        private string oauthToken = "ABCDEF";

        public Task Execute(IMessage message, IMessageInspectorContext context)
        {
            return Task.Factory.StartNew(() =>
            {
                if (null != message)
                {
                    // dispose the client once the login call completes
                    using (HttpClient authClient = new HttpClient())
                    {
                        // Salesforce expects the security token appended to the password
                        string loginPassword = SfdcPassword_Value + SfdcToken_Value;

                        // prepare the OAuth username-password payload
                        HttpContent content = new FormUrlEncodedContent(new Dictionary<string, string>
                        {
                            {"grant_type", "password"},
                            {"client_id", SfdcConsumerKey_Value},
                            {"client_secret", SfdcConsumerSecret_Value},
                            {"username", SfdcUserName_Value},
                            {"password", loginPassword}
                        });

                        // post request and make sure to wait for response
                        var tokenResponse = authClient.PostAsync("https://login.salesforce.com/services/oauth2/token", content).Result;
                        string responseString = tokenResponse.Content.ReadAsStringAsync().Result;

                        // extract token from the JSON response
                        JObject obj = JObject.Parse(responseString);
                        oauthToken = (string)obj["access_token"];

                        // throw values into context to prove they made it into the class OK (debugging only)
                        message.Promote("consumerkey", SfdcConsumerKey_Value);
                        message.Promote("consumersecret", SfdcConsumerSecret_Value);
                        message.Promote("response", responseString);

                        // put token itself into context for the outbound Authorization header
                        string propertyName = "OAuthToken";
                        message.Promote(propertyName, oauthToken);
                    }
                }
            });
        }
    }
}
```
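If you want to poke at the login call outside of BizTalk Services, here’s a minimal Python sketch of the same OAuth username-password request the inspector builds. It only assembles the form body for `https://login.salesforce.com/services/oauth2/token` and parses a response string, so nothing goes over the wire. The function names are hypothetical. The explicit failure check is an addition the C# class doesn’t have, and it assumes a failed login returns an error object without an `access_token` field.

```python
import json
from urllib.parse import urlencode


def build_password_grant_body(username, password, security_token,
                              consumer_key, consumer_secret):
    """Form-encode the OAuth username-password grant, as the inspector does."""
    fields = {
        "grant_type": "password",
        "client_id": consumer_key,
        "client_secret": consumer_secret,
        "username": username,
        # Salesforce wants the security token appended directly to the password
        "password": password + security_token,
    }
    return urlencode(fields)


def extract_access_token(response_text):
    """Pull access_token out of the JSON login response, failing loudly if absent."""
    payload = json.loads(response_text)
    token = payload.get("access_token")
    if token is None:
        # assumption: a failed login returns an error object instead of a token
        raise ValueError("Salesforce login failed: " + response_text)
    return token
```

Failing fast here beats silently promoting a null token, which would only surface later as a vague 401 from the REST call.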

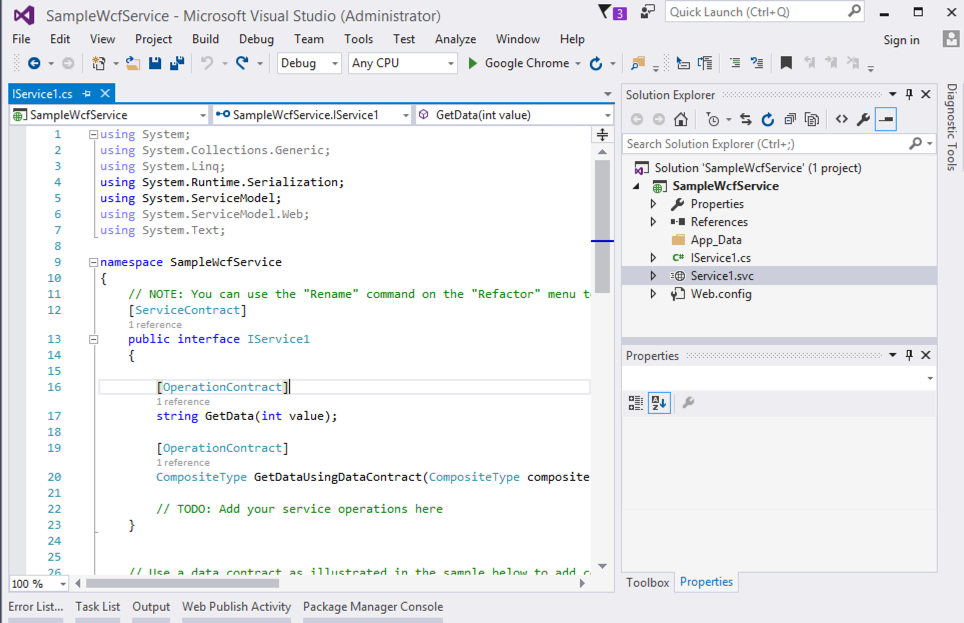

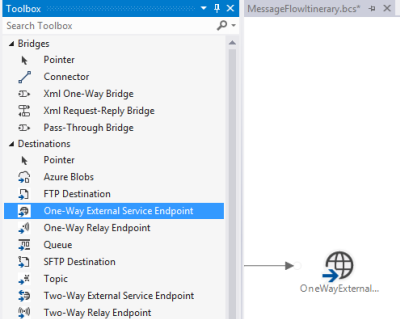

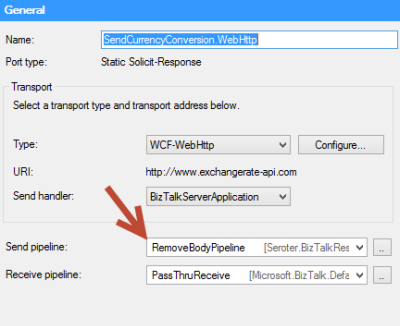

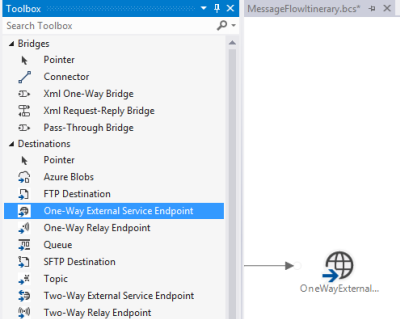

With that code in place, I next focused on getting the right endpoint definition in place to call Salesforce. I used the One Way External Service Endpoint destination, which, by default, uses the BasicHttp WCF binding.

Now *ideally*, the REST endpoint would be pulled from the authentication response and applied at runtime. However, I’m not exactly sure how to take a value from the authentication call and override a configured endpoint address. So, for this example, I called the Salesforce authentication endpoint from an outside application and pulled out the returned service endpoint manually. Not perfect, but good enough for this scenario. Below is the configuration file I created for this destination shape. Notice that I switched the binding to webHttpBinding and set the security mode to Transport.

```xml
<configuration>
  <system.serviceModel>
    <bindings>
      <webHttpBinding>
        <binding name="restBinding">
          <security mode="Transport" />
        </binding>
      </webHttpBinding>
    </bindings>
    <client>
      <clear />
      <endpoint address="https://na15.salesforce.com/services/data/v25.0/sobjects/Account"
                binding="webHttpBinding" bindingConfiguration="restBinding"
                contract="System.ServiceModel.Routing.ISimplexDatagramRouter"
                name="OneWayExternalServiceEndpointReference1" />
    </client>
  </system.serviceModel>
</configuration>
```
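The login response already carries the org’s base URL in its `instance_url` field, so deriving the REST address is simple string work. The unsolved part is pushing that address into the bridge’s configured endpoint at runtime, and this doesn’t attempt that. A Python sketch of just the derivation, assuming the same `v25.0` API version and `Account` object as the endpoint address in the config (`sobject_endpoint` is a hypothetical helper):

```python
import json

API_VERSION = "v25.0"  # matches the version in the configured endpoint address


def sobject_endpoint(login_response_text, sobject="Account", version=API_VERSION):
    """Build the sObject REST URL from the instance_url in a login response."""
    instance_url = json.loads(login_response_text)["instance_url"]
    # strip any trailing slash so the path joins cleanly
    return f"{instance_url.rstrip('/')}/services/data/{version}/sobjects/{sobject}"
```

That’s essentially the manual step I did with an outside application: read `instance_url` and bolt on the REST path.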

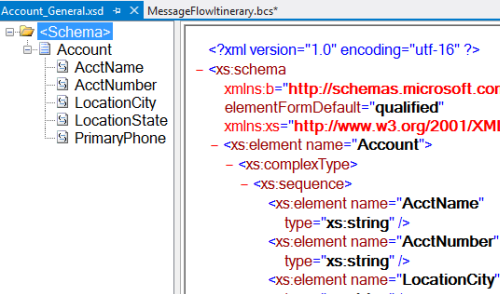

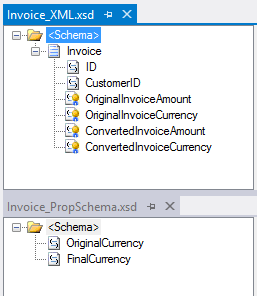

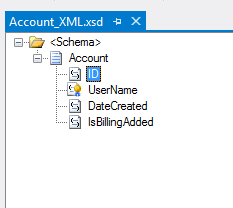

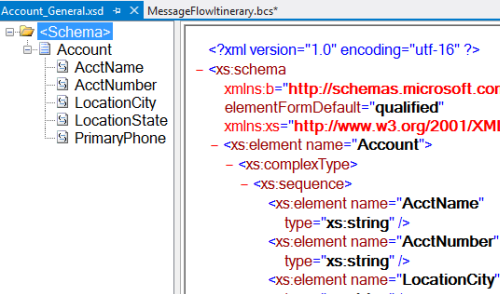

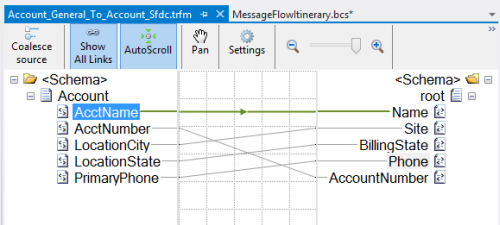

With this in place, I created a pair of XML schemas and a map. The first schema represents a generic “account” definition.

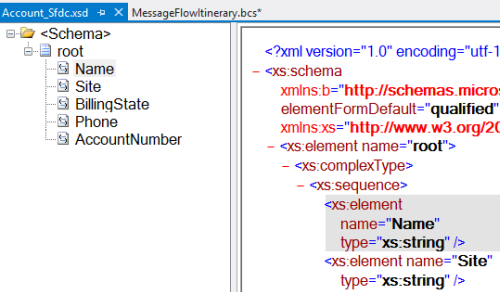

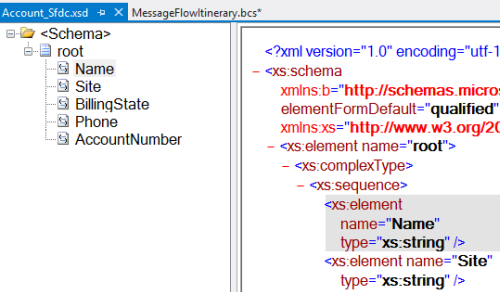

My next schema defines the format expected by the Salesforce REST endpoint. It’s basically a root node called “root” (with no namespace) and elements named after the field names in Salesforce.

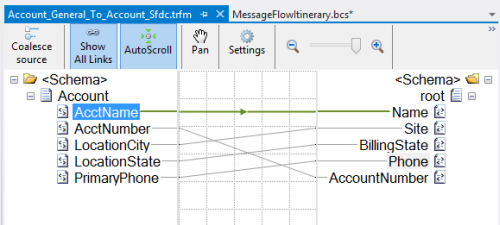

As expected, my mapping between these two is super complicated. I’ll give you a moment to study its subtle beauty.

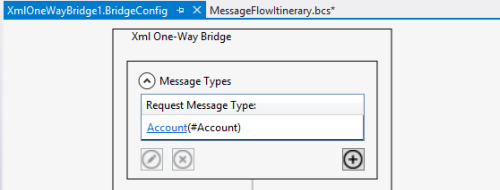

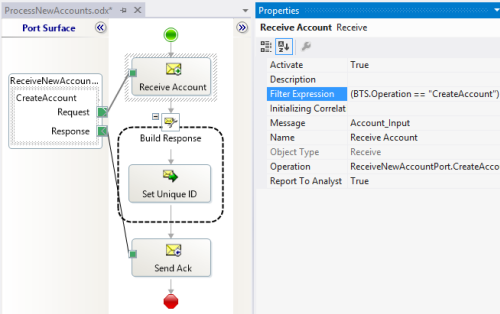

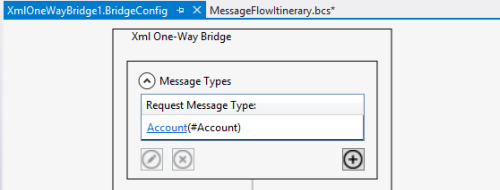
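Since the map only lives in the designer, here’s a rough Python equivalent of what it does: read the generic account and emit the namespace-less `<root>` document Salesforce expects. The source namespace and the `AccountName`/`City` fields are hypothetical stand-ins for the schema in the screenshots. `Name` and `BillingCity` are standard Salesforce Account fields.

```python
import xml.etree.ElementTree as ET

# Hypothetical namespace for the generic account message
SOURCE_NS = "http://example.com/schemas/account"

# Hypothetical generic field -> standard Salesforce Account field
FIELD_MAP = {
    "AccountName": "Name",
    "City": "BillingCity",
}


def map_account(source_xml):
    """Transform a generic account document into Salesforce's <root> shape."""
    source = ET.fromstring(source_xml)
    target = ET.Element("root")  # no namespace, as the Salesforce schema expects
    for source_field, sfdc_field in FIELD_MAP.items():
        value = source.findtext(f"{{{SOURCE_NS}}}{source_field}")
        if value is not None:
            ET.SubElement(target, sfdc_field).text = value
    return ET.tostring(target, encoding="unicode")
```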

With those in place, I was ready to build out my bridge. I dragged an Xml One-Way Bridge shape to the message flow surface. My bridge had two goals: transform the message, and put the credentials into context. I started the bridge by defining the input message type, which is the first schema I created, describing the generic account message.

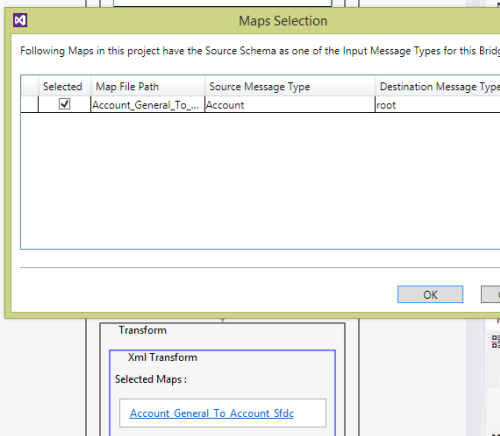

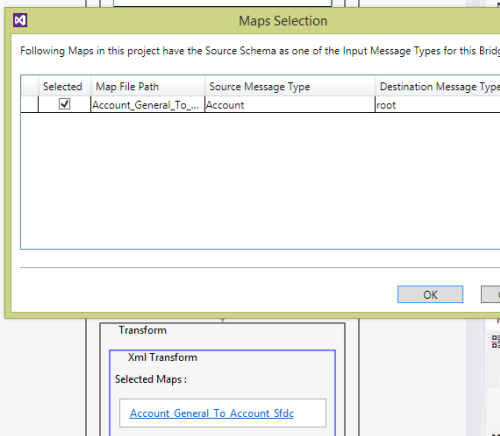

Choosing a map is easy; just add the appropriate map to the collection property on the Transform stage.

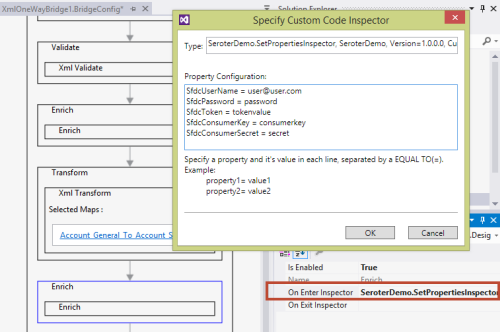

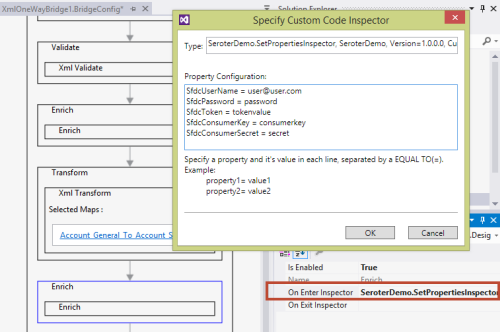

With the message transformed, I then had to get the property bag configured with the right context properties. On the final Enrich stage of the pipeline, I chose the On Enter Inspector to select the code to run when this stage starts. I entered the fully qualified name of the inspector class, and then, on separate lines, put the values for each (authorization) property I defined in the class above. Note that you do NOT wrap these values in quotes. I wasted an hour trying to figure out why my values weren’t working correctly!

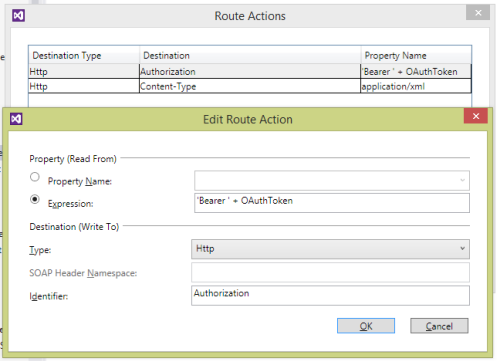

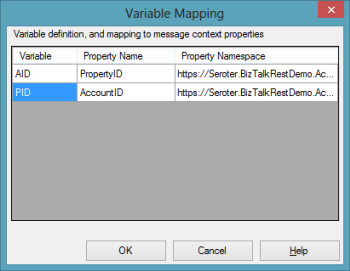

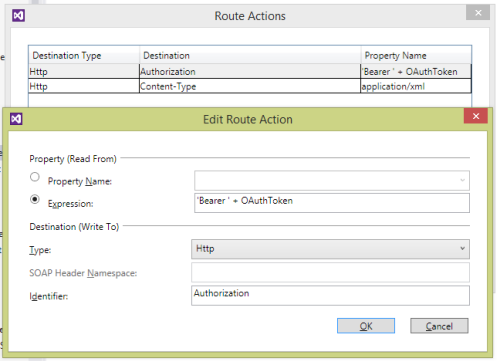
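The ordering matters. The map runs in the Transform stage, and the inspector fires when the Enrich stage starts, so the token lands in context before the message reaches the connector. Below is a toy Python model of that sequence. `Message`, `run_bridge` and the stage functions are hypothetical, this is not the BizTalk Services runtime, and the string replace is a crude stand-in for the real map.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    """Toy stand-in for a bridge message: a payload plus a context property bag."""
    body: str
    properties: Dict[str, str] = field(default_factory=dict)


Stage = Callable[[Message], None]


def run_bridge(message: Message, stages: List[Stage]) -> Message:
    """Run each stage in order, mimicking Transform followed by Enrich."""
    for stage in stages:
        stage(message)
    return message


def transform_stage(message: Message) -> None:
    # crude stand-in for the map: rename the root element
    message.body = message.body.replace("<Account>", "<root>").replace("</Account>", "</root>")


def make_enrich_stage(get_token: Callable[[], str]) -> Stage:
    """Return an On Enter-style inspector that promotes the OAuth token."""
    def enrich(message: Message) -> None:
        message.properties["OAuthToken"] = get_token()
    return enrich
```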

The web service endpoint was already configured above, so all that was left was to configure the connector. The connector between the bridge and destination shapes was set to route all messages to that single destination (“Filter condition: 1=1”). The most important configuration was the headers. Clicking the Route Actions property of the connector opens a window for setting SOAP or HTTP headers on the outbound message. I defined a pair of headers. One sets the Content-Type so that Salesforce knows I’m sending it an XML message, and the second defines the Authorization header as a combination of the word “Bearer” (in single quotes!) and the OAuthToken context value we created above.

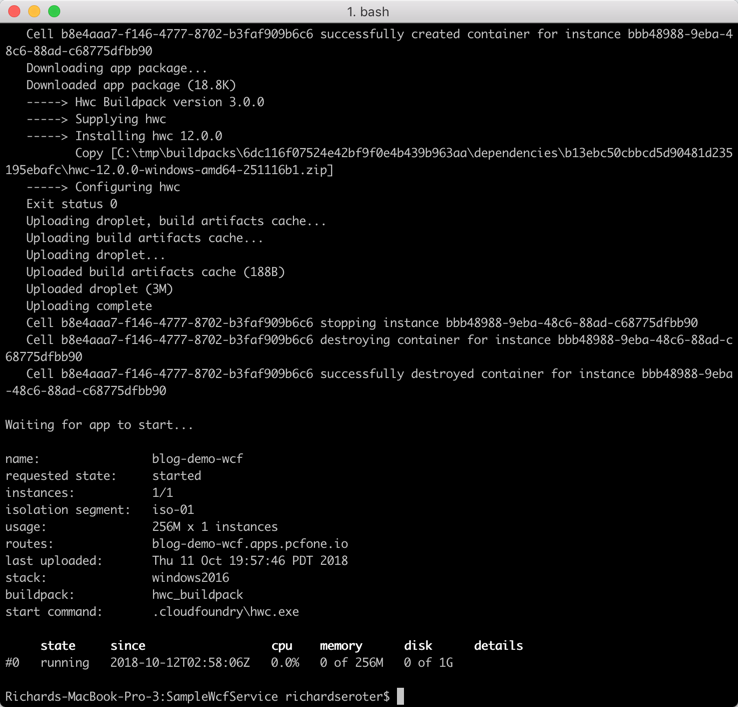

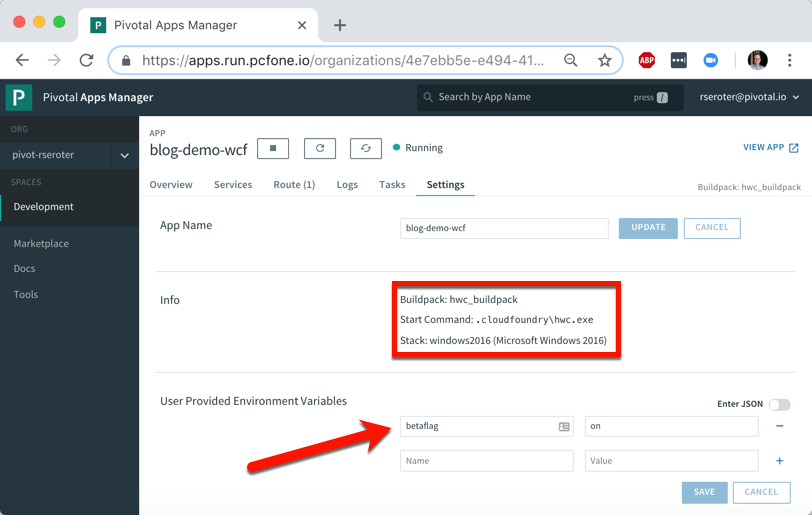

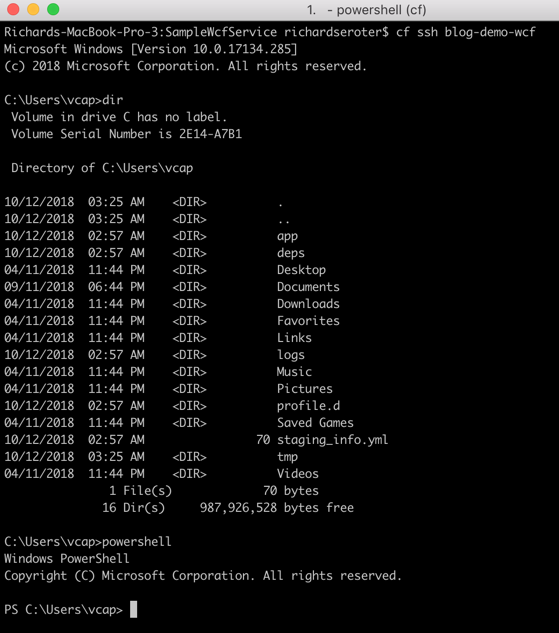

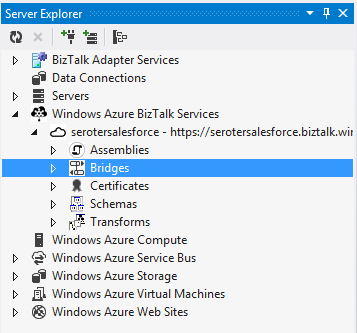

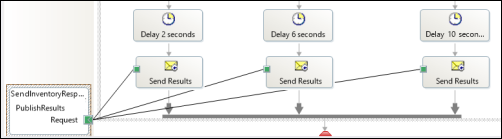

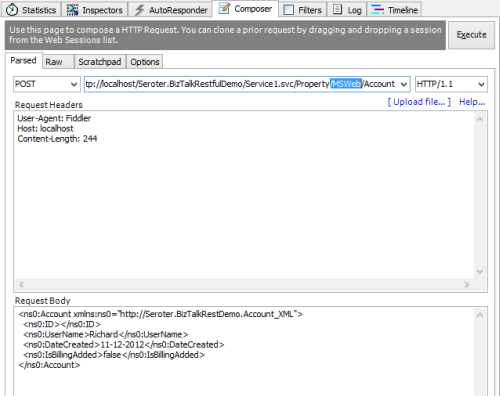

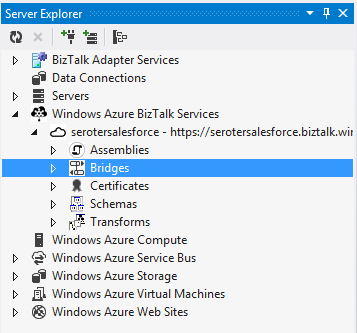
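The two route actions boil down to a pair of HTTP headers. Here’s a small Python sketch of the values they should produce. It assumes `application/xml` as the content type (the exact value isn’t given above) and a context bag holding `OAuthToken`; `route_headers` is a hypothetical helper.

```python
def route_headers(properties):
    """Build the outbound headers that the connector's Route Actions set."""
    token = properties["OAuthToken"]
    return {
        # assumption: application/xml tells Salesforce the body is XML
        "Content-Type": "application/xml",
        # bearer scheme: the word Bearer, one space, then the access token
        "Authorization": "Bearer " + token,
    }
```

Whatever expression you build in Route Actions, the Authorization value needs exactly one space between `Bearer` and the token. That’s the `Bearer <token>` form defined for OAuth 2.0 bearer tokens (RFC 6750).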

At this point, I had a finished message flow itinerary and deployed the project to a running instance of BizTalk Services. Now to test it. I first tested it by putting a Service Bus Queue at the beginning of the flow and pumping messages through. After the 20th vague error message, I decided to crack this nut open. I installed the BizTalk Services Explorer extension from the Visual Studio Gallery. This tool promises to aid in debugging and management of BizTalk Services resources and is actually pretty handy. It’s also not documented at all, but documentation is for sissies anyway.

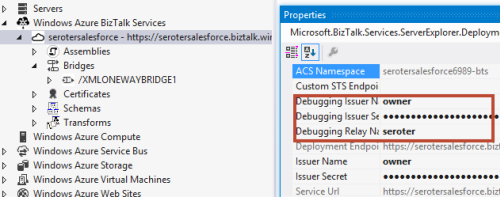

Once installed, you get a nice little management interface inside the Server Explorer view in Visual Studio.

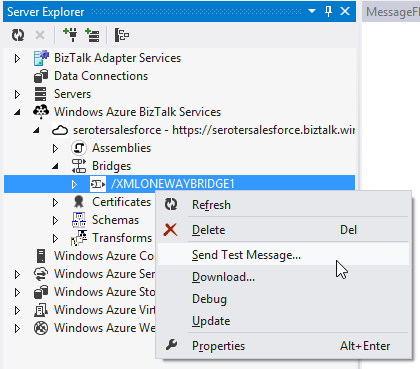

I could just send a test message in (and specify the payload myself), but that’s pretty much the same as what I was doing from my own client application.

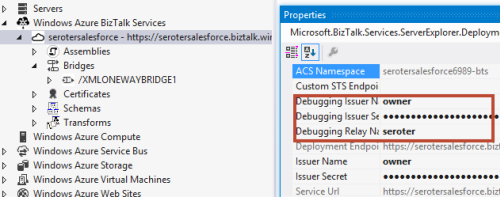

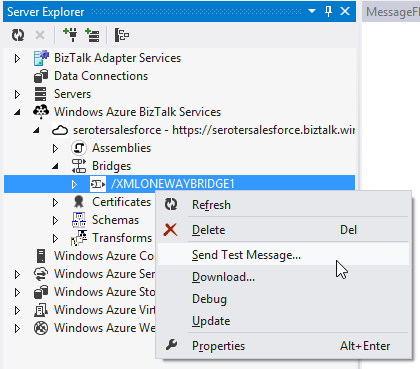

No, I wanted to see inside the process a bit. First, I set up the appropriate credentials for calling the bridge endpoint. Do NOT try to use the debugging function if you have a Queue or Topic as your input channel! It only works with Relay input.

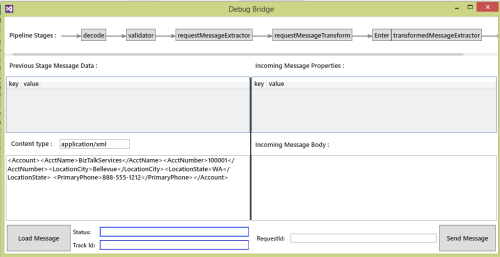

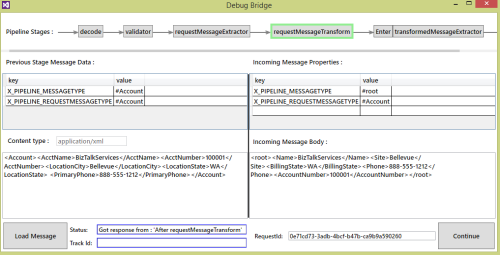

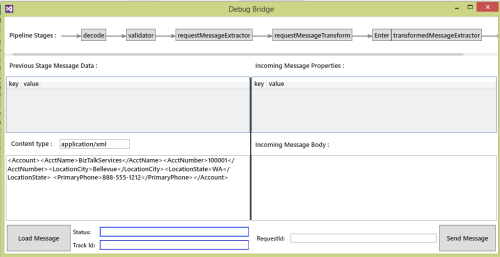

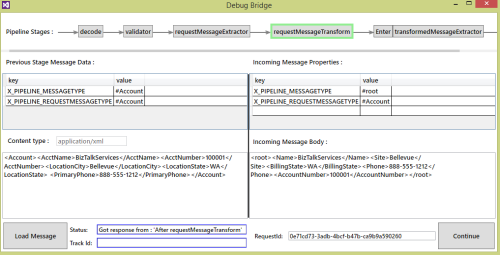

I then right-clicked the bridge and chose “Debug.” After entering my source XML, I submitted the initial message into the bridge. This tool shows you each stage of the bridge as well as the corresponding payload and context properties.

At the Transform stage, I could see that my message was being correctly mapped to the Salesforce-ready structure.

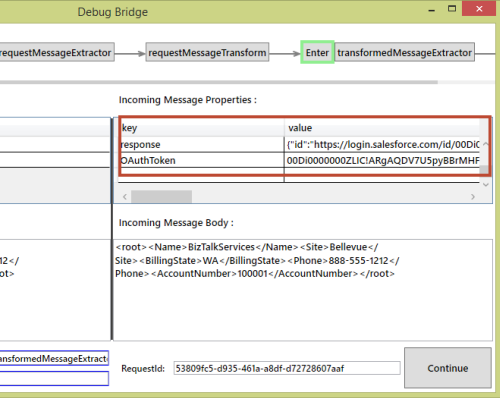

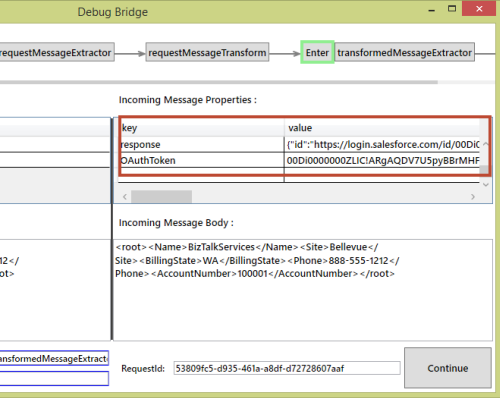

After the Enrich stage – where we had our custom code callout – I saw my new context values, including the OAuth token.

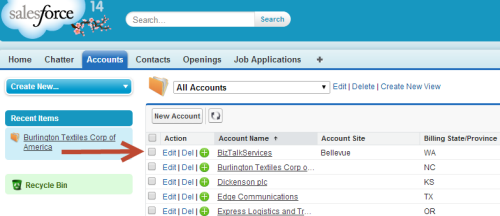

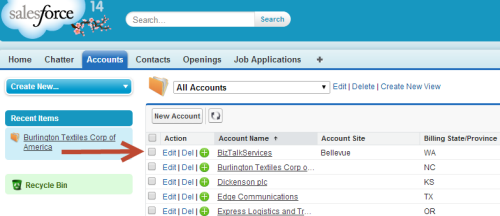

The whole process completes with an error, only because Salesforce returns an XML response and I don’t handle it. Checking Salesforce showed that my new account definitely made it across.
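If you did want to handle that response instead of letting the flow error out, here’s a minimal Python sketch of reading it. It assumes the XML body carries `id` and `success` elements directly under its root element, mirroring the fields of the JSON create response. I haven’t confirmed the exact XML shape, and `parse_create_response` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def parse_create_response(response_xml):
    """Return (record_id, success) from a Salesforce create response.

    Assumes <id> and <success> sit directly under the root element,
    whatever that root happens to be named.
    """
    root = ET.fromstring(response_xml)
    record_id = root.findtext("id")
    success = (root.findtext("success") or "").strip().lower() == "true"
    return record_id, success
```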

This took me longer than I thought, just given the general newness of the platform and lack of deep documentation. Also, my bridge occasionally flakes out because it seems to “forget” the authorization property configuration values that are part of the bridge definition. I had to redeploy my project to make it “remember” them again. I’m sure it’s a “me” problem, but there may be some best practices on custom code properties that I don’t know yet.

Now that you’ve seen how to extend BizTalk Services, hopefully you can use this same flow when sending messages to all sorts of SaaS systems.