Welcome to the 29th interview in my never-ending series of chats with thought leaders in the “connected systems” space. This month, I snagged the legendary Jon Fancey, who is an instructor for Pluralsight, co-founder of UK-based consulting shop Affinus, a Microsoft MVP, and a well-regarded speaker and author.

On to the questions!

Q: During the recent MVP Summit, you and I spoke about some use cases that you have seen for Windows Server AppFabric and the WCF Routing Service. How do you see companies trying to leverage these technologies?

A: I think both provide a really useful set of technologies for your toolbox. In particular I like the Routing Service, as it can sometimes really get you out of a hole. A couple of examples illustrate where it’s great. The first is where protocol translation is necessary. A subtle example of this is where you need your Silverlight-based app to call a back-end Web service that uses a binding Silverlight doesn’t support. Even though things improved a little in SL4, it still doesn’t support all of WCF’s bindings, so you’re out of luck if you don’t own the service you need to call. Put the WCF Routing Service in as an intermediary, however, and it can happily solve this problem by using a basic HTTP binding on the SL side and anything you need on the service side. It also solves the issue of having to put files (such as clientaccesspolicy.xml) in the IIS site’s root, as this can be done on the routing Web server instead. Of course it won’t work in all circumstances, but you’d be surprised how often it solves a problem. The second example is a common one I see, where customers just want routing without all the bells and whistles of something like BizTalk. The Routing Service has some neat features around failures and retries, as well as providing high-performance rules-based message routing. It even allows you to put your own logic in the router via filters if you need to.
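As a rough illustration of the protocol-translation scenario Jon describes, a Routing Service configuration might look something like the sketch below. This is not taken from Jon's projects: the backend addresses, the choice of `wsHttpBinding` for the back-end service, and the names `routingBehavior`, `routingTable`, `matchAll`, `backend`, `backendStandby`, and `backendBackups` are all assumptions made for the example. The router exposes a `basicHttpBinding` endpoint that Silverlight can call, forwards every message to the back-end service, and falls back to a second endpoint if the first cannot be reached.

```xml
<system.serviceModel>
  <services>
    <!-- The router itself: a basic HTTP endpoint that a Silverlight client can call -->
    <service name="System.ServiceModel.Routing.RoutingService"
             behaviorConfiguration="routingBehavior">
      <endpoint address=""
                binding="basicHttpBinding"
                contract="System.ServiceModel.Routing.IRequestReplyRouter" />
    </service>
  </services>

  <behaviors>
    <serviceBehaviors>
      <behavior name="routingBehavior">
        <!-- Points the router at the filter table defined below -->
        <routing filterTableName="routingTable" />
      </behavior>
    </serviceBehaviors>
  </behaviors>

  <client>
    <!-- Hypothetical back-end service using a binding Silverlight does not support -->
    <endpoint name="backend"
              address="http://backend.example.com/OrderService.svc"
              binding="wsHttpBinding"
              contract="*" />
    <!-- Hypothetical standby endpoint, tried if the primary fails -->
    <endpoint name="backendStandby"
              address="http://standby.example.com/OrderService.svc"
              binding="wsHttpBinding"
              contract="*" />
  </client>

  <routing>
    <filters>
      <!-- Matches every message; other filter types allow rules-based routing -->
      <filter name="matchAll" filterType="MatchAll" />
    </filters>
    <filterTables>
      <filterTable name="routingTable">
        <add filterName="matchAll" endpointName="backend" backupList="backendBackups" />
      </filterTable>
    </filterTables>
    <backupLists>
      <backupList name="backendBackups">
        <add endpointName="backendStandby" />
      </backupList>
    </backupLists>
  </routing>
</system.serviceModel>
```

Because the router is hosted on its own Web server in this sketch, the clientaccesspolicy.xml file would sit in that site's root rather than on the back-end service's server.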

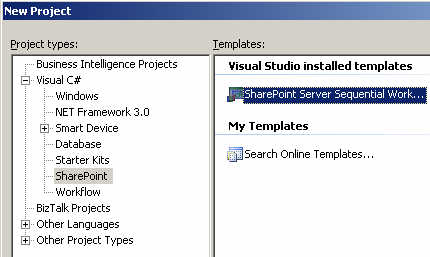

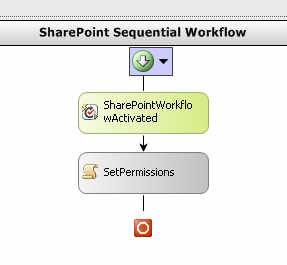

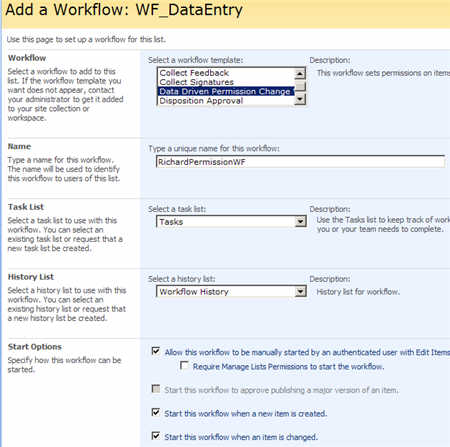

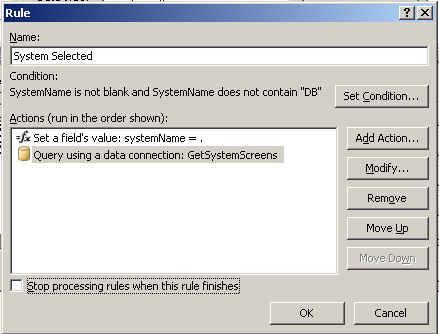

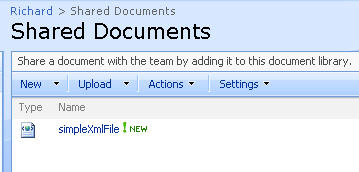

Q: You’ve been doing a fair amount of work with SharePoint in recent years. In your experience, what are some of the most common types of “integrations” that people do from a SharePoint environment? Where have you used BizTalk to accommodate these, and where do you use other technologies?

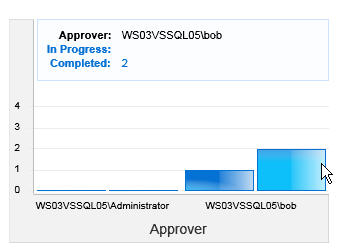

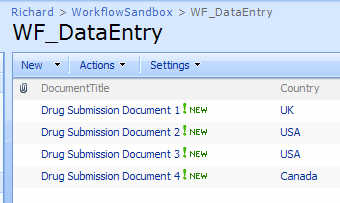

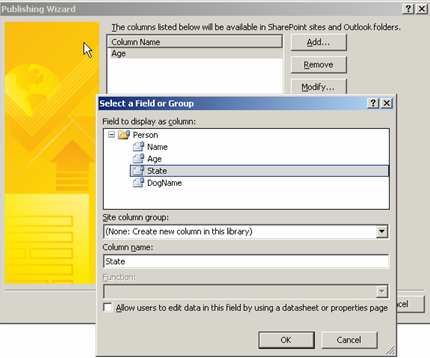

A: One great example of BizTalk and SharePoint together is with BizTalk’s BAM (Business Activity Monitoring). Although BizTalk provides its own BAM portal, it doesn’t really provide the functionality most customers require. The ability to create data mash-ups using out-of-the-box Web parts in SharePoint 2010 and the Business Connectivity Services (BCS) feature is great. Not only that, but in 2010 it’s now also possible to consume the BizTalk WCF adapters from SharePoint, making connectivity to back-end systems easier than ever for both read and write scenarios. It even enables taking data offline to Office clients such as Outlook, so that updates made on the client can be resynchronized later with the back-end system or data source.

Q: In your experience as an instructor, would you say that BizTalk Server is one of the more daunting products for someone to learn? If so, why is that? Are there other products from Microsoft with a similar learning curve?

A: I’d say that nothing should be daunting to learn with the right instructor and training materials ;). Seriously though, when I started getting into WSS 3.0/MOSS 2007 it reminded me a lot of my first experiences with BizTalk Server 2004, not least because it was the third version of the product, which is traditionally where everything comes together into a great product. I found a dearth of good resources out there to help me, and knowledge really was hard won. With 2010 things have improved enormously, although the size of the SharePoint feature set does make it daunting to newcomers. The key with any new technology, if you really want to be effective in it, is to understand it from the ground up: to understand the “why” as well as the “how”. Certainly Pluralsight’s SharePoint Fundamentals course and the On Demand content we have take this approach.

Q [stupid question]: My company recently barred people from smoking anywhere on the campus. While I applaud the effort, it caused a nefarious, capitalist idea to spring to my mind. I could purchase a small school bus to drive around our campus. For $2, people can get on and smoke their brains out. I call it the “Smoke Bus.” Ignoring logistical challenges (e.g. the driver would probably die of cancer within a week), this seems like a moral loser, but a money-making winner. What ideas do you have for something that may be of questionable ethics but a sure-fire success?

A: How about giving all your employees unlimited free sugary caffeinated drinks – oh, wait a minute…

Thanks for joining us, Jon!