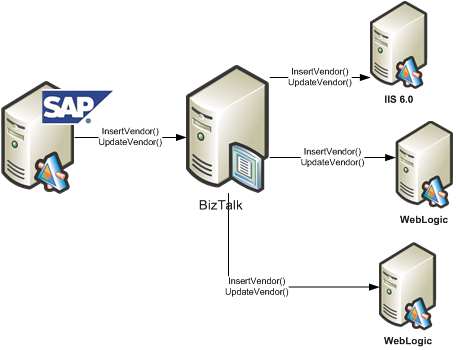

I recently showed how one could use RSSBus to generate RSS feeds for BizTalk service metrics on an application-by-application basis. The last mile, for me, was getting security applied to a given feed. I only have a single file that generates all the feeds, but I still need to apply role-based security constraints on the data.

This was a fun exercise. First, I had to switch my RSSBus installation to use Windows authentication, vs. the Forms authentication that the default installation uses. Next I removed the “anonymous access” capabilities from the IIS web site virtual directory. I need those steps done first because I plan on checking to see if the calling user is in the Active Directory group associated with a given BizTalk application.
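For reference, the authentication switch is typically a small change in the site's web.config. The fragment below is a sketch that assumes RSSBus runs as a standard ASP.NET application. The `<authorization>` rule is an optional extra, not something I described above: it rejects anonymous callers at the ASP.NET level in addition to the IIS setting.

```xml
<configuration>
  <system.web>
    <!-- Replace the default Forms mode with Windows (integrated) authentication -->
    <authentication mode="Windows" />
    <!-- Optional: reject unauthenticated callers; "?" means anonymous users -->
    <authorization>
      <deny users="?" />
    </authorization>
  </system.web>
</configuration>
```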

Now the interesting part. RSSBus allows you to generate custom “formatters” for presenting data in the feed. In my case, I have a formatter which does a security check. Their great technical folks provided me a skeleton formatter (and way too much personal assistance!) which I’ve embellished a bit.

First off, I have a class which implements the RSSBus formatter interface.

    public class checksecurity : nsoftware.RSSBus.RSBFormatter

Next, I need to implement the required operation, “Format”, which is where I’ll check the security credentials of the caller.

    public string Format(string[] value, string[] param)
    {
        string appname = "not_defined";
        string username = "anonymous";
        bool hasAccess = false;

        //check inbound params for null or empty
        if (value != null && value.Length > 0 && value[0] != null)
        {
            appname = value[0];

            //make sure there is a web context before touching the caller's identity
            if (HttpContext.Current != null)
            {
                //grab username of RSS caller
                username = HttpContext.Current.User.Identity.Name;

                //check cache
                if (HttpContext.Current.Cache["BizTalkAppMapping"] == null)
                {
                    //inflate object from XML config file
                    BizTalkAppMappingManager appMapping = LoadBizTalkMappings();

                    //read role associated with input BizTalk app name
                    string mappedRole = appMapping.BizTalkMapping[appname];

                    //check access for this user
                    hasAccess = HttpContext.Current.User.IsInRole(mappedRole);

                    //pop object into cache with file dependency
                    System.Web.Caching.CacheDependency fileDep =
                        new System.Web.Caching.CacheDependency
                            (@"BizTalkApplicationMapping.xml");
                    HttpContext.Current.Cache.Insert
                        ("BizTalkAppMapping", appMapping, fileDep);
                }
                else
                {
                    //read object and allowable role from cache
                    string mappedRole =
                        ((BizTalkAppMappingManager)
                            HttpContext.Current.Cache["BizTalkAppMapping"])
                            .BizTalkMapping[appname];

                    //check access for this user
                    hasAccess = HttpContext.Current.User.IsInRole(mappedRole);
                }
            }
        }

        //deny by default: any path that did not grant access ends here
        if (!hasAccess)
            throw new RSBException("access_violation", "Access denied.");

        //no need to return any value
        return "";
    }

A few things to note in the code above. I call a function named “LoadBizTalkMappings” which reads an XML file from disk (BizTalkApplicationMapping.xml), deserializes it into an object, and returns that object. That XML file contains name/value pairs of BizTalk application names and Active Directory domain groups. Notice that I use the “IsInRole” operation on the Principal object to discover if this user can view this particular feed. Finally, see that I’m using web caching with a file dependency. After the first load, my mapping object is read from cache instead of pulled from disk. When new applications come on board, or an AD group account changes, simply changing my XML configuration file will invalidate my cache and force a reload on the next RSS request. Neato.
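To make the mapping lookup and role check concrete, here is a minimal sketch that can run outside of IIS. Everything in it is an assumption for illustration: the XML layout, the `MappingLoader` helper, and the dictionary-backed shape of `BizTalkAppMappingManager` are hypothetical, since the real class and file format aren't shown here. It uses `GenericPrincipal` as a stand-in for the Windows principal that ASP.NET would supply.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Principal;
using System.Xml.Linq;

// Hypothetical shape of the mapping object (the real class is not shown in this post).
public class BizTalkAppMappingManager
{
    private readonly Dictionary<string, string> mapping =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

    // BizTalk application name -> Active Directory group
    public Dictionary<string, string> BizTalkMapping { get { return mapping; } }
}

public static class MappingLoader
{
    // Assumed XML layout (an illustration, not the actual config file):
    // <mappings><mapping app="Application1" role="CORP\BizTalkApp1Users" /></mappings>
    public static BizTalkAppMappingManager Parse(string xml)
    {
        BizTalkAppMappingManager manager = new BizTalkAppMappingManager();
        foreach (XElement m in XDocument.Parse(xml).Root.Elements("mapping"))
        {
            string app = (string)m.Attribute("app");
            string role = (string)m.Attribute("role");
            if (app != null && role != null)
                manager.BizTalkMapping[app] = role;
        }
        return manager;
    }

    // Returns false for unknown applications instead of throwing.
    public static bool HasAccess(IPrincipal user, BizTalkAppMappingManager manager, string appName)
    {
        string role;
        if (user == null || appName == null ||
            !manager.BizTalkMapping.TryGetValue(appName, out role))
            return false;
        return user.IsInRole(role);
    }
}
```

A caller could build a test user with `new GenericPrincipal(new GenericIdentity(@"CORP\alice"), new[] { @"CORP\BizTalkApp1Users" })` and pass it to `HasAccess`. Denying unknown application names is a design choice of this sketch; the formatter above would instead throw on a missing key.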

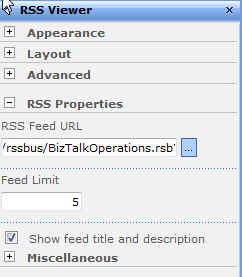

That’s all well and good, but how do I use this thing? First, in my RSSBus web directory, I created an “App_Code” directory and put my class files (formatter and BizTalkAppMappingManager) in there. Then they get dynamically compiled upon web request. The next step is tricky. I originally had my formatter called within my RSSBus file where my input parameters were set. However, I discovered that due to my RSS caching setup, once the feed was cached, the security check was bypassed! So, instead, I put my formatter request in the RSSBus cache statement itself. Now I’m assured that it’ll run each time.
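The ordering problem is easier to see in isolation. The sketch below is not RSSBus code; `FeedCache` and its members are hypothetical names used only to illustrate the principle that the authorization check has to run on every request, before the cache lookup, rather than only when the feed is generated.

```csharp
using System;
using System.Collections.Generic;

public class FeedCache
{
    private readonly Dictionary<string, string> cache = new Dictionary<string, string>();

    // Counts how many times a feed was actually rebuilt (cache misses).
    public int Generations;

    public string GetFeed(string app, string user, Func<string, string, bool> isAuthorized)
    {
        // Runs on every call, so a cached feed is never handed to an unauthorized caller.
        if (!isAuthorized(user, app))
            throw new UnauthorizedAccessException("Access denied.");

        string feed;
        if (!cache.TryGetValue(app, out feed))
        {
            // Cache miss: build a placeholder feed and remember it.
            Generations++;
            feed = "<rss><!-- metrics for " + app + " --></rss>";
            cache[app] = feed;
        }
        return feed;
    }
}
```

If the check lived inside the cache-miss branch instead, the first authorized caller would populate the cache and everyone after that would get the feed without being checked, which is the behavior I ran into.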

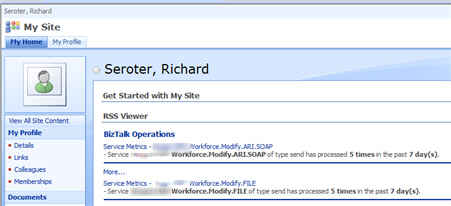

So what do I have now? I have RSS URLs such as http://server/rssbus/BizTalkOperations.rsb?app=Application1 which will only return results for “Application1” if the caller is in the AD group defined in my XML configuration file. Even though I have caching turned on, the RSSBus engine checks my security formatter prior to returning the cached RSS feed. Cool.

Is this the most practical application in the world? Nah. But, RSS can play an interesting role inside enterprises when tracking operational performance and this was a fun way to demonstrate that. And now, I have a secure way of allowing business personnel to see the levels of activity through the BizTalk systems they own. That’s not a bad thing.