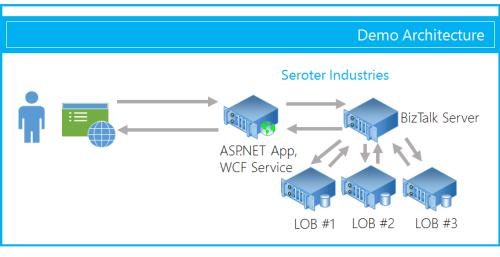

The Windows Azure EAI Bridges are dead. Long live BizTalk Services! Initially released last year as a “lab” project, the Service Bus EAI Bridges were a technology for connecting cloud and on-premises endpoints through an Azure-hosted broker. This technology has been rebranded as “Windows Azure BizTalk Services,” upgraded, and is now available as a public preview. In this blog post, I’ll give you a quick tour of the developer experience.

First off, what actually *is* Windows Azure BizTalk Services (WABS)? Is it BizTalk Server running in the cloud? Does it run on-premises? Check out the announcement blog posts from the Windows Azure and BizTalk teams, respectively, for more. But basically, it’s a separate technology from BizTalk Server that’s meant to be highly complementary. Even though it uses a few of the same types of artifacts, such as schemas and maps, they aren’t interchangeable. For example, WABS maps don’t run in BizTalk Server, and vice versa. Also, there’s no concept of long-running workflow (i.e. orchestration), and none of the value-added services that BizTalk Server provides (e.g. Rules Engine, BAM). All that said, this is still an important technology as it makes it quick and easy to connect a variety of endpoints regardless of location. It’s a powerful way to expose line-of-business apps to cloud systems, and the Windows Azure hosting model makes it possible to rapidly scale solutions. Check out the pricing FAQ page for more details on the scaling functionality, and the reasonable pricing.

Let’s get started. When you install the preview components, you’ll get a new project type in Visual Studio 2012.

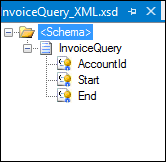

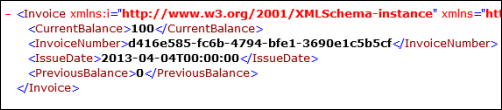

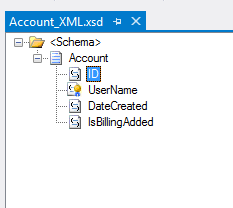

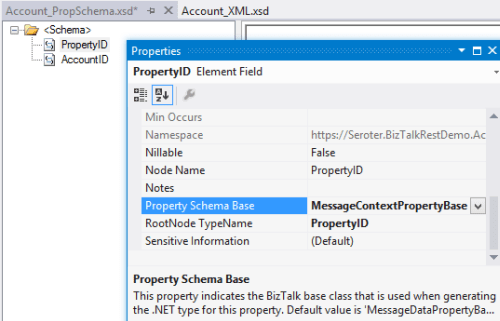

Each WABS project can contain a single “bridge configuration” file. This file defines the flow of data between source and destination endpoints. Once you have a WABS project, you can add XML schemas, flat-file schemas, and maps.

The WABS Schema Editor looks identical to the BizTalk Server Schema Editor and lets you define XML or flat file message structures. While the right-click menu promises the ability to generate and validate file instances, my pre-preview version of the bits only let me validate messages, not generate sample ones.

The WABS schema mapper is very different from the BizTalk Mapper. And that’s a good thing. The UI has subtle alterations, but the more important change is in the palette of available “functoids” (components for manipulating data). First, you’ll see more sophisticated looping and logical expression handling. This includes a ForEach Loop and, finally, an If-Then-Else Expression option.

The concept of “lists” is also entirely new. You can populate, persist, and query lists of data and create powerfully complex mappings between structures.
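To make the looping, branching, and list ideas a bit more concrete, here is a rough Python sketch of the kind of logic such a map expresses. This is not WABS code and says nothing about how the Mapper runs internally; the `Order`, `Item`, `Shipment`, and `Line` element names and the `map_order` function are all made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical source message; the element and attribute names are illustrative only.
SOURCE_XML = """
<Order id="1001">
  <Item sku="A1" qty="2"/>
  <Item sku="B2" qty="0"/>
  <Item sku="C3" qty="1"/>
</Order>
"""


def map_order(source_xml: str) -> ET.Element:
    """Turn an <Order> into a <Shipment>, looping and branching per item."""
    source = ET.fromstring(source_xml.strip())
    target = ET.Element("Shipment", {"orderId": source.get("id", "")})

    # "List" analogue: collect values during the loop, then query them afterwards.
    shipped_skus = []

    # ForEach analogue: emit one <Line> for every source <Item>.
    for item in source.findall("Item"):
        sku = item.get("sku", "")
        line = ET.SubElement(target, "Line", {"sku": sku})

        # If-Then-Else analogue: choose the status from the quantity.
        if int(item.get("qty", "0")) > 0:
            line.set("status", "ship")
            shipped_skus.append(sku)
        else:
            line.set("status", "backorder")

    # Query the list once the loop is done.
    target.set("shippedCount", str(len(shipped_skus)))
    return target
```

Calling `map_order(SOURCE_XML)` returns a `Shipment` element with one `Line` per item, each marked `ship` or `backorder`, plus a count derived from the accumulated list.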

Finally, there are some “miscellaneous” operations that introduce small – but helpful – capabilities. These functoids let you grab a property from the message’s context (metadata), generate a random ID, and even embed custom C# code into a map. I seem to recall that custom code was excluded from the EAI Bridges preview, and many folks expressed concern that this would limit the usefulness of these maps for tricky, real-world scenarios. Now, it looks like this is the most powerful data mapping tool that Microsoft has ever produced. I suspect that an entire book could be written about how to properly use this Mapper.

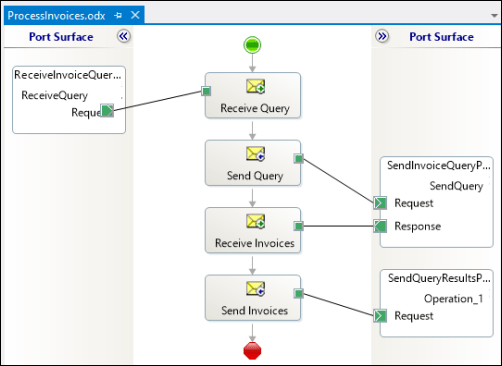

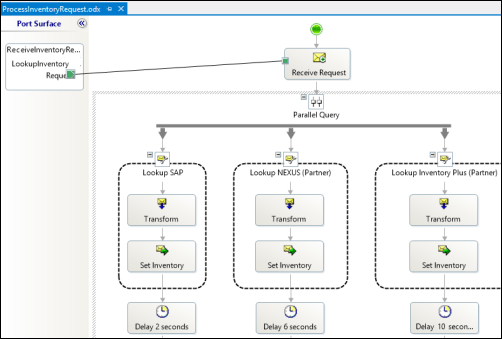

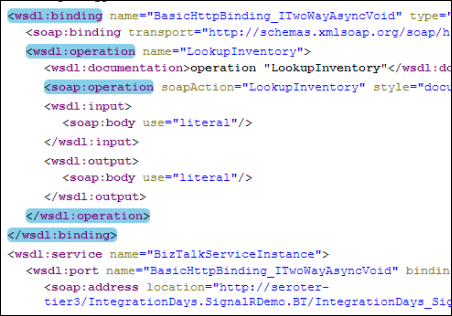

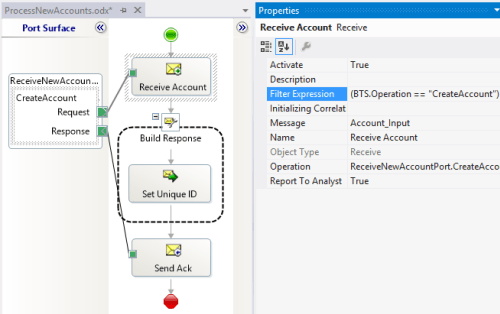

Next up, let’s take a look at the bridge configuration and what source and destination endpoints are supported. The toolbox for the bridge configuration file shows three different types of bridges: XML One-Way Bridge, XML Request-Reply Bridge, and Pass-Through Bridge.

You’d use each depending on whether you were doing synchronous or asynchronous XML messaging, or any flat file transmission. To get data into a bridge, today you can use HTTP, FTP, or SFTP. Notice that “HTTP” doesn’t show up in the toolbox’s list of sources, because each bridge automatically has a Windows Azure ACS-secured HTTP endpoint associated with it.

While the currently available set of sources is a bit thin, the destination options are solid. You can consume web services, Service Bus Relay endpoints, Service Bus Queues / Topics, Windows Azure Blobs, and FTP and SFTP endpoints.

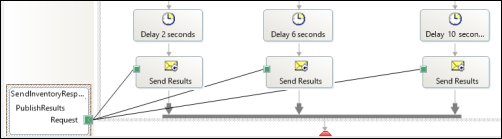

A given bridge configuration file will often contain a mix of these endpoints. For instance, consider a case where you want to route a message to one of three different endpoints based on some value in the message itself. Also imagine wanting to do a special transformation heading to one endpoint, and not the others. In the configuration below, I’m chaining together XML bridges to route to the Service Bus Queue, and directly routing to either the Service Bus Topic or Relay Service based on the message content.
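If content-based routing is new to you, the idea can be sketched in a few lines of Python. This only illustrates the pattern, not how WABS evaluates its route filters; the `Region` and `Priority` elements, the destination names, and the `route` function are assumptions made up for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical routing table: (predicate, destination name). The first match wins.
ROUTES = [
    (lambda msg: msg.findtext("Region") == "EU", "ServiceBusQueue"),
    (lambda msg: msg.findtext("Priority") == "High", "ServiceBusTopic"),
]

# Fallback destination when no rule matches (also an assumption).
DEFAULT_DESTINATION = "RelayService"


def route(message_xml: str) -> str:
    """Pick a destination name based on values found in the message body."""
    msg = ET.fromstring(message_xml)
    for predicate, destination in ROUTES:
        if predicate(msg):
            return destination
    return DEFAULT_DESTINATION
```

Each rule inspects the message itself, which is why a single inbound endpoint can fan out to a queue, a topic, or a relay without the sender knowing which one it will hit.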

An individual bridge has a number of stages that a message passes through. Double-clicking a bridge reveals steps for identifying, decoding, validating, enriching, encoding, and transforming messages.
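Conceptually, a bridge behaves like a pipeline: each stage takes the message, does one job, and hands it on. Here is a minimal Python sketch of that shape. The stage order, the `Message` class, and the three example stages are assumptions for illustration and are not taken from the WABS runtime.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    raw: bytes                      # bytes as received from the source
    body: str = ""                  # decoded text, filled in by the decode stage
    context: Dict[str, str] = field(default_factory=dict)  # metadata properties


Stage = Callable[[Message], Message]


def decode(msg: Message) -> Message:
    """Turn the raw bytes into text (UTF-8 assumed for this sketch)."""
    msg.body = msg.raw.decode("utf-8")
    return msg


def validate(msg: Message) -> Message:
    """Reject anything that is not a well-formed <Order> document."""
    root = ET.fromstring(msg.body)  # raises ParseError on malformed XML
    if root.tag != "Order":
        raise ValueError(f"unexpected root element: {root.tag}")
    return msg


def transform(msg: Message) -> Message:
    """Stand-in for a map: rename the root element to <Shipment>."""
    root = ET.fromstring(msg.body)
    root.tag = "Shipment"
    msg.body = ET.tostring(root, encoding="unicode")
    return msg


def run_bridge(msg: Message, stages: List[Stage]) -> Message:
    """Pass the message through each stage in order."""
    for stage in stages:
        msg = stage(msg)
    return msg
```

For example, `run_bridge(Message(raw=b'<Order id="7"/>'), [decode, validate, transform])` yields a message whose body is a `Shipment` document, while a message with a different root element fails at the validate stage and never reaches the transform.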

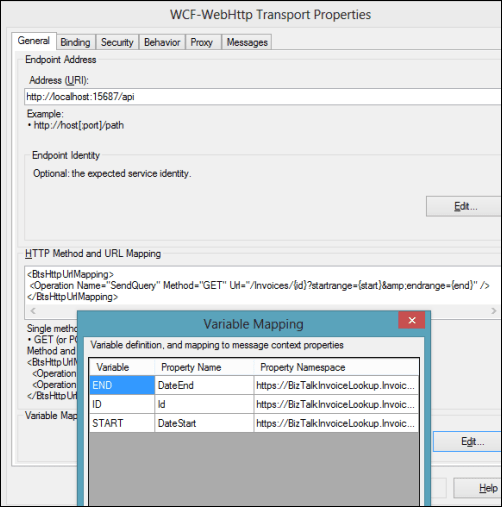

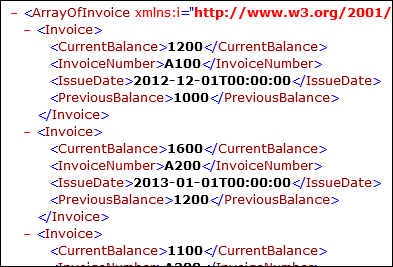

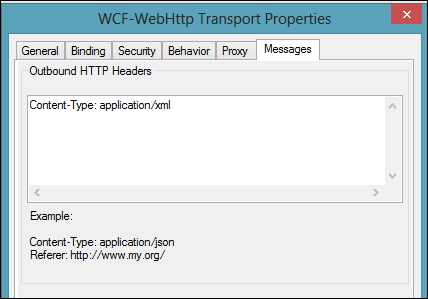

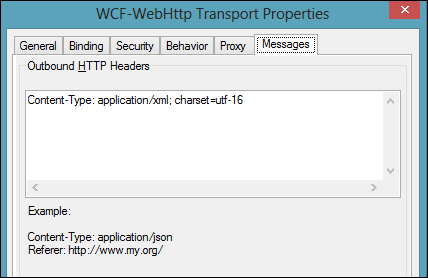

An individual step exposes relevant configuration properties. For instance, the “Enrich” stage of a bridge lets you choose a way to populate data in the outbound message’s metadata (context) properties. Options include pulling values from the source message’s SOAP or HTTP headers, XPath against the source message body, lookup to a Windows Azure SQL database, and more.
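As a rough picture of what enrichment amounts to, the Python sketch below fills a context dictionary from two of those kinds of sources: HTTP headers and an XPath expression over the body. The property names, the `X-Correlation-Id` header, the `./Customer/Id` path, and the `enrich` function are hypothetical; the real stage is configured through the designer, not written as code like this.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

# Hypothetical property definitions: (property name, source kind, header name or XPath).
PROPERTY_DEFS: List[Tuple[str, str, str]] = [
    ("CustomerId", "xpath", "./Customer/Id"),
    ("CorrelationId", "header", "X-Correlation-Id"),
]


def enrich(body_xml: str, headers: Dict[str, str],
           defs: List[Tuple[str, str, str]] = PROPERTY_DEFS) -> Dict[str, str]:
    """Build context (metadata) properties from the message body and headers."""
    root = ET.fromstring(body_xml)
    context: Dict[str, str] = {}
    for name, kind, key in defs:
        if kind == "xpath":
            # ElementTree supports only a limited XPath subset.
            value = root.findtext(key)
        elif kind == "header":
            value = headers.get(key)
        else:
            raise ValueError(f"unsupported source kind: {kind}")
        # Skip properties whose source value is missing.
        if value is not None:
            context[name] = value
    return context
```

The resulting properties travel alongside the message, so a later routing rule can test `CustomerId` without re-parsing the body.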

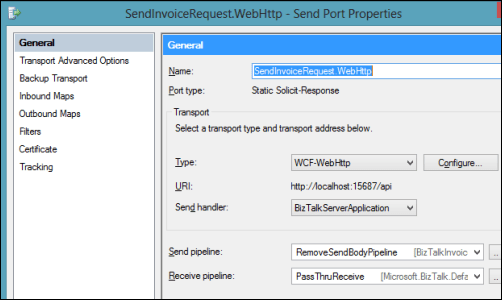

When a bridge configuration is complete and ready for deployment, simply right-click the Visual Studio project, choose Deploy, and fill in valid credentials for the WABS preview.

Wrap Up

This is definitely preview software, as there are a number of things we’ll likely see added before it’s ready for production use (e.g. enhanced management). However, it’s a good time to start poking around and getting a feel for when you might use this. On a broad scale, you COULD choose to use this instead of something like MuleSoft’s CloudHub to do pure cloud-to-cloud integration, but WABS is drastically less mature than what MuleSoft has to offer. Moving forward, it’d be great to see a durable workflow component and additional sources added, and Microsoft really needs to start baking JSON support into more products from the get-go.

What do you think? Plan on trying this out? Have ideas for where you could use it?