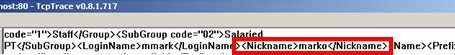

I recently answered a BizTalk newsgroup post where the fellow was asking how static objects would be shared amongst BizTalk components. I stated that a correctly built singleton should be available to all artifacts in the host’s AppDomain. However, I wasn’t 1000% sure what that looked like, so I had to build out an example.

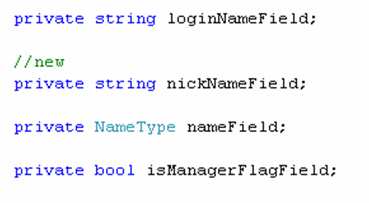

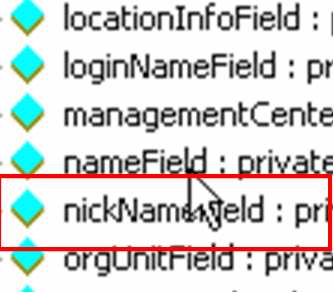

When I say a “correctly built singleton”, I mean a thread-safe static object. My particular singleton for this example looks like this:

```csharp
using System;

public class CommonLogger
{
    // Static field initializers run once per AppDomain, and the CLR
    // guarantees that this initialization is thread-safe.
    private static readonly CommonLogger singleton =
        new CommonLogger();

    private int Id;
    private string appDomainName;

    // Explicit static constructor: stops the C# compiler from marking
    // the type beforefieldinit, so initialization stays lazy.
    static CommonLogger() { }

    private CommonLogger()
    {
        appDomainName = AppDomain.CurrentDomain.FriendlyName;

        System.Random r = new Random();
        // set "unique" id
        Id = r.Next(0, 100);

        // trace
        System.Diagnostics.Debug.WriteLine
            ("[AppDomain: " + appDomainName + ", ID: " +
            Id.ToString() + "] Logger started up ... ");
    }

    // Accessor
    public static CommonLogger Instance
    {
        get
        {
            return singleton;
        }
    }

    public void LogMessage(string msg)
    {
        System.Diagnostics.Debug.WriteLine
            ("[AppDomain: " + appDomainName + "; ID: " +
            Id.ToString() + "] Message logged ... " + msg);
    }
}
```

I also built a “wrapper” class which retrieves the “Instance” object for BizTalk artifacts that couldn’t access the Instance directly (e.g. maps, orchestration).
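The wrapper itself isn't shown in this post, so here is a minimal sketch of what such a class might look like. The class name `CommonLoggerWrapper`, the static method, and the choice to return the message are all assumptions for illustration; the only real requirement is a public method with simple parameter types that forwards to `CommonLogger.Instance`. It is meant to be compiled into the same assembly as the `CommonLogger` class above.

```csharp
// Hypothetical wrapper (name and shape are assumptions, not the original code).
// Compile alongside the CommonLogger class shown earlier.
public class CommonLoggerWrapper
{
    // Forwards the call to the shared singleton. Returning the message
    // is an assumption, made so a caller that expects an output value
    // (such as a map) has something to consume.
    public static string LogMessage(string msg)
    {
        CommonLogger.Instance.LogMessage(msg);
        return msg;
    }
}
```

Because the wrapper holds no state of its own, every caller in the same AppDomain still ends up on the one `CommonLogger` instance.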

Next, I built a custom pipeline component (send or receive) where the “Execute” operation makes a call to my CommonLogger component. That code is fairly straightforward and looks like this …

```csharp
public IBaseMessage Execute(IPipelineContext pc, IBaseMessage inmsg)
{
    // call singleton logger
    CommonLogger.Instance.LogMessage
        ("calling from " + _PipelineType + " component");

    // this way, it's a passthrough pipeline component
    return inmsg;
}
```

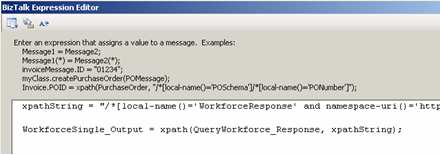

Next, I created a simple map containing a Scripting functoid that called out to my CommonLogger. Because the Scripting functoid can’t call an operation on my Instance property, I used the “Wrapper” class which executes the operation on the Instance.

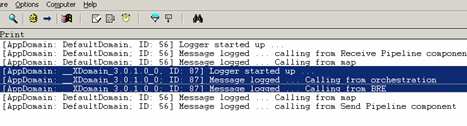

Then I went ahead and created both a send and receive pipeline, each with my custom “logging” component built in. After deploying the BizTalk projects, I added my map and pipeline to both the receive and send port. So, there are four places that should be interacting with my CommonLogger object. What’s the expected result of this run? I should see “Logger started up” (constructor) once, and then a bunch of logged messages using the same instance. Sure enough …

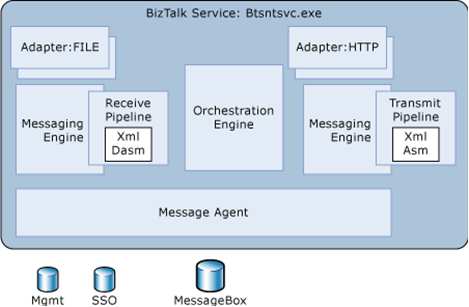

Nice. What happens if I throw orchestration into the mix? Would it share the object instantiated by the End Point Manager (EPM)? If you read Saravana’s post, you get the impression that every object in a single host should share an AppDomain, and thus static objects. I wasn’t convinced that this was the case.

I’ve also added a business rule to the equation, to see how that plays with the orchestration.

I bounced my host instance (thus flushing any cached objects), and reran my initial scenario (with orchestration/rules included):

Very interesting. The orchestration ran in a different AppDomain, and thus created its own CommonLogger instance. Given that XLANG is a separate subsystem within the BizTalk service, it's not hard to believe that it runs in a separate AppDomain.

If I run my scenario again, without bouncing the host instance, I would expect to see no new instantiations, and the existing objects being reused. The image below is the same as the previous one (first run), with the subsequent run in the same window.

Indeed, the maps/pipeline reused their singleton instance, and the orchestration/rules reused their particular instance. You can read all about creating your own named AppDomains for orchestrations in this MSDN documentation. It may be that, because I haven't configured a named AppDomain for this orchestration to run in, an ad-hoc one is being created and used. Either way, it seems that the EPM and XLANG subsystems run in different AppDomains within a given host instance.
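The underlying behavior (static fields are scoped to an AppDomain, not to the process) can be reproduced outside BizTalk. The following is a rough sketch of a .NET Framework console app, not anything BizTalk itself does: the `DomainScopedLogger` class, the `Touch` helper, and the "SecondDomain" name are made up for the demo, and `AppDomain.CreateDomain` is not supported on .NET Core or later.

```csharp
using System;

// Hypothetical stand-in for CommonLogger, writing to the console instead.
public class DomainScopedLogger
{
    private static readonly DomainScopedLogger singleton =
        new DomainScopedLogger();

    private readonly Guid id = Guid.NewGuid();

    static DomainScopedLogger() { }

    private DomainScopedLogger()
    {
        Console.WriteLine("[AppDomain: " +
            AppDomain.CurrentDomain.FriendlyName +
            ", ID: " + id + "] Logger started up ...");
    }

    public static DomainScopedLogger Instance
    {
        get { return singleton; }
    }

    public void LogMessage(string msg)
    {
        Console.WriteLine("[AppDomain: " +
            AppDomain.CurrentDomain.FriendlyName +
            "; ID: " + id + "] Message logged ... " + msg);
    }
}

public static class Program
{
    // Static so it can be invoked inside another AppDomain via DoCallBack.
    private static void Touch()
    {
        DomainScopedLogger.Instance.LogMessage("hello");
    }

    public static void Main()
    {
        // Two calls in the default domain share one instance.
        Touch();
        Touch();

        // A second AppDomain in the same process gets its own statics,
        // so the private constructor runs again there.
        AppDomain other = AppDomain.CreateDomain("SecondDomain");
        other.DoCallBack(new CrossAppDomainDelegate(Touch));
        AppDomain.Unload(other);
    }
}
```

Running this, I'd expect the "Logger started up" line to appear once per AppDomain, with a different ID in each, which mirrors what the pipeline/map and orchestration/rules traces show.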

I also experimented with moving my artifacts into different hosts. In this scenario, I moved my send port out of the shared host, and into a new host. Given that we’re now in an entirely different Windows service, I’d hardly expect any object sharing. Sure enough …

As you can see, three different instances of my singleton object now exist, each actively being used within its own AppDomain. Does this matter much? In most cases, not really. I'm still getting the value of caching and using a thread-safe singleton object. There just happens to be more than one instance being used by the BizTalk subsystems. That doesn't negate the value of the pattern. Still, it's valuable to know.
