One tricky aspect of consuming a web service managed by SOA Software is that the credentials used to call the service must be supplied explicitly in the calling code. So, I came up with a way to securely and efficiently manage many credentials using a single password stored in Enterprise Single Sign-On.

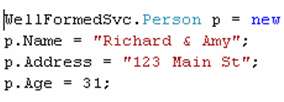

A web service managed by SOA Software may have many different policies attached. There are options for authentication, authorization, encryption, monitoring and much more. To spare the developers calling such services from that complexity, SOA Software provides a clean API that abstracts away the underlying policy requirements. This API speaks to the Gateway, which attaches all the headers needed to comply with the policy and then forwards the call to the service itself. The code that a service client would implement might look like this …

    Credential soaCredential =
        new Credential("soa user", "soa password");

    //Bridge is not required if we are not load balancing
    SDKBridgeLBHAMgr lbhamgr = new SDKBridgeLBHAMgr();
    lbhamgr.AddAddress("http://server:9999");

    //pass in credential and boolean indicating whether to
    //encrypt content being passed to Gateway
    WSClient wscl = new WSClient(soaCredential, false);
    WSClientRequest wsreq = wscl.CreateRequest();

    //This credential is for requesting (domain) user.
    //Verbatim string (@) so the backslash is not read as an escape.
    Credential requestCredential =
        new Credential(@"DOMAIN\user", "domain password");

    wsreq.BindToServiceAutoConfigureNoHALB("unique service key",
        WSClientConstants.QOS_HTTP, requestCredential);

The “Credential” object here doesn’t accept a Principal object or anything similar; it needs specific values entered. Hence my problem. Clearly, I’m not going to store clear-text values here. Given that I will have dozens of these service consumers, I hesitate to use Single Sign-On to store every individual set of credentials (even though my tool makes it much simpler to do so).

My solution? I decided to generate a single key (and salt) that is used to encrypt the username and password values. We were originally going to store these encrypted values in the code base, but realized that the credentials change between environments. So, I’ve created a database that stores the encrypted values. At no point are the credentials stored in clear text in the database, configuration files, or source code.

Let’s walk through each component of the solution.

Step #1

Create an SSO application to store the single password and salt used to encrypt/decrypt all the individual credential components. I used the SSO Configuration Store Application Manager tool to whip something up. Then upon instantiation of my “CryptoManager”, I retrieve those values from SSO and cache them in the singleton (thus saving the SSO roundtrip upon each service call).
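To make the caching idea concrete, here is a minimal sketch of a singleton that reads the password and salt once and keeps them in memory. The `ISecretStore` interface, the `InMemorySecretStore` stand-in, the `CryptoManagerSketch` name and the `"password"`/`"salt"` field names are all hypothetical; the real lookup against Enterprise Single Sign-On is not shown here.

```csharp
using System;

// Hypothetical abstraction over the Enterprise Single Sign-On lookup.
public interface ISecretStore
{
    string GetValue(string fieldName);
}

// In-memory stand-in, used only to make the sketch runnable.
public sealed class InMemorySecretStore : ISecretStore
{
    private readonly string password;
    private readonly string salt;

    // Counts lookups so the caching behavior can be observed.
    public int Reads { get; private set; }

    public InMemorySecretStore(string password, string salt)
    {
        this.password = password;
        this.salt = salt;
    }

    public string GetValue(string fieldName)
    {
        Reads++;
        return fieldName == "password" ? password : salt;
    }
}

public sealed class CryptoManagerSketch
{
    // Assumption: assigned once at startup, before Instance is first read.
    public static ISecretStore Store;

    // Lazy<T> runs the factory once, even under concurrent access.
    private static readonly Lazy<CryptoManagerSketch> instance =
        new Lazy<CryptoManagerSketch>(() => new CryptoManagerSketch(Store));

    public static CryptoManagerSketch Instance
    {
        get { return instance.Value; }
    }

    private readonly string ssoPassword;
    private readonly string ssoSalt;

    private CryptoManagerSketch(ISecretStore store)
    {
        if (store == null)
            throw new InvalidOperationException("Store must be set first.");

        // The only two round trips to the store for the process lifetime.
        ssoPassword = store.GetValue("password");
        ssoSalt = store.GetValue("salt");
    }

    public bool IsLoaded
    {
        get { return ssoPassword != null && ssoSalt != null; }
    }
}
```

Every later read of `CryptoManagerSketch.Instance` returns the same object, so the store is only queried during the first access.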

Step #2

I need a strong encryption mechanism to take the SOA Software service passwords and turn them into gibberish to the snooping eye. So, I built a class that encrypts a string (for design time), and then decrypts the string (for runtime). You’ll notice my usage of the ssoPassword and ssoSalt values retrieved from SSO. The encryption operation looks like this …

    //requires: using System; using System.IO;
    //          using System.Security.Cryptography; using System.Text;

    /// <summary>
    /// Symmetric encryption algorithm which uses a single password and
    /// salt securely stored in Enterprise Single Sign-On. There are four
    /// possible symmetric algorithms available in the .NET Framework
    /// (DES, Triple-DES, RC2, Rijndael/AES). Of these, Rijndael supports
    /// the longest key (256 bit) and is the strongest choice.
    /// For more on the Rijndael algorithm, see
    /// http://en.wikipedia.org/wiki/Rijndael
    /// </summary>
    /// <param name="clearString">Clear text value to encrypt</param>
    /// <returns>Base64 encoded cipher text</returns>
    public string EncryptStringValue(string clearString)
    {
        //convert input string to a byte array
        byte[] inputBytes = Encoding.Unicode.GetBytes(clearString);

        //using a salt makes it harder to guess the password.
        byte[] saltBytes = Encoding.Unicode.GetBytes(ssoSalt);

        //derive key material from the password and salt
        PasswordDeriveBytes secretKey =
            new PasswordDeriveBytes(ssoPassword, saltBytes);

        //create instance of Rijndael class
        using (RijndaelManaged rijndaelCipher = new RijndaelManaged())
        {
            //add padding so that input which is not an even multiple
            //of the block size causes no problems.
            //ISO10126 pads with random bytes, whereas PKCS7 pads with
            //a repeated byte value
            rijndaelCipher.Padding = PaddingMode.ISO10126;

            //create encryptor which converts blocks of text to cipher value
            //use 32 bytes for secret key
            //and 16 bytes for initialization vector (IV)
            using (ICryptoTransform encryptor =
                rijndaelCipher.CreateEncryptor(secretKey.GetBytes(32),
                    secretKey.GetBytes(16)))
            //stream to hold the response of the encryption process
            using (MemoryStream ms = new MemoryStream())
            //process data through CryptoStream and fill MemoryStream
            using (CryptoStream cryptoStream =
                new CryptoStream(ms, encryptor, CryptoStreamMode.Write))
            {
                cryptoStream.Write(inputBytes, 0, inputBytes.Length);

                //flush encrypted bytes, including the final padded block
                cryptoStream.FlushFinalBlock();

                //put value into base64 encoded string and return to caller;
                //the using blocks close and dispose the streams
                return Convert.ToBase64String(ms.ToArray());
            }
        }
    }

For decryption, it looks pretty similar to the encryption operation …

    public string DecryptStringValue(string hashString)
    {
        //convert input (base64 encoded, encrypted) string to a byte array
        byte[] encryptedBytes = Convert.FromBase64String(hashString);

        //convert salt value to byte array
        byte[] saltBytes = Encoding.Unicode.GetBytes(ssoSalt);

        //derive the same key material that was used for encryption
        PasswordDeriveBytes secretKey =
            new PasswordDeriveBytes(ssoPassword, saltBytes);

        //create instance of Rijndael class
        using (RijndaelManaged rijndaelCipher = new RijndaelManaged())
        {
            rijndaelCipher.Padding = PaddingMode.ISO10126;

            //create decryptor which converts cipher blocks back to clear text
            //use 32 bytes for secret key
            //and 16 bytes for initialization vector (IV)
            using (ICryptoTransform decryptor =
                rijndaelCipher.CreateDecryptor(secretKey.GetBytes(32),
                    secretKey.GetBytes(16)))
            using (MemoryStream ms = new MemoryStream(encryptedBytes))
            //process data through CryptoStream while reading MemoryStream
            using (CryptoStream cryptoStream =
                new CryptoStream(ms, decryptor, CryptoStreamMode.Read))
            {
                //the clear text is never longer than the encrypted value,
                //so a buffer of that length always has enough room
                byte[] plainText = new byte[encryptedBytes.Length];

                //do decryption; a single Read may return fewer bytes than
                //requested, so keep reading until the stream is exhausted
                int decryptedCount = 0;
                int bytesRead;
                while ((bytesRead = cryptoStream.Read(plainText,
                    decryptedCount, plainText.Length - decryptedCount)) > 0)
                {
                    decryptedCount += bytesRead;
                }

                //convert byte array of characters back to Unicode string
                //and return plain text value to caller
                return Encoding.Unicode.GetString(plainText, 0, decryptedCount);
            }
        }
    }
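One property of the approach above is that every value is encrypted under the same derived key and IV, so two values that share their first 16 bytes or more produce the same leading cipher-text blocks. As a hedged alternative, and not what my component does, the sketch below derives the key with PBKDF2 (`Rfc2898DeriveBytes`) and prepends a fresh random IV to each value. It assumes .NET Framework 4.7.2 or later and a salt of at least 8 bytes; the `AesSketch` name and the iteration count are my own choices, and the output is not compatible with values produced by the methods above. It also adds no integrity check on the cipher text.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;
using System.Text;

public static class AesSketch
{
    private const int Iterations = 100000; // assumed work factor
    private const int IvSize = 16;         // AES block size in bytes

    private static byte[] DeriveKey(string password, string salt)
    {
        // Rfc2898DeriveBytes rejects salts shorter than 8 bytes.
        byte[] saltBytes = Encoding.Unicode.GetBytes(salt);
        using (var kdf = new Rfc2898DeriveBytes(
            password, saltBytes, Iterations, HashAlgorithmName.SHA256))
        {
            return kdf.GetBytes(32); // 256-bit key
        }
    }

    public static string Encrypt(string clearText, string password, string salt)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = DeriveKey(password, salt);
            aes.GenerateIV(); // new random IV for every value

            using (var ms = new MemoryStream())
            {
                // Store the IV in front of the cipher text.
                ms.Write(aes.IV, 0, aes.IV.Length);
                using (var cs = new CryptoStream(
                    ms, aes.CreateEncryptor(), CryptoStreamMode.Write))
                {
                    byte[] input = Encoding.Unicode.GetBytes(clearText);
                    cs.Write(input, 0, input.Length);
                    cs.FlushFinalBlock();
                }
                // ToArray still works after the stream has been closed.
                return Convert.ToBase64String(ms.ToArray());
            }
        }
    }

    public static string Decrypt(string base64, string password, string salt)
    {
        byte[] all = Convert.FromBase64String(base64);

        // Split the stored IV from the cipher text that follows it.
        byte[] iv = new byte[IvSize];
        Buffer.BlockCopy(all, 0, iv, 0, IvSize);

        using (Aes aes = Aes.Create())
        {
            aes.Key = DeriveKey(password, salt);
            aes.IV = iv;

            using (var input = new MemoryStream(all, IvSize, all.Length - IvSize))
            using (var cs = new CryptoStream(
                input, aes.CreateDecryptor(), CryptoStreamMode.Read))
            using (var output = new MemoryStream())
            {
                cs.CopyTo(output); // reads until the stream is exhausted
                return Encoding.Unicode.GetString(output.ToArray());
            }
        }
    }
}
```

Because the IV travels with each value, encrypting the same credential twice yields two different base64 strings, and both decrypt to the same clear text.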

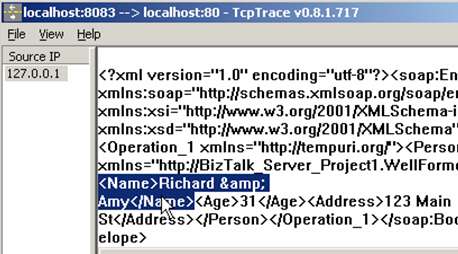

Step #3

All right. Now I have an object that BizTalk will call to decrypt credentials at runtime. However, I don’t want these (encrypted) credentials stored in the source code itself. This would force the team to rebuild the components for each deployment environment. So, I created a small database (SOAServiceUserDb) that stores the service destination URL (as the primary key) and credentials for each service.

Step #4

Next, I built a “DatabaseManager” singleton object which, upon instantiation, queries my SOAServiceUserDb database for all the web service entries and loads them into a member Dictionary object. The “value” of my dictionary’s name/value pair is a ServiceUser object that stores the two sets of credentials that SOA Software needs.
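A rough sketch of that lookup structure follows. The `ServiceUser` property names and `GetServiceUserAccountByUrl` match what my proxy code uses; the `ServiceUserCache` name, the constructor that takes pre-read rows, and the exact-match URL comparison are assumptions made so the example runs without a database. The singleton wiring and the actual query against SOAServiceUserDb are left out.

```csharp
using System;
using System.Collections.Generic;

// Holds the two encrypted credential pairs for one service endpoint.
public sealed class ServiceUser
{
    public string BridgeUserHash { get; set; }
    public string BridgePwHash { get; set; }
    public string RequestUserHash { get; set; }
    public string RequestPwHash { get; set; }
}

// Hypothetical stand-in for the DatabaseManager's in-memory dictionary.
public sealed class ServiceUserCache
{
    private readonly Dictionary<string, ServiceUser> usersByUrl =
        new Dictionary<string, ServiceUser>();

    // rows: (service URL, credentials) pairs, as they might be read
    // from the database once at startup.
    public ServiceUserCache(IEnumerable<KeyValuePair<string, ServiceUser>> rows)
    {
        foreach (KeyValuePair<string, ServiceUser> row in rows)
        {
            usersByUrl[row.Key] = row.Value;
        }
    }

    public ServiceUser GetServiceUserAccountByUrl(string url)
    {
        ServiceUser user;
        if (!usersByUrl.TryGetValue(url, out user))
        {
            throw new KeyNotFoundException(
                "No credentials registered for " + url);
        }
        return user;
    }
}
```

Failing loudly on an unknown URL is a design choice in this sketch; it surfaces a missing database record at the first call instead of sending empty credentials to the Gateway.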

Finally, I have my actual implementation object that ties it all together. The web service proxy class first talks to the DatabaseManager to get back a loaded “ServiceUser” object containing the encrypted credentials for the service endpoint about to be called.

    //read the URL used in the web service proxy; call DatabaseManager
    ServiceUser svcUser =
        DatabaseManager.Instance.GetServiceUserAccountByUrl(this.Url);

I then call into my CryptoManager class to take these encrypted member values and convert them back to clear text.

    string bridgeUser =
        CryptoManager.Instance.DecryptStringValue(svcUser.BridgeUserHash);
    string bridgePw =
        CryptoManager.Instance.DecryptStringValue(svcUser.BridgePwHash);
    string reqUser =
        CryptoManager.Instance.DecryptStringValue(svcUser.RequestUserHash);
    string reqPw =
        CryptoManager.Instance.DecryptStringValue(svcUser.RequestPwHash);

Now the SOA Software gateway API uses these variables instead of hard-coded text.

So, when a new service comes online, we pass the required credentials through my encryption routine to get the encrypted values, add a record to the SOAServiceUserDb to store them, and that’s about it. As we migrate between environments, we simply have to keep our database in sync. Given that my only real risk in this solution is using a single password/salt to encrypt all my values, I feel much better knowing that the critical password is securely stored in Single Sign-On.

I would think that this strategy stretches well beyond my use case here. Thoughts as to how this could apply in other “single password” scenarios?
