Those of you who read Charles’ blog regularly know that he is famous for articles of staggering depth, which leave the reader both exhausted and noticeably smarter. That’s a fair trade-off to me.

Let’s see how Charles fares as he tackles my Four Questions.

Q: I was thrilled that you were a technical reviewer of my recent book on applying SOA patterns to BizTalk solutions. Was there anything new that you learned while reading my drafts? Related to the book’s topic, how do you convince EAI-oriented BizTalk developers to think in a more “service bus” sort of way?

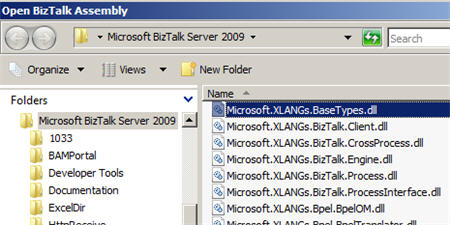

A: Well, actually, it was very useful to read the book. I haven’t had as much real-world experience as I would like with the WCF features introduced in BTS 2006 R2. The book has a lot of really useful tips and potential pitfalls that are, I assume, drawn from real-life experience. That kind of information is hugely valuable to readers…and reviewers.

With regard to service buses, developers tend to be very wary of TLAs like ‘ESB’. My experience has been that IT management are often quicker to understand the potential benefits of implementing service bus patterns, and that it is the developers who take some convincing. IT managers and architects are thinking about overall strategy, whereas developers are wondering how they are going to deliver on the requirements of the current project. I generally emphasise that ‘ESB’ is about two things. First, it is about looking at the bigger picture: understanding how you can exploit BizTalk effectively alongside other technologies like WCF and WF to get synergy between them. Second, it is about first-class exploitation of the more dynamic capabilities of BizTalk Server. If the BizTalk developer is experienced, they will understand that the more straightforward approaches they use often fail to eliminate some of the more subtle coupling that may exist between different parts of their BizTalk solution. Relating ESB to previously experienced pain is often a good way to go.

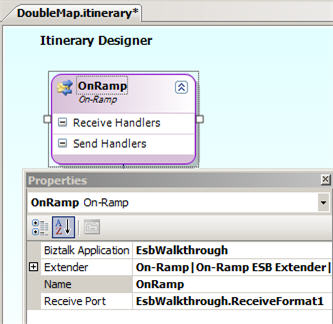

Another consideration is that, although BizTalk has very powerful dynamic capabilities, the basic product hasn’t previously provided the kind of additional tooling and metaphors that make it easy to ‘think’ and implement ESB patterns. Developers have enough on their plates already without having to hand-craft additional code to do things like endpoint resolution. That’s why the ESB Toolkit (due for a new release in a few weeks) is so important to BizTalk, and why, although it’s open source, Microsoft are treating it as part of the product. You need these kinds of frameworks if you are going to convince BizTalk developers to ‘think’ ESB.
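To make the idea of endpoint resolution concrete, here is a minimal Python sketch of the pattern, not of the ESB Toolkit itself (whose resolvers are .NET components configured quite differently). It assumes a hypothetical in-memory registry keyed by logical service name and version; the service names, transport string and URLs are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    transport: str  # illustrative adapter name only (hypothetical value)
    address: str


# Hypothetical registry. In a real service bus this data would live in a
# service registry, a database or a rules policy, not in the calling code.
REGISTRY: dict[tuple[str, str], Endpoint] = {
    ("OrderService", "1.0"): Endpoint("WCF-BasicHttp", "http://orders-v1.example/svc"),
    ("OrderService", "2.0"): Endpoint("WCF-BasicHttp", "http://orders-v2.example/svc"),
}


def resolve(service: str, version: str) -> Endpoint:
    """Map a logical service name and version to a physical endpoint at runtime."""
    try:
        return REGISTRY[(service, version)]
    except KeyError:
        raise LookupError(f"no endpoint registered for {service} v{version}") from None


def route(message: dict) -> Endpoint:
    # The sender names only the logical service; the address is resolved late,
    # so re-pointing a service means editing the registry, not redeploying code.
    return resolve(message["service"], message.get("version", "1.0"))
```

The design point is simply that the message carries intent (which service) while the mapping to a physical address is data that can change independently, which is the kind of plumbing developers would otherwise hand-craft.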

Q: You’ve written extensively on the fundamentals of business rules and recently published a thorough assessment of complex event processing (CEP) principles. These are two areas that a Microsoft-centric manager/developer/architect may be relatively unfamiliar with, given Microsoft’s limited investment in these spaces (so far). Including these, if you’d like, what are some industry technologies that interest you but don’t have much mind share in the Microsoft world yet? How do you describe these to others?

A: Well, I’ve had something of a focus on rules for some time, and more recently I’ve got very interested in CEP, which is, in part, a rules-based approach. Rule processing is a huge subject. People get lost in the detail of different types of rules and different applications of rule processing. There is also a degree of cynicism about using specialised tooling to handle rules. The point, though, is that the ability to automate business processes makes little sense unless you have a first-class capability to externalise business and technical policies and cleanly separate them from your process models, workflows and integration layers. Failure to separate policy leads directly to the kind of coupling that plagues so many solutions. When a policy changes, huge expense is incurred in having to amend the implemented business processes, even though the process model may not have changed at all. So, with my technical architect’s hat on, rule processing technology is about effective separation of concerns.

If readers remain unconvinced about the importance of rules processing, consider that BizTalk Server is built four-square on a rules engine – we call it the ‘pub-sub’ subscription model, which is exploited via the message agent. It is fundamental to the decoupling of services and systems in BizTalk. Subscription rules are externalised and held in a set of database tables. BizTalk Server provides a wide range of facilities via its development and administrative tools for configuring and managing subscription rules. A really interesting feature is the way that BizTalk Server injects subscription rules dynamically into the run-time environment to handle things like correlation onto existing orchestration instances.
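A rough way to picture subscriptions as externalised rules is the Python sketch below. It is a simplification under stated assumptions: property names such as `MessageType` and `OrderId` are illustrative stand-ins for promoted context properties, and the in-memory `MessageBox` class is hypothetical, standing in for the database tables the real product uses. Each subscription is an OR of AND-groups of property/value tests, and an orchestration instance waiting for a correlated reply simply adds a narrower subscription at run time.

```python
from dataclasses import dataclass, field

Condition = tuple[str, str]  # (context property name, required value)


@dataclass
class Subscription:
    subscriber: str
    # The subscription matches if ANY group matches; a group matches
    # only if ALL of its conditions hold against the message context.
    groups: list[list[Condition]]

    def matches(self, context: dict[str, str]) -> bool:
        return any(
            all(context.get(prop) == value for prop, value in group)
            for group in self.groups
        )


@dataclass
class MessageBox:
    # Subscriptions are held as data, outside publishers and subscribers.
    subscriptions: list[Subscription] = field(default_factory=list)

    def subscribe(self, sub: Subscription) -> None:
        self.subscriptions.append(sub)

    def publish(self, context: dict[str, str]) -> list[str]:
        """Return every subscriber whose rule matches the message context."""
        return [s.subscriber for s in self.subscriptions if s.matches(context)]


box = MessageBox()
# A static, configured subscription (think of a send port filter).
box.subscribe(Subscription("ArchiveSendPort",
                           [[("MessageType", "Order")], [("MessageType", "Invoice")]]))
# A dynamically injected correlation subscription for one waiting instance.
box.subscribe(Subscription("OrderOrchestration#17",
                           [[("MessageType", "OrderAck"), ("OrderId", "42")]]))
```

Neither publisher nor subscriber refers to the other; the only coupling is the rule held in the message box, which is the decoupling Charles describes.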

Externalisation of rules is enabled through the use of good frameworks, repositories and tooling. There is a sense in which rule engine technology itself is of secondary importance. Unfortunately, no one has yet quite worked out how to fully separate the representation of rules from the technology that is used to process and apply rules. MS BRE uses the Rete algorithm. WF Rules adopts a sequential approach with optional forward chaining. My argument has been that there is little point in Microsoft investing in a rules processing technology (say WF Rules) unless they are also prepared to invest in the frameworks, tooling and repositories that enable effective use of rules engines.
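The difference between execution strategies is easier to see in code. The sketch below is a simplified, hand-rolled illustration in Python of sequential, priority-ordered rule execution with forward chaining, loosely in the spirit of WF Rules; it is not the Rete algorithm used by MS BRE, nor WF’s actual engine. WF Rules can often work out rule dependencies itself; here each rule declares the facts it reads and writes by hand, an assumption made to keep the sketch small. The `Rule` class, the `execute` function and the order-discount rules are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

Facts = dict[str, object]


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int  # higher priority runs first
    reads: frozenset[str]  # facts the condition depends on
    writes: frozenset[str]  # facts the action may change
    condition: Callable[[Facts], bool]
    action: Callable[[Facts], None]


def execute(rules: list[Rule], facts: Facts, max_steps: int = 100) -> list[str]:
    """Run rules in priority order, re-evaluating earlier rules when facts change."""
    ordered = sorted(rules, key=lambda r: -r.priority)
    fired: list[str] = []
    i, steps = 0, 0
    while i < len(ordered):
        steps += 1
        if steps > max_steps:
            raise RuntimeError("rule chaining did not settle")
        rule = ordered[i]
        if rule.condition(facts):
            before = {k: facts.get(k) for k in rule.writes}
            rule.action(facts)
            fired.append(rule.name)
            changed = {k for k in rule.writes if facts.get(k) != before[k]}
            # Forward chaining: if an already-evaluated rule reads a changed fact,
            # jump back to the highest-priority such rule and continue from there.
            dependants = [j for j in range(i) if ordered[j].reads & changed]
            if dependants:
                i = min(dependants)
                continue
        i += 1
    return fired


rules = [
    Rule("free_shipping", 3, frozenset({"tier"}), frozenset({"shipping"}),
         lambda f: f["tier"] == "gold", lambda f: f.update(shipping=0)),
    Rule("discount", 2, frozenset({"total", "discount"}), frozenset({"discount"}),
         lambda f: f["total"] >= 1000 and f["discount"] == 0,
         lambda f: f.update(discount=0.1)),
    Rule("upgrade", 1, frozenset({"total", "tier"}), frozenset({"tier"}),
         lambda f: f["total"] >= 1000 and f["tier"] != "gold",
         lambda f: f.update(tier="gold")),
]
facts: Facts = {"total": 1500, "tier": "silver", "shipping": 10, "discount": 0}
fired = execute(rules, facts)  # ["discount", "upgrade", "free_shipping"]
```

Here "upgrade" runs last but changes `tier`, so chaining revisits "free_shipping", which had already been evaluated and found false. The guard conditions (`discount == 0`, `tier != "gold"`) stop rules re-firing forever, a practical concern in any chaining engine.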

As far as CEP is concerned, I can’t do justice to that subject here. CEP is all about the value bound up in the inferences we can draw from analysis of diverse events. Events, themselves, are fundamental to human experience, locked as we are in time and space. Today, CEP is chiefly associated with distinct verticals – algorithmic trading systems in investment banks, RFID-based manufacturing processes, etc. Tomorrow, I expect it will have increasingly wider application alongside various forms of analytics, knowledge-based systems and advanced processing. Ironically, this will only happen if we figure out how to make it really simple to deal with complexity. If we do that, then with the massive amount of cheap computing resource that will be available in the next few years, all kinds of approaches that used to be niche interests, or which were pursued only in academia, will begin to come together and enter the mainstream. When customers start clamouring for CEP facilities and advanced analytics in order to remain competitive, companies like Microsoft will start to deliver. It’s already beginning to happen.
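To give a flavour of what “drawing inferences from events” means in practice, here is a deliberately small Python sketch of one common CEP pattern: a sliding time window that turns several simple events into one derived, higher-level event. Real CEP engines express such patterns declaratively and handle far more (out-of-order arrival, joins across streams, absence detection); the `Event` and `BurstDetector` classes and the price-drop stream below are hypothetical, and the sketch assumes events arrive in timestamp order.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds
    key: str  # e.g. a stock symbol or an RFID tag id
    kind: str


class BurstDetector:
    """Emit a derived event when `threshold` events of `kind` for the same key
    arrive within `window` seconds. Assumes events arrive in timestamp order."""

    def __init__(self, kind: str, threshold: int, window: float) -> None:
        self.kind = kind
        self.threshold = threshold
        self.window = window
        self.recent: dict[str, deque] = defaultdict(deque)

    def on_event(self, e: Event) -> Optional[Event]:
        if e.kind != self.kind:
            return None
        times = self.recent[e.key]
        times.append(e.timestamp)
        # Slide the window: drop timestamps more than `window` seconds old.
        while times and e.timestamp - times[0] > self.window:
            times.popleft()
        if len(times) >= self.threshold:
            times.clear()  # reset so one burst yields one derived event
            return Event(e.timestamp, e.key, f"{self.kind}-burst")
        return None


detector = BurstDetector("price_drop", threshold=3, window=10.0)
stream = [
    Event(0.0, "ABC", "price_drop"),
    Event(4.0, "ABC", "price_drop"),
    Event(5.0, "XYZ", "price_drop"),
    Event(9.0, "ABC", "price_drop"),
    Event(30.0, "ABC", "price_drop"),
]
derived = [d for e in stream if (d := detector.on_event(e)) is not None]
```

Only the three “ABC” drops inside ten seconds produce a derived event; the isolated “XYZ” event and the late “ABC” event do not.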

Q: If we assume that good architects (like yourself) do not live in a world of uncompromising absolutes, but rather understand that the answer to most technical questions contains “it depends”, what is an example of a BizTalk solution you’ve built that might raise the eyebrows of those without proper context, but make total sense given the client scenario?

A: It would have been easier to answer the opposite question. I can think of one or two BizTalk applications where I wish I had designed things differently, but where no one has ever raised an eyebrow. If it works, no one tends to complain!

To answer your question, though, one of the more complex designs I worked on was for a scenario where the BizTalk system had only to handle a few hundred distinct activities a day, but where an individual message might represent a transaction worth many millions of pounds (I’m UK-based). The complexity lay in the many different processes and sub-processes that were involved in handling different transactions and business lines, the fact that each business activity involved a redemption period that might extend for a few days, or as long as a year, and the likelihood that parts of the process would change during that period, requiring dynamic decisions to be made as to exactly which version of which sub-process must be invoked in any given situation. The process design was labyrinthine, but we needed to ensure that the implementation of the automated processes was entirely conformant to the detailed process designs provided by the business analysts. That meant traceability, not just in terms of runtime messages and processing, but also in terms of mapping orchestration implementation directly back to the higher-level process definitions. I therefore took the view that the best design was a deeply layered approach in which top-level orchestrations were constructed with little more than Group and Send orchestration shapes, together with some Decision and Loop shapes, in order to mirror the highest-level process definition diagrams as closely as possible. These top-level orchestrations would then call into the next layer of orchestrations, which again closely resembled process definition diagrams at the next level of detail. This pattern was repeated to create a relatively deep tree structure of orchestrations that had to be navigated in order to get to the finest level of functional granularity.

Because the non-functional requirements were so lightweight (a very low volume of messages with no need for sub-second responses, or anything like that), and because the emphasis was on correctness and strict conformance to process definitions and policy, I traded the complexity of this deep structure against two benefits: the ability to trace very precisely from requirements and design through to implementation, and the facility to dynamically resolve, using business rules, exactly which version of which sub-process would be invoked in any given situation.
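The version-resolution part of that design can be pictured with a small Python sketch. It is purely illustrative: in the system described the decision was made by business rules, not Python, and the `VersionRule` structure, the “Redemption” sub-process name, the business lines and the effective dates are all hypothetical. It assumes the decision is keyed on the date a transaction was opened, and that a rule specific to a business line should win over a generic one.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class VersionRule:
    sub_process: str
    business_line: Optional[str]  # None means "any business line"
    opened_from: date  # applies to transactions opened on or after this date
    opened_to: Optional[date]  # exclusive upper bound; None means open-ended
    version: str


# Hypothetical policy, held outside the orchestrations so it can change
# without redeploying the process implementation.
POLICY = [
    VersionRule("Redemption", None, date(2008, 1, 1), date(2009, 4, 1), "1.0"),
    VersionRule("Redemption", None, date(2009, 4, 1), None, "2.0"),
    VersionRule("Redemption", "Bonds", date(2009, 4, 1), None, "2.1"),
]


def resolve_version(sub_process: str, business_line: str, opened_on: date) -> str:
    """Pick the sub-process version that applies to a given transaction."""
    candidates = [
        r for r in POLICY
        if r.sub_process == sub_process
        and r.business_line in (None, business_line)
        and r.opened_from <= opened_on
        and (r.opened_to is None or opened_on < r.opened_to)
    ]
    if not candidates:
        raise LookupError(f"no version of {sub_process} applies")
    # Prefer a rule specific to the business line over a generic one.
    candidates.sort(key=lambda r: r.business_line is None)
    return candidates[0].version
```

A parent orchestration would call something like `resolve_version` before invoking the child, so a policy change alters which sub-process runs without touching the orchestration tree that mirrors the process diagrams.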

I’ve never designed any other BizTalk application in quite the same way, and I think anyone taking a casual look at it would wonder which planet I hail from. I’m the first to admit the design looked horribly over-engineered, but I would strongly maintain that it was the most appropriate approach given the requirements. Actually, thinking about it, there was one other project where I initially came up with something like a mini-version of that design. In the end, we discovered that the true requirements were not as complex as the organisation had originally believed, and the design was therefore greatly simplified…by a colleague of mine…who never lets me forget!

Q [stupid question]: While I’ve followed Twitter’s progress since the beginning, I’ve resisted signing up for as long as I can. You on the other hand have taken the plunge. While there is value to be extracted by this type of service, it’s also ripe for the surreal and ridiculous (e.g. Tweets sent from toilets, a cat with 500,000 followers). Provide an example of a made-up silly use of a Twitter account.

A: I resisted Twitter for ages. Now I’m hooked. It’s a benign form of telepathy – you listen in on other people’s thoughts, but only on their terms. My suggestion for a Twitter application? Well, that would have to be marrying Wolfram|Alpha to Twitter, using CEP and rules engine technologies, of course. Instead of waiting for Wolfram and his team to manually add enough sources of general knowledge to make his system in any way useful to the average person, I envisage a radical departure in which knowledge is derived by direct inference drawn from the vast number of Twitter ‘events’ that are available. Each tweet represents a discrete happening in the domain of human consciousness, allowing us to tap directly into the very heart of the global cerebral cortex. All Wolfram’s team need to do is spend their days composing as many Twitter searches as they can think of and plugging them into a CEP engine together with some clever inferencing rules. The result will be a vast stream of knowledge that will emerge ready for direct delivery via Wolfram’s computation engine. Instead of being limited to comparative analysis of the average height of people in different countries whose second name starts with “P”, this vastly expanded knowledge base will draw only on information that has proven relevance to the human race – tweet epigrams, amusing web sites to visit, ‘succinct’ ideas for politicians to ponder and endless insight into the lives of celebrities.

Thanks Charles. Insightful as always.