I recently wrote about my trip to Stockholm where I demonstrated some scenarios showing how I could leverage my onsite ESB in a cloud-focused solution. The first scenario I demonstrated was using BizTalk Server 2009 to call a series of cloud services and return the result of that orchestrated execution back to a web application hosted in the Amazon.com EC2 cloud. This series of blog posts will show how I put each piece of this particular demonstration together.

In this first post, I’ll show how I created a Python web application in Google App Engine that both lets me add and delete data via a web UI and provides a POX (plain old XML) web service for querying data. I’ll then call this application from BizTalk Server to extract relevant data.

As you’d expect, the initial step was to build the Google App Engine web app. First, you need to sign up for a (free) Google App Engine account. Then, if you’re like me and building a Python app (vs. Java), you can grab all the necessary SDKs from the App Engine download page. You get a local version of the development sandbox so that you can fully test your application before deploying it to the Google cloud.

Let’s walk through the code I built. As a disclaimer, I learned Python solely for this exercise, and I’m sure that my code reflects the language maturity of a fetus. Whatever, it works. Don’t judge me. Either way, there are probably better ways to do what I’ve done; I just couldn’t find them.

First off, I have some import statements for the libraries I’ll use within my code.

```python
import cgi
from google.appengine.ext import webapp
from google.appengine.ext.webapp.util import run_wsgi_app
from google.appengine.ext import db
from xml.dom import minidom
from xml.sax.saxutils import unescape
```

Next I defined a “customer” object which represents the data I wish to stash in the Datastore.

```python
#customer object definition
class Customer(db.Model):
    userid = db.StringProperty()
    firstname = db.StringProperty()
    lastname = db.StringProperty()
    currentbeta = db.StringProperty()
    betastatus = db.StringProperty()
    dateregistered = db.StringProperty()
```

At this point, I’m ready for the primary class, which is responsible for drawing the HTML page where I can add and delete records in my application. First I define the class and write out the header of the page.

```python
#main class
class MainPage(webapp.RequestHandler):
    def get(self):
        #header HTML
        self.response.out.write('<html><head><title>Vandelay Industries Beta Signup Application</title>')
        self.response.out.write('<link type=\"text/css\" rel=\"stylesheet\" href=\"stylesheets/appengine.css\" /></head>')
        self.response.out.write('<body>')
        self.response.out.write('<table class=\"masterTable\">')
        self.response.out.write('<tr><td rowspan=2><img src=\"images/vandsmall.png\"></td>')
        self.response.out.write('<td class=\"appTitle\">Beta Technology Sign Up Application</td></tr>')
        self.response.out.write('<tr><td class=\"poweredBy\">Powered by Google App Engine<img src=\"images/appengine_small.gif\"></td></tr>')
```

Now I want to show any existing customers stored in my system. Before I query the Datastore, I write the table header.

```python
        #show existing customer section
        self.response.out.write('<tr><td colspan=2>')
        self.response.out.write('<hr width=\"75%\" align=\"left\">')
        self.response.out.write('<span class=\"sectionHeader\">Customer List</span>')
        self.response.out.write('<hr width=\"75%\" align=\"left\">')
        self.response.out.write('<table class=\"customerListTable\">')
        self.response.out.write('<tr>')
        self.response.out.write('<td class=\"customerListHeader\">ID</td>')
        self.response.out.write('<td class=\"customerListHeader\">First Name</td>')
        self.response.out.write('<td class=\"customerListHeader\">Last Name</td>')
        self.response.out.write('<td class=\"customerListHeader\">Current Beta</td>')
        self.response.out.write('<td class=\"customerListHeader\">Beta Status</td>')
        self.response.out.write('<td class=\"customerListHeader\">Date Registered</td>')
        self.response.out.write('</tr>')
```

Here’s the good stuff. Relatively. I query the Datastore using a SQL-like syntax called GQL and then loop through the results and print each returned record.

```python
        #query customers from database
        customers = db.GqlQuery('SELECT * FROM Customer')
        #add each customer to page
        for customer in customers:
            self.response.out.write('<tr>')
            self.response.out.write('<td class=\"customerListCell\">%s</td>' % customer.userid)
            self.response.out.write('<td class=\"customerListCell\">%s</td>' % customer.firstname)
            self.response.out.write('<td class=\"customerListCell\">%s</td>' % customer.lastname)
            self.response.out.write('<td class=\"customerListCell\">%s</td>' % customer.currentbeta)
            self.response.out.write('<td class=\"customerListCell\">%s</td>' % customer.betastatus)
            self.response.out.write('<td class=\"customerListCell\">%s</td>' % customer.dateregistered)
            self.response.out.write('</tr>')
        self.response.out.write('</table><br/><br />')
        self.response.out.write('</td></tr>')
```
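The loop above writes Datastore values straight into the HTML, so a name containing `&` or `<` would break the markup (or inject script). Here is a minimal Python 3 sketch of the same row rendering with every value passed through the standard library's `html.escape`; the `render_row` helper and the plain dict standing in for a `Customer` entity are hypothetical, not part of the App Engine API. On the Python 2 runtime this app targets, `cgi.escape` (already imported at the top of the file) plays the same role.

```python
import html

# Column order matches the table header written by MainPage.get.
FIELDS = ["userid", "firstname", "lastname",
          "currentbeta", "betastatus", "dateregistered"]


def render_row(customer):
    """Render one customer as an HTML table row, escaping every value.

    `customer` is a plain dict standing in for a Customer entity.
    """
    cells = []
    for field in FIELDS:
        # Missing or None values render as empty cells.
        value = customer.get(field) or ""
        cells.append('<td class="customerListCell">%s</td>' % html.escape(value))
    return "<tr>" + "".join(cells) + "</tr>"


row = render_row({"userid": "42", "firstname": "Art",
                  "lastname": "Vandelay & Sons", "currentbeta": "<script>",
                  "betastatus": "Active", "dateregistered": "2009-10-01"})
print(row)
```

The `&` in the last name comes out as `&amp;` and the `<script>` value is rendered as inert text rather than markup.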

I then need a way to add new records to the application, so here’s a block that defines the HTML form and input fields that capture a new customer. Note that my form’s “action” is set to “/Add”.

```python
        #add customer entry section
        self.response.out.write('<tr><td colspan=2>')
        self.response.out.write('<hr width=\"75%\" align=\"left\">')
        self.response.out.write('<span class=\"sectionHeader\">Add New Customer</span>')
        self.response.out.write('<hr width=\"75%\" align=\"left\">')
        self.response.out.write('<form action="/Add" method="post">')
        self.response.out.write('<table class=\"customerAddTable\">')
        self.response.out.write('<tr><td class=\"customerAddHeader\">ID:</td>')
        self.response.out.write('<td class=\"customerListCell\"><input type="text" name="userid"></td></tr>')
        self.response.out.write('<tr><td class=\"customerAddHeader\">First Name:</td>')
        self.response.out.write('<td class=\"customerListCell\"><input type="text" name="firstname"></td></tr>')
        self.response.out.write('<tr><td class=\"customerAddHeader\">Last Name:</td>')
        self.response.out.write('<td class=\"customerListCell\"><input type="text" name="lastname"></td></tr>')
        self.response.out.write('<tr><td class=\"customerAddHeader\">Current Beta:</td>')
        self.response.out.write('<td class=\"customerListCell\"><input type="text" name="currentbeta"></td></tr>')
        self.response.out.write('<tr><td class=\"customerAddHeader\">Beta Status:</td>')
        self.response.out.write('<td class=\"customerListCell\"><input type="text" name="betastatus"></td></tr>')
        self.response.out.write('<tr><td class=\"customerAddHeader\">Date Registered:</td>')
        self.response.out.write('<td class=\"customerListCell\"><input type="text" name="dateregistered"></td></tr>')
        self.response.out.write('</table>')
        self.response.out.write('<input type="submit" value="Add Customer">')
        self.response.out.write('</form><br/>')
        self.response.out.write('</td></tr>')
```

Finally, I have an HTML form for a delete behavior which has an action of “/Delete.”

```python
        #delete all section
        self.response.out.write('<tr><td colspan=2>')
        self.response.out.write('<hr width=\"75%\" align=\"left\">')
        self.response.out.write('<span class=\"sectionHeader\">Delete All Customers</span>')
        self.response.out.write('<hr width=\"75%\" align=\"left\">')
        self.response.out.write('<form action="/Delete" method="post"><div><input type="submit" value="Delete All Customers"></div></form>')
        self.response.out.write('</td></tr>')
        self.response.out.write('</table>')
        #write footer
        self.response.out.write('</body></html>')
```

The bottom of my “.py” file has the necessary setup declarations to register my default class as the handler for the root URL and fire up the application.

```python
#setup
application = webapp.WSGIApplication([('/', MainPage)], debug=True)

def main():
    run_wsgi_app(application)

if __name__ == "__main__":
    main()
```

If I open a DOS prompt, navigate to the parent folder of my solution and run `dev_appserver.py serotercustomer/` (assuming I have a valid app.yaml file that points at my .py file), I can see a local, running instance of my web app.

Cool. Of course, I still need to wire up the handlers for adding, deleting and getting a customer. For the “Add” operation, I create a new “customer” object and populate it with values from the form submitted on the default page. After calling the “put” operation on the object (which saves it to the Datastore), I redirect back to the default HTML page.

```python
#add customer action class
class AddCustomer(webapp.RequestHandler):
    def post(self):
        customer = Customer()
        customer.firstname = self.request.get('firstname')
        customer.lastname = self.request.get('lastname')
        customer.userid = self.request.get('userid')
        customer.currentbeta = self.request.get('currentbeta')
        customer.betastatus = self.request.get('betastatus')
        customer.dateregistered = self.request.get('dateregistered')
        #store customer
        customer.put()
        self.redirect('/')
```
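Each `self.request.get(name)` call returns the submitted value of one form field, or an empty string when the field is missing. To make that concrete, here is a stdlib-only Python 3 sketch that decodes the URL-encoded POST body a browser sends from the Add form; `parse_customer_form` is a hypothetical helper for illustration, not part of webapp.

```python
from urllib.parse import parse_qs

# Field names match the <input name="..."> attributes in the Add form.
FORM_FIELDS = ["userid", "firstname", "lastname",
               "currentbeta", "betastatus", "dateregistered"]


def parse_customer_form(body):
    """Decode an application/x-www-form-urlencoded body into a customer dict.

    A missing field becomes '', mirroring self.request.get()'s default.
    """
    # keep_blank_values=True so an empty text box yields '' instead of vanishing.
    params = parse_qs(body, keep_blank_values=True)
    return {name: params.get(name, [""])[0] for name in FORM_FIELDS}


customer = parse_customer_form("userid=42&firstname=Art&lastname=Vandelay+Industries")
print(customer)
```

Note that `+` and percent-escapes are decoded, so `Vandelay+Industries` arrives as `Vandelay Industries`.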

My “Delete” is pretty coarse: all it does is delete every customer object from the Datastore.

```python
#delete customer action class
class DeleteCustomer(webapp.RequestHandler):
    def post(self):
        customers = db.GqlQuery('SELECT * FROM Customer')
        for customer in customers:
            customer.delete()
        self.redirect('/')
```

The “Get” operation is where I earn my paycheck. This “Get” is called by a system (i.e. not the user interface), so it needs to accept XML in and return XML back. So I take the XML received in the body of the HTTP POST, unescape it, load it into an XML DOM, and pull out the “userid” node value. I then execute some GQL using that customer ID to retrieve the corresponding record from the Datastore. Finally, I build an XML string, load it back into a DOM object, and return that to the caller.

```python
#get customer action class
class GetCustomer(webapp.RequestHandler):
    def post(self):
        #read inbound xml
        xmlstring = self.request.body
        #unescape to XML
        xmlstring2 = unescape(xmlstring)
        #load the unescaped string into an XML DOM
        xmldoc = minidom.parseString(xmlstring2)
        #yank out value
        idnode = xmldoc.getElementsByTagName("userid")
        userid = idnode[0].firstChild.nodeValue
        #find customer
        customers = db.GqlQuery('SELECT * FROM Customer WHERE userid=:1', userid)
        customer = customers.get()
        #no matching record: return 404 instead of failing on a None object
        if customer is None:
            self.error(404)
            return
        lastname = customer.lastname
        firstname = customer.firstname
        currentbeta = customer.currentbeta
        betastatus = customer.betastatus
        dateregistered = customer.dateregistered
        #build result
        responsestring = """<CustomerDetails>
<ID>%s</ID>
<FirstName>%s</FirstName>
<LastName>%s</LastName>
<CurrentBeta>%s</CurrentBeta>
<BetaStatus>%s</BetaStatus>
<DateRegistered>%s</DateRegistered>
</CustomerDetails>""" % (userid, firstname, lastname, currentbeta, betastatus, dateregistered)
        #parse result
        xmlresponse = minidom.parseString(responsestring)
        self.response.headers['Content-type'] = 'text/xml'
        #return result
        self.response.out.write(xmlresponse.toxml())
```
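Stripped of the App Engine plumbing, `GetCustomer` boils down to: find the `userid` element in the inbound XML, look the record up, and serialize a `CustomerDetails` document. Here is a minimal Python 3 sketch of that round trip using `xml.etree.ElementTree` instead of `minidom` and a `%s` template. A plain dict stands in for the Datastore query, and `handle_get`, `CUSTOMERS` and the `CustomerRequest` root name in the usage line are hypothetical. Building the response with `ElementTree` escapes characters such as `&` automatically, which the string template does not.

```python
import xml.etree.ElementTree as ET

# Hypothetical in-memory stand-in for the Datastore, keyed by userid.
CUSTOMERS = {
    "42": {"firstname": "Art", "lastname": "Vandelay",
           "currentbeta": "Beta 2", "betastatus": "Active",
           "dateregistered": "2009-10-01"},
}

# Response element name -> customer property, in the order GetCustomer emits them.
RESPONSE_FIELDS = [("FirstName", "firstname"), ("LastName", "lastname"),
                   ("CurrentBeta", "currentbeta"), ("BetaStatus", "betastatus"),
                   ("DateRegistered", "dateregistered")]


def handle_get(request_xml):
    """Return (status, body) for a POX request containing a <userid> element."""
    try:
        root = ET.fromstring(request_xml)
    except ET.ParseError:
        return 400, ""
    # iter() searches the whole tree (root included), like getElementsByTagName.
    idnode = next(root.iter("userid"), None)
    if idnode is None or not idnode.text:
        return 400, ""
    userid = idnode.text.strip()
    customer = CUSTOMERS.get(userid)
    if customer is None:
        return 404, ""
    details = ET.Element("CustomerDetails")
    ET.SubElement(details, "ID").text = userid
    for tag, prop in RESPONSE_FIELDS:
        ET.SubElement(details, tag).text = customer[prop]
    return 200, ET.tostring(details, encoding="unicode")


status, body = handle_get("<CustomerRequest><userid>42</userid></CustomerRequest>")
print(status, body)
```

Because the lookup only cares about the `userid` element, the request's root element name does not matter, which is consistent with the namespace-free schemas used on the BizTalk side.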

Before running the solution again, I need to update my “setup” statement to register the new routes (“/Add”, “/Delete”, “/Get”).

```python
#setup
application = webapp.WSGIApplication([('/', MainPage),
                                      ('/Add', AddCustomer),
                                      ('/Delete', DeleteCustomer),
                                      ('/Get', GetCustomer)],
                                     debug=True)
```
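`webapp.WSGIApplication` takes a list of `(path, handler class)` pairs and dispatches each request to the matching handler's `get` or `post` method. The sketch below illustrates that idea using only plain WSGI conventions from the standard library. It is a simplified stand-in (exact path matching only), not the webapp implementation, and the handler functions are hypothetical placeholders for `MainPage`, `AddCustomer`, `DeleteCustomer` and `GetCustomer`.

```python
# Hypothetical handlers; each returns (status, headers, body).
def main_page(environ):
    return "200 OK", [("Content-Type", "text/html")], "<html>customer list</html>"


def add_customer(environ):
    # A real handler would store the record first, then redirect like self.redirect('/').
    return "302 Found", [("Location", "/")], ""


def delete_customer(environ):
    return "302 Found", [("Location", "/")], ""


def get_customer(environ):
    return "200 OK", [("Content-Type", "text/xml")], "<CustomerDetails/>"


# Path -> {HTTP method -> handler}, mirroring the list passed to WSGIApplication.
ROUTES = {
    "/": {"GET": main_page},
    "/Add": {"POST": add_customer},
    "/Delete": {"POST": delete_customer},
    "/Get": {"POST": get_customer},
}


def application(environ, start_response):
    """Minimal WSGI app that dispatches on exact path and HTTP method."""
    methods = ROUTES.get(environ.get("PATH_INFO", "/"))
    if methods is None:
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Not Found"]
    handler = methods.get(environ.get("REQUEST_METHOD", "GET"))
    if handler is None:
        # The path exists, but no handler method is defined for this verb.
        start_response("405 Method Not Allowed", [("Allow", ", ".join(sorted(methods)))])
        return [b""]
    status, headers, body = handler(environ)
    start_response(status, headers)
    return [body.encode("utf-8")]
```

To poke at it locally, `make_server("", 8080, application).serve_forever()` (after `from wsgiref.simple_server import make_server`) serves it on port 8080.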

Coolio. If I run my web application now, I can add and delete records, and any records in the store show up on the page. Now I can deploy my app to the Google cloud using either the console or the new deployment application. I then added a few sample records that BizTalk could look up later.

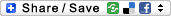

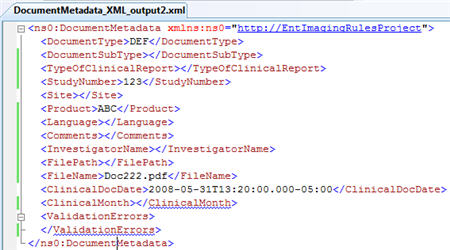

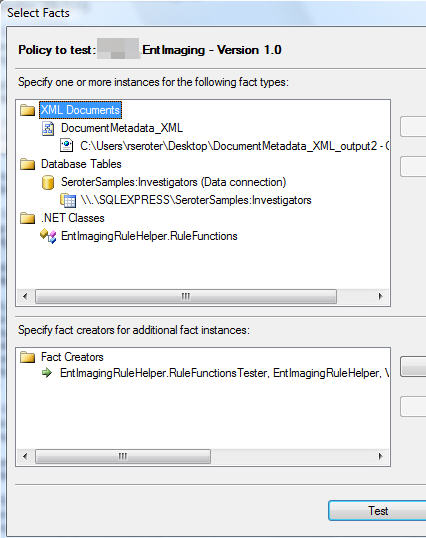

The final thing to do is have BizTalk call my POX web service. In my new BizTalk project, I built a schema for the service request. Remember that all it needs to contain is a customer ID. Also note that my Google App Engine XML is simplistic and contains no namespaces. That’s no problem for a BizTalk schema; neither of my hand-built Google App Engine XSDs has a namespace defined. Here is my service request schema:

The POX service response schema reflects the XML structure that my service returns.

Now that I have this, I decided to use a solicit-response BizTalk HTTP adapter to invoke my service. The URL of my service was `http://<my app name>.appspot.com/Get`, which targets the “Get” operation that accepts the HTTP POST request.
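To exercise the `/Get` operation without BizTalk, a small client can POST the same kind of namespace-free request message. This Python 3 sketch only builds the `urllib.request.Request` without sending it. The app name is a parameter because the real one is not given here, and the `CustomerRequest` root element name is an assumption; the service code only looks for a `userid` element.

```python
import urllib.request
from xml.sax.saxutils import escape


def build_get_request(app_name, userid):
    """Build (but do not send) a POX POST to the App Engine /Get operation.

    The <CustomerRequest> root element name is hypothetical; GetCustomer only
    searches the body for a <userid> element.
    """
    body = "<CustomerRequest><userid>%s</userid></CustomerRequest>" % escape(userid)
    return urllib.request.Request(
        "http://%s.appspot.com/Get" % app_name,
        data=body.encode("utf-8"),
        headers={"Content-Type": "text/xml"},
        method="POST",
    )


req = build_get_request("your-app-name", "42")  # hypothetical app name
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req).read()
```

The BizTalk HTTP send port does essentially the same thing: it POSTs the request message body to that URL and hands the XML response to whatever subscribes to it.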

Since I don’t have an orchestration yet, I can just use a messaging scenario and have a FILE send port that subscribes to the response from the solicit-response HTTP port. When I send in a file with a valid customer ID, I end up with a full response back from my POX web service.

So there you go. Creating a POX web service in the Google App Engine and using BizTalk Server to call it. Next up, using BizTalk to extract data from a SalesForce.com instance.