Trying to significantly improve your company’s ability to build and run good software? Forget Docker, public cloud, Kubernetes, service meshes, Cloud Foundry, serverless, and the rest of it. Over the years, I’ve learned the most important place you should start: continuous integration and delivery pipelines. Arguably, “apps on pipeline” is the most important “transformation” metric to track. Not “deploys per day” or “number of microservices.” It’s about how many apps you’ve lit up for repeatable, automated deployment. That’s a legit measure of how serious you are about being responsive and secure.

All this means I needed to get smarter with Concourse, one of my favorite tools for CI (and a little CD). I decided to build an ASP.NET Core app, and continuously integrate and deliver it to a Cloud Foundry environment running in AWS. Let’s go!

First off, I needed an app. I spun up a new ASP.NET Core Web API project with a couple REST endpoints. You can grab the source code here. Most of my code demos don’t include tests because I’m in marketing now, so YOLO, but a trustworthy pipeline needs testable code. If you’re a .NET dev, xUnit is your friend. It’s maintained by my friend Brad, so I basically chose it because of peer pressure. My .csproj file included a few references to bring xUnit into my project:

- “Microsoft.NET.Test.Sdk” Version=”15.7.0″

- “xunit” Version=”2.3.1″

- “xunit.runner.visualstudio” Version=”2.3.1″

Then, I created a class to hold the tests for my web controller. I included one test with a basic assertion, and another “theory” with an input data set. These are comically simple, but prove the point!

public class TestClass {

private ValuesController _vc;

public TestClass() {

_vc = new ValuesController();

}

[Fact]

public void Test1(){

Assert.Equal("pivotal", _vc.Get(1));

}

[Theory]

[InlineData(1)]

[InlineData(3)]

[InlineData(20)]

public void Test2(int value) {

Assert.Equal("public", _vc.GetPublicStatus(value));

}

}

When I ran dotnet test against the above app, I got an expected error because the third inline data source led to a test failure, since my controller only returns “public” companies when the input value is between 1 and 10. Commenting the offending inline data source led to a successful test run.

Ok, the app was done. Now, to put it on a pipeline. If you’ve ever used shameful swear words when wrangling your CI server, maybe it’s worth joining all the folks who switched to Concourse. It’s a pretty straightforward OSS tool that uses a declarative model and containers for defining and running pipelines, respectively. Getting started is super simple. If you’re running Docker on your desktop, that’s your easiest route. Just grab this Docker Compose file from the Concourse GitHub repo. I renamed mine to docker-compose.yml, jumped into a Terminal session, switched to the folder holding this YAML file, and ran docker-compose up -d. After a second or two, I had a PostgreSQL server (for state) and a Concourse server. PROVE IT, you say. Hit localhost:8080, and you’ll see the Concourse dashboard.

Besides this UX, we interface with Concourse via a CLI tool called fly. I downloaded it from here. I then used fly to add my local environment as a “target” to manage. Instead of plugging in the whole URL every time I interacted with Concourse, I created an alias (“rs”) using fly -t rs login -c http://localhost:8080. If you get a warning to sync your version of fly with your version of Concourse, just enter fly -t rs sync and it gets updated. Neato.

Next up? The pipeline. Pipelines are defined in YAML and are made up of resources and jobs. One of the great things about a declarative model, is that I can run my CI tests against any Concourse by just passing in this (source-controlled) pipeline definition. No point-and-ciick configurations, no prerequisite components to install. Love it. First up, I defined a couple resources. One was my GitHub repo, the second was my target Cloud Foundry environment. In the real world, you’d externalize the Cloud Foundry credentials, and call out to files to build the app, etc. For your benefit, I compressed to a single YAML file.

resources:

- name: seroter-source

type: git

source:

uri: https://github.com/rseroter/xunit-tested-dotnetcore

branch: master

- name: pcf-on-aws

type: cf

source:

api: https://api.run.pivotal.io

skip_cert_check: false

username: XXXXX

password: XXXXX

organization: seroter-dev

space: development

Those resources tell Concourse where to get the stuff it needs to run the jobs. The first job used the GitHub resource to grab the source code. Then it used the Microsoft-provided Docker image to run the dotnet test command.

jobs:

- name: aspnetcore-unit-tests

plan:

- get: seroter-source

trigger: true

- task: run-tests

privileged: true

config:

platform: linux

inputs:

- name: seroter-source

image_resource:

type: docker-image

source:

repository: microsoft/aspnetcore-build

run:

path: sh

args:

- -exc

- |

cd ./seroter-source

dotnet restore

dotnet test

Concourse isn’t really a CD tool, but it does a nice basic job of getting code to a defined destination. The second job deploys the code to Cloud Foundry. It also uses the source code resource and only fires if the test job succeeds. This ensures that only fully-tested code makes its way to the hosting environment. If I were being more responsible, I’d take the results of the test job, drop it into an artifact repo, and then use that artifact for deployment. But hey, you get the idea!

jobs:

- name: aspnetcore-unit-tests

[...]

- name: deploy-to-prod

plan:

- get: seroter-source

trigger: true

passed: [aspnetcore-unit-tests]

- put: pcf-on-aws

params:

manifest: seroter-source/manifest.yml

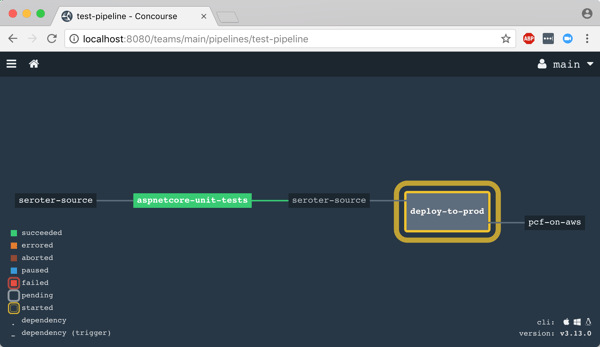

That was it! I was ready to deploy the pipeline (pipeline.yml) to Concourse. From the Terminal, I executed fly -t rs set-pipeline -p test-pipeline -c pipeline.yml. Immediately, I saw my pipeline show up in the Concourse Dashboard.

After I unpaused my pipeline, it fired up automatically.

Remember, my job specified a Microsoft-provided container for building the app. Concourse started this job by downloading the Docker image.

After downloading the image, the job kicked off the dotnet test command and confirmed that all my tests passed.

Terrific. Since my next job was set to trigger when the first one succeeded, I immediately saw the “deploy” job spin up.

This job knew how to publish content to Cloud Foundry, and used the provided parameters to deploy the app in a few seconds. Note that there are other resource types if you’re not a Cloud Foundry user. Nobody’s perfect!

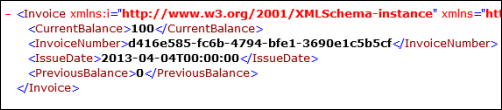

The pipeline run was finished, and I confirmed that the app was actually deployed.

Finished? Yes, but I wanted to see a failure in my pipeline! So, I changed my xUnit tests and defined inline data that wouldn’t pass. After committing code to GitHub, my pipeline kicked off automatically. Once again it was tested in the pipeline, and this time, failed. Because it failed, the next step (deployment) didn’t happen. Perfect.

If you’re looking for a CI tool that people actually like using, check out Concourse. Regardless of what you use, focus your energy on getting (all?) apps on pipelines. You don’t do it because you have to ship software every hour, as most apps don’t need it. It’s about shipping whenever you need to, with no drama. Whether you’re adding features or patching vulnerabilities, having pipelines for your apps means you’re actually becoming a customer-centric, software-driven company.