I like being exposed to new technologies, so I reached out to the folks at SnapLogic and asked to take their platform for a spin. SnapLogic is part of this new class of “integration platform as a service” providers that take a modern approach to application/data integration. In this first blog post (of a few where I poke around the platform), I’ll give you a sense of what SnapLogic is, how it works, and show a simple solution.

What Is It?

With more and more SaaS applications in use, a company needs to rethink how it integrates its application portfolio. SnapLogic offers a scalable, AWS-hosted platform that streams data between endpoints that exist in the cloud or on-premises. Integration jobs can be invoked programmatically, via the web interface, or on a schedule. The platform supports more than traditional ETL operations: I can use SnapLogic to do both batch and real-time integration. It runs as a multi-tenant cloud service and has tools for building, managing, and monitoring integration flows.

The platform has a modern, mobile-friendly interface and offers many of the capabilities you expect from a traditional integration stack: audit trails, bulk data support, guaranteed delivery, and security controls. However, it differs from classic stacks in that it offers geo-redundancy, self-updating software, support for hierarchical/relational data, and elastic scale. That’s pretty compelling stuff if you’re faced with trying to integrate with new cloud apps from legacy integration tools.

How Does It Work?

The agent that runs SnapLogic workflows is called a Snaplex. While the SnapLogic cloud itself is multi-tenant, each customer gets their own elastic Snaplex. What if you have data behind the corporate firewall that a cloud-hosted Snaplex can’t access? Fortunately, SnapLogic lets you deploy an on-premises Snaplex that can talk to local systems. This helps you design integration solutions that securely span environments.

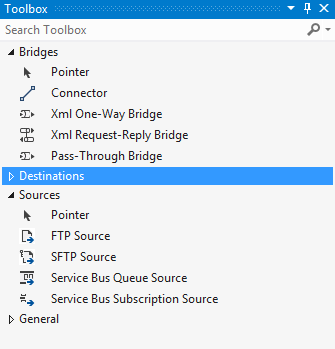

SnapLogic workflows are called pipelines and the tasks within a pipeline are called snaps. With more than 160 snaps available (and an SDK to add more), integration developers can put together a pipeline pretty quickly. Pipelines are built in a web-based design surface where snaps are connected to form simple or complex workflows.

It’s easy to drag snaps to the pipeline designer, set properties, and connect snaps together.

The platform offers a dashboard view where you can see the health of your environment, pipeline run history, and details about what’s running in the Snaplex.

The “manager” screens let you do things like create users, add groups, browse pipelines, and more.

Show Me An Example!

Ok, let’s try something out. In this basic scenario, I’m going to do a file transfer/translation process. I want to take in a JSON file, and output a CSV file. The source JSON contains some sample device reads:

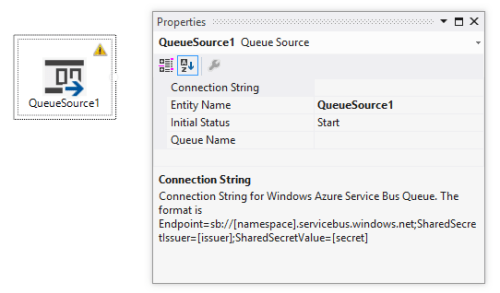

I sketched out a flow that reads a JSON file that I uploaded to the SnapLogic file system, parses it, and then splits it into individual documents for processing. There are lots of nice usability touches, such as interpreting my JSON format and helping me choose a place to split the array up.

Then I used a CSV Formatter snap to export each record to a CSV file. Finally, I wrote the results to a file. I could have also used the File Writer snap to publish the results to Amazon S3, FTP, S/FTP, FTP/S, HTTP, or HDFS.
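To make the parse, split, and format steps concrete, here is a rough Python sketch of the same transformation done outside SnapLogic. It is not how the snaps are implemented. The sample data, the `reads` array key, and the field names (`deviceId`, `reading`, `timestamp`) are assumptions for illustration, since the real file's fields aren't spelled out in the text.

```python
import csv
import io
import json

# Hypothetical sample data: field names and values are assumptions for illustration only.
SAMPLE_JSON = """
{
  "reads": [
    {"deviceId": "A100", "reading": 21.5, "timestamp": "2014-03-01T08:00:00Z"},
    {"deviceId": "B200", "reading": 19.0, "timestamp": "2014-03-01T08:05:00Z"}
  ]
}
"""


def split_records(parsed, array_key):
    """Mimic the split step: yield one document per element of the chosen array."""
    for record in parsed[array_key]:
        yield record


def to_csv(records):
    """Mimic the CSV formatting step: header from the first record, then one row per record."""
    records = list(records)
    if not records:
        return ""
    out = io.StringIO()
    writer = csv.DictWriter(
        out, fieldnames=list(records[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return out.getvalue()


parsed = json.loads(SAMPLE_JSON)                       # parse step
csv_text = to_csv(split_records(parsed, "reads"))      # split + format steps
print(csv_text)
```

The pipeline does the same thing visually: one snap per step, with the designer suggesting where to split the array instead of a hard-coded key.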

It’s easy to run a pipeline within this interface. That’s the most manual way of kicking off a pipeline, but it’s handy for debugging or irregular execution intervals.

The result? A nicely formatted CSV file that some existing system can easily consume.

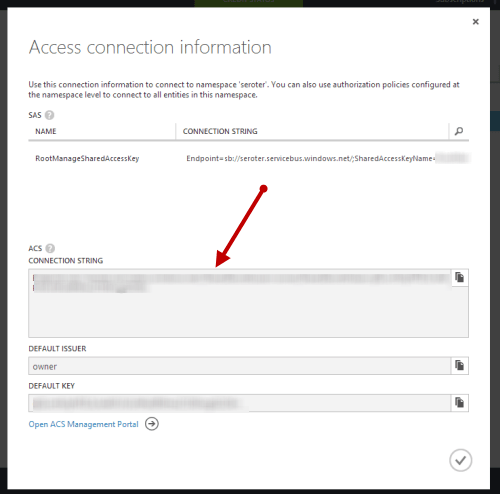

Do you want to run this on a schedule? Imagine pulling data from a source every night and updating a related system. It’s pretty easy with SnapLogic: all you have to do is define a task and point it at the pipeline to execute.

When you define a task, you can also set the “Run With” value to “Triggered”, which gives you a URL for external invocation. If I pulled the last snap off my pipeline, then the CSV results would get returned to the HTTP caller. If I pulled the first snap off my pipeline, then I could send an HTTP POST request and send a JSON message into the pipeline. Pretty cool!
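For a sense of what calling a triggered pipeline might look like from the outside, here is a minimal Python sketch that builds the HTTP POST request with the standard library. The URL, the bearer-token header, and the payload fields are all placeholders I made up for illustration; the real URL comes from the task you define, and the authentication it expects may differ.

```python
import json
import urllib.request


def build_trigger_request(task_url, records, bearer_token=None):
    """Build (but do not send) a POST request carrying JSON records to a triggered task URL."""
    body = json.dumps(records).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if bearer_token:
        # Assumption: the task accepts a bearer token; check your task's settings.
        headers["Authorization"] = f"Bearer {bearer_token}"
    return urllib.request.Request(task_url, data=body, headers=headers, method="POST")


request = build_trigger_request(
    "https://example.invalid/hypothetical/triggered-task",  # placeholder URL
    [{"deviceId": "A100", "reading": 21.5}],                # hypothetical payload
    bearer_token="hypothetical-token",
)

# To actually invoke the task, send the request and read the response body,
# which would carry the CSV output if the pipeline ends without a file writer:
#
#     with urllib.request.urlopen(request) as response:
#         csv_text = response.read().decode("utf-8")
```

Building the request separately from sending it keeps the sketch runnable without a live endpoint and makes it easy to inspect what would go over the wire.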

Summary

It’s good to be aware of what technologies are out there, and SnapLogic is definitely one to keep an eye on. It provides a very cloud-native integration suite that can satisfy both ETL and ESB scenarios in an easy-to-use way. I’ll do another post or two that shows how to connect cloud endpoints together, so watch out for that.

What do you think? Have you used SnapLogic before, or do you think that this sort of integration platform is the future?