I recently ran into a situation where I wanted to reuse a single web service proxy class across multiple BizTalk send ports, but each send port required its own unique code snippet.

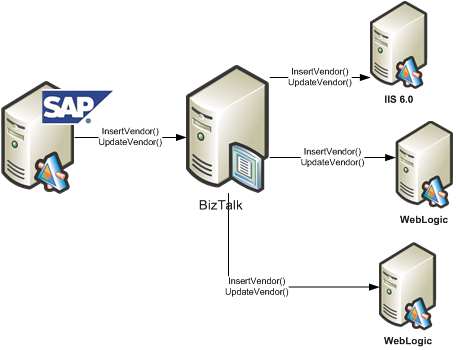

We use SAP XI to send data to BizTalk, which, in turn, fans out the data to interested systems. Let’s say that one of those SAP objects describes our external Vendors. Each consumer of the Vendor data (i.e., BizTalk, and then each downstream system) consumes the same WSDL. That is, each subscriber of Vendor data receives the same object type and has the same service operations.

So, I can generate a single proxy class using WSDL.exe and my “Vendor” WSDL, and use that proxy class for each BizTalk send port. The technology platform of the destination system doesn’t matter; this proxy should work fine whether the downstream service is Java, .NET, Unix, Windows, whatever.

Now the challenge. We use SOA Software Service Manager to manage and secure our web services. As I pointed out in my posts about SOA Software and BizTalk, each caller of a service managed by Service Manager needs to add the appropriate headers to conform to the service policy. That is, if the web service operation requires a SAML token, then the service caller must inject it. Instead of forcing the developer to figure out how to add the required headers correctly, SOA Software provides an SDK that handles this logic for you. However, each service may have different policies requiring different credentials. So, how do I use the same proxy class but inject subscriber-specific code at runtime in the send port?

What I wanted was to apply a basic Inversion of Control (IOC) pattern and inject code at runtime. At its base, an IOC pattern is simply really, really, really late binding. That’s all there is to it. So, the key is to find an easy-to-use framework that exploits this pattern. We are fairly regular users of Spring (for Java), so I thought I’d use Spring.NET in my adventures here.
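To make the “late binding” point concrete, here is a minimal sketch using only the .NET base class library. The `LateBinder` helper is hypothetical (it is not part of Spring.NET); it just shows the core idea: the concrete type is named by a string, which could come from a config file in the same `"Namespace.Type, AssemblyName"` form Spring.NET uses, and is only resolved and instantiated at runtime.

```csharp
using System;

// Hypothetical helper illustrating "really late binding": the caller knows
// only a contract (T) and a type name string; the concrete class is resolved
// and created at runtime.
public static class LateBinder
{
    public static T Create<T>(string typeName) where T : class
    {
        // throwOnError: true surfaces a mistyped type name immediately
        Type type = Type.GetType(typeName, throwOnError: true);

        // Requires a public parameterless constructor
        object instance = Activator.CreateInstance(type);

        // Fail loudly if the configured type does not honour the contract
        if (!(instance is T typed))
        {
            throw new InvalidCastException(
                $"{typeName} does not implement {typeof(T).FullName}");
        }
        return typed;
    }
}
```

A container like Spring.NET layers configuration parsing, lifetime management and property injection on top of this, but the binding decision is still deferred until a type name is read at runtime.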

I need four things to make this solution work:

- A simple interface, implemented by each subscribing service team, that contains the code specific to their Service Manager policy settings.

- A Spring.NET configuration file that references these interface implementations

- A singleton object that reads the configuration file once and provides BizTalk with pointers to these objects

- A modified web service proxy class that consumes the correct Service Manager code for a given send port

First, I need to define an interface. Mine is comically simple.

```csharp
public interface IExecServiceManager
{
    bool PrepareServiceCall();
}
```

Each web service subscriber can build a .NET component library that implements that interface. The “PrepareServiceCall” operation contains the code necessary to apply Service Manager policies.
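As an illustration, here is what one subscriber’s library might look like. The namespace and class name match the `type` attribute in the configuration file shown in this post, but the body is a hypothetical placeholder: the SOA Software SDK calls are not shown here, so this sketch only counts how often preparation ran. The `PreparedCalls` property is likewise an assumption added for the demo.

```csharp
using System;

namespace Demonstration.IOC.InterfaceObject
{
    // The shared contract from the post; each subscriber team implements it
    public interface IExecServiceManager
    {
        bool PrepareServiceCall();
    }
}

namespace Demonstration.IOC.SystemBServiceSetup
{
    using Demonstration.IOC.InterfaceObject;

    // Hypothetical subscriber implementation. Spring.NET creates it through
    // its public parameterless (default) constructor.
    public class ServiceSetup : IExecServiceManager
    {
        // Demo-only counter so the behaviour can be observed
        public int PreparedCalls { get; private set; }

        public bool PrepareServiceCall()
        {
            // Placeholder for subscriber-specific policy work, e.g. obtaining
            // credentials or a SAML token through the vendor SDK (not shown).
            PreparedCalls++;
            return true;
        }
    }
}
```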

Next I need a valid Spring.NET configuration file. Now, I could have extended the standard btsntsvc.exe.config BizTalk configuration file (à la Enterprise Library), but I actually PREFER keeping this separate: it’s easier to maintain and keeps clutter out of the BizTalk configuration file. My Spring.NET configuration looks like this:

```xml
<objects xmlns="http://www.springframework.net">
  <object name="http://localhost/ERP.Vendor.Subscriber2/SubscriberService.asmx"
          type="Demonstration.IOC.SystemBServiceSetup.ServiceSetup, Demonstration.IOC.SystemBServiceSetup"
          singleton="false"/>
</objects>
```

I created two classes which implemented the previously defined interface and referenced them in that configuration file.
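Each `object` entry maps a key (here, the subscriber’s service URL) to a `"Type, Assembly"` string. To show that lookup in isolation, here is a deliberately tiny, hypothetical stand-in written with LINQ to XML. It is not Spring.NET’s `XmlObjectFactory` and ignores most of what Spring.NET supports; it only parses `name`/`type` pairs and, like `singleton="false"`, builds a fresh instance on every request. In BizTalk the referenced assembly would need to be loadable (e.g. GAC’d) for `Type.GetType` to find it.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical mini factory mimicking the <object name="..." type="..."/> lookup
public sealed class MiniObjectFactory
{
    // Spring.NET object definitions live in this XML namespace
    private static readonly XNamespace SpringNs = "http://www.springframework.net";

    private readonly Dictionary<string, string> typeNamesByKey;

    public MiniObjectFactory(string configXml)
    {
        XDocument doc = XDocument.Parse(configXml);

        // Map each object's name (the key) to its "Type, Assembly" string;
        // ToDictionary throws if two entries share the same name.
        typeNamesByKey = doc.Root
            .Elements(SpringNs + "object")
            .ToDictionary(
                e => (string)e.Attribute("name"),
                e => (string)e.Attribute("type"),
                StringComparer.Ordinal);
    }

    // Non-singleton behaviour: a new instance is created on every call
    public object GetObject(string key)
    {
        if (!typeNamesByKey.TryGetValue(key, out string typeName))
        {
            throw new KeyNotFoundException($"No object configured for '{key}'");
        }
        Type type = Type.GetType(typeName, throwOnError: true);
        return Activator.CreateInstance(type);
    }
}
```

Keying on the service URL is what lets one proxy class serve every send port: each send port already carries a distinct URL, so no extra routing metadata is needed.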

Next I wanted a singleton object to load the configuration file and keep it in memory. This is what triggered my research into BizTalk and singletons a while back. My singleton’s constructor calls its primary operation, LoadFactory:

```csharp
using Spring.Context;
using Spring.Objects.Factory.Xml;
using Spring.Core.IO;

…

private void LoadFactory()
{
    // point Spring.NET at the object definitions on disk
    IResource objectList = new FileSystemResource(
        @"C:\BizTalk\Projects\Demonstration.IOC\ServiceSetupObjects.xml");

    // set private static value
    xmlFactory = new XmlObjectFactory(objectList);
}
```
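The rest of the singleton isn’t shown above, so here is one way it could be structured, assuming .NET 4 or later for `Lazy<T>`. To keep the sketch runnable without Spring.NET, a hypothetical `FactoryHandle` stands in for `XmlObjectFactory`, and `FactorySingleton` is an illustrative name rather than the post’s actual `XmlObjectFactorySingleton`. The point is that the configuration is loaded exactly once even when several send-port threads ask for the factory at the same moment.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for Spring.NET's XmlObjectFactory
public sealed class FactoryHandle
{
}

public sealed class FactorySingleton
{
    // ExecutionAndPublication: the constructor runs once, even under
    // concurrent first access from multiple BizTalk threads
    private static readonly Lazy<FactorySingleton> instance =
        new Lazy<FactorySingleton>(() => new FactorySingleton(),
                                   LazyThreadSafetyMode.ExecutionAndPublication);

    private static int loadCount;
    private readonly FactoryHandle factory;

    private FactorySingleton()
    {
        factory = LoadFactory();
    }

    public static FactorySingleton Instance => instance.Value;

    // How many times the configuration was loaded (should stay at 1)
    public static int LoadCount => Volatile.Read(ref loadCount);

    public FactoryHandle GetFactory() => factory;

    private static FactoryHandle LoadFactory()
    {
        Interlocked.Increment(ref loadCount);
        // Real code: new XmlObjectFactory(new FileSystemResource(path))
        return new FactoryHandle();
    }
}
```

Because BizTalk hosts the send ports in one long-running process, paying the configuration-parsing cost once and sharing the factory is the main benefit; changes to the XML file would then require a host instance restart to be picked up.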

Finally, I modified the auto-generated web service proxy class to utilize Spring.NET and load my Service Manager implementation class at runtime.

```csharp
using Spring.Context;
using Spring.Objects.Factory.Xml;
using Spring.Core.IO;
using Demonstration.IOC.InterfaceObject;

…

public void ProcessNewVendor(NewVendorType NewVendor)
{
    // get WS URL, which can be used as our Spring config key
    string factoryKey = this.Url;

    // get pointer to factory
    XmlObjectFactory xmlFactory =
        XmlObjectFactorySingleton.Instance.GetFactory();

    // get the implementation object as an interface
    IExecServiceManager serviceSetup =
        xmlFactory.GetObject(factoryKey) as IExecServiceManager;

    // "as" yields null if the configured type doesn't implement the interface
    if (serviceSetup == null)
    {
        throw new System.InvalidOperationException(
            "No IExecServiceManager implementation configured for " + factoryKey);
    }

    // execute send port-specific code
    bool responseValue = serviceSetup.PrepareServiceCall();

    this.Invoke("ProcessNewVendor", new object[] { NewVendor });
}
```
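The same flow can be exercised without BizTalk, SOAP or Spring.NET. In this hypothetical sketch, `VendorProxySketch` plays the generated proxy, a resolver delegate plays the Spring.NET factory lookup, and an action delegate replaces `this.Invoke`. `DelegateServiceManager` is a demo-only adapter. Unlike the original method, which ignores `responseValue`, this sketch treats a `false` result as a failure; that is an assumption, not something the post prescribes.

```csharp
using System;

public interface IExecServiceManager
{
    bool PrepareServiceCall();
}

// Demo-only adapter so behaviour can be supplied inline
public sealed class DelegateServiceManager : IExecServiceManager
{
    private readonly Func<bool> prepare;
    public DelegateServiceManager(Func<bool> prepare) => this.prepare = prepare;
    public bool PrepareServiceCall() => prepare();
}

// One proxy class for every send port; the injected behaviour is chosen by Url
public class VendorProxySketch
{
    private readonly Func<string, IExecServiceManager> resolve;
    private readonly Action<string> invoke;

    public VendorProxySketch(string url,
        Func<string, IExecServiceManager> resolve, Action<string> invoke)
    {
        Url = url;
        this.resolve = resolve;
        this.invoke = invoke;
    }

    public string Url { get; }

    public void ProcessNewVendor(string vendorId)
    {
        // Look up the subscriber-specific setup using the URL as the key
        IExecServiceManager setup = resolve(Url)
            ?? throw new InvalidOperationException($"Nothing configured for {Url}");

        // Assumption: a false result aborts the call
        if (!setup.PrepareServiceCall())
        {
            throw new InvalidOperationException($"Service preparation failed for {Url}");
        }

        invoke(vendorId); // stands in for the SOAP Invoke
    }
}
```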

Now, when a new subscriber comes online, all we do is create an implementation of IExecServiceManager, GAC it, and update the Spring.NET configuration file. The other option would have been to create separate web service proxy classes for each downstream subscriber, which would be a mess to maintain.

I’m sure we’ll come up with many other ways to use Spring.NET and IOC patterns within BizTalk. However, you can easily go overboard with this dependency injection stuff and end up with an academically brilliant, but practically stupid architecture. I’m a big fan of maintainable simplicity.
