I’ve spent the better part of this week wrestling with Oracle connectivity issues, and figured I’d share a few things I’ve discovered.

A recent BizTalk application deployment included an orchestration that does a simple update to an Oracle table. Instead of using the Oracle adapter, I used .NET component and the objects in the System.Data.OracleClient .NET framework namespace. As usual, everything worked fine in the development and test environments.

Upon moving to production, all of a sudden I was seeing the following error with some frequency:

Logging failure … System.InvalidOperationException: Timeout expired. The timeout period elapsed prior to obtaining a connection from the pool. This may have occurred because all pooled connections were in use and max pool size was reached.

at System.Data.ProviderBase.DbConnectionFactory.GetConnection(DbConnection owningConnection)

…

Yowza. The most common reason for this occuring is failing to properly close/dispose a database connection. After scouring the code, I was positive that this wasn’t the case. After a bit of research, I came across the following two Microsoft .NET Framework hotfixes:

So in a nutshell, bad database connections are by default, returned to the connection pool. Nice. I went ahead and applied this hotfix in production, but still saw intermittent (but less frequent) occurences of the error above.

Next, I decided to turn on the SQL/Oracle performance counters so that I could actually see the pooling going on. There are a few counters that are “off” by default (including NumberOfActiveConnections and NumberOfFreeConnections) and require a flag in the application configuration file. To add these counters, go to the BTSNTSvc.exe.config file, and add the following section …

<system.diagnostics>

<switches>

<add name="ConnectionPoolPerformanceCounterDetail"

value="4"/>

</switches>

</system.diagnostics>

Now, on my BizTalk server, I can add performance counters for the .NET Data Provider for Oracle and see exactly what’s going on.

For my error above, the most important counter to initially review is NumberofReclaimedConnections which indicates how many database connections were cleaned up by the .NET Garbage Collector and not closed properly. If this number was greater than 0, or increasing over time, then clearly I’d have a connection leak problem. In my case, even under intense load, this value stayed at 0.

When reviewing the NumberOfFreeConnections counter, I noticed that this was usually 0. Because my database connection string didn’t include any pooling details, I wasn’t sure how many connections the pool allocated automatically. As desperation set in, I decided to tweak my connection string to explicitly set pooling conditions (new part in bold):

User Id=useracct1;Password=secretpassword;

Data Source=prod_system.company.com;

Pooling=yes;Max Pool Size=100;Min Pool Size=5;

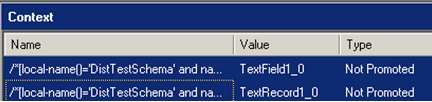

Once I did this, my counters looked like my picture above, with a minimum of 5 connections available in the pool. As I type this (2 days after applying this “fix”), the problem has yet to resurface. I’m not declaring victory yet since it’s too small of a sample size.

However, given the grief that this has caused me, I’m tempted to switch from the System.Data.OracleClient to the System.Data.Odbc objects where I’ve had previous success and never seen this error in production. My other choice is give up my dream of using the API altogether and use the BizTalk Oracle adapter instead. Thoughts?

To add insult to my week of Oracle connectivity hell, I’ve noticed that the Oracle adapter for a DIFFERENT application has been spitting this message out with greater occasion …

Failed to send notification : System.AccessViolationException: Attempted to read or write protected memory. This is often an indication that other memory is corrupt.

Naturally the message in the Event Log doesn’t tell me which send/receive port this is associated with because that would make troubleshooting less exciting. Anyone else see this rascal when using the Microsoft Biztalk Adapters for Enterprise Applications? I’ve also see it on occasion with my .NET code solution.

All of this is the reason I missed the Los Angeles BizTalk Server 2006 R2 launch event this week. I’m still bitter. However, I’m told that bets were made at the event as to whether I’d blog more or less while out on paternity leave in a week or two, so it’s nice to know they were thinking of me! Stay tuned.

Technorati Tags: BizTalk, Oracle