One of the glaring omissions from InfoPath 2007 is integration with non SQL Server data repositories. For retrieving reference data (query data), your only choice is SQL Server. That’s lousy. For submitting data, your only choice is SQL Server or Microsoft Access, and, you can only submit to a database when using the thick client version of InfoPath. That is, you can’t do a direct database submission on the InfoPath Forms Services version of a form. This also sucks. SharePoint isn’t much better, as the SharePoint Designer integration with ODBC data sources (such as Oracle) is clumsy at best, and in our experience, not really functional.

But, complaining gets me nowhere. So, how can I cleanly interface between InfoPath and Oracle, which is the overwhelming database preference in my current environment? What I’ll show here is how to use the BizTalk Adapter Pack to generate the service stubs I need to query and publish to Oracle databases. I’ll be publishing another article on TopXML.com that digs deeply into the BizTalk Adapter Pack, so I won’t go into great depth here on what it is. But the short description is: the BizTalk Adapter Pack contains Line of Business (LOB) adapters that use a common WCF infrastructure which can be consumed by WCF clients, ADO.NET clients (for ETL scenarios), BizTalk clients (for robust workflow, EAI scenarios), or basic SOAP clients (for single operation scenarios). I’m building an example for the last option.

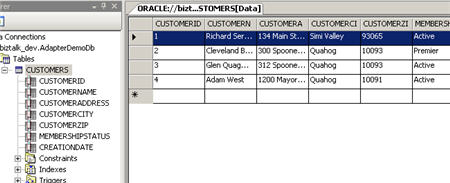

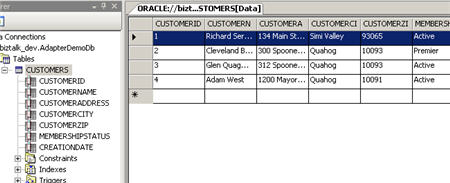

First off, I need data in my Oracle database tables. If you are a regular Visual Studio.NET user, you know that you can access ODBC data sources (such as Oracle) using the built-in Server Explorer. This works fine for data entry, but if you want true control over the database, you need more. One really nice option (from Oracle itself) is the Oracle Developer Tools for Visual Studio. This is a nice plug in for VS.NET that gives you most of what you need for Oracle design tasks.

Lots of great stuff in there. For my purposes, I needed a new table to start with.

Then I had to fill in some initial data.

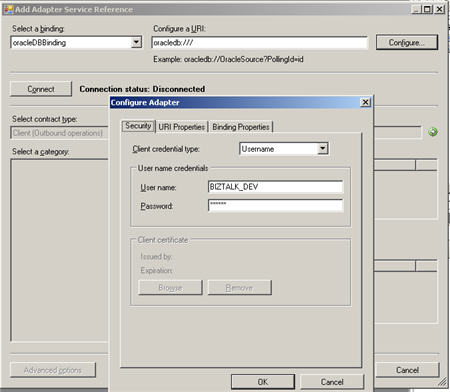

Now we can actually build the service which interacts with this table. First, I created a new “WCF Service Library” project in Visual Studio.NET. From there, once the BizTalk Adapter Pack is installed, I can select Add Adapter Service Reference from the “Add” menu.

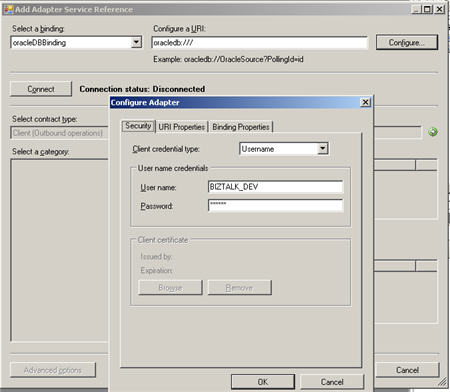

This opens a window where I can select my connection criteria, browse objects in my repository, and select the operations I want. The first thing to do is configure the URI. After choosing which binding to use (in my case, the OracleDbBinding), I have to set the DataSourceName value. This is the identifier in my Oracle tnsnames.ora file. We no longer use System DSN entries to connect to Oracle and instead put database details in the tnsnames.ora file. After setting the data source, I have to put the username and password for my Oracle database.

Once all my connection details are set, I can connect to my database. Now, I can search (*new feature alert*) and browse the database tables, views, functions, packages and procedures.

After choosing my database table operation (“Select”) and closing out the window, I get an auto-generated application configuration file, and, a class containing all the interfaces, contracts and proxy code needed to consume this database service. Now, as stated earlier, I want to take this WCF service, and expose it out for basic SOAP clients (i.e. InfoPath/SharePoint). Before building the web service proxy around this WCF client, I want to add a brand new service interface. I don’t want to directly expose the Adapter Pack interface, but rather, put a simpler interface out to my SOAP clients.

So, in this WCF Service Library project, I added a new class containing a WCF ServiceContract and OperationContract, as well as the service which implements those contracts and calls my auto-generated Adapter Pack proxy.

So I have a friendly service interface which abstracts some of the BizTalk Adapter Pack details, but calls the auto-generated Oracle Adapter bits when it’s operation is invoked.

Now, I need to put this service into a web-ready container. All I have now is the library project. So, I created a new “Web Site” project of type “WCF Service.” I don’t need much from this service, so the underlying “cs” file can be deleted. Then, after adding a reference to my WCF Service Library project, I have to modify the Service.svc file so that it points at my implementation class (“Seroter.Demo.BizTalkAdapterPack.CustomerSvcLibrary.CustomerQuery”) for it’s “Service” attribute. After this, all that remains is to set up the web.config file to both see my Oracle Adapter endpoint (as a service) and expose new endpoints for my SOAP clients. After copying the information from the auto-generated app.config file (that should still be in the WCF Service Library project) into my web.config, I can launch the Service Configuration Editor to make the remaining web.config changes. Specifically, I need to add a new service (and endpoint), and provide a metadata behavior so that clients can query the service metadata.

So now I have a client endpoint (which uses the BizTalk Adapter Pack Oracle binding), and a new service with an HTTP endpoint that basic SOAP clients can use. After creating a new virtual directory in IIS 6.0, and pointing to the WCF Service project, I now have a ready-to-go database access service.

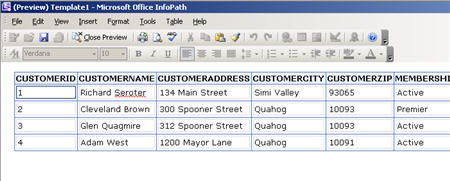

From InfoPath 2007, I can now call and consume this service to pull reference data from Oracle. Specifically, I created a new data connection, pointed to the IIS service, and chose the operation I needed.

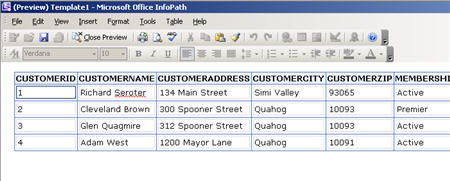

Now I can drag the repeating results node to the InfoPath form and create a table. If I preview the form, the service gets called, and the results are displayed.

That was easy! Took about a dozen lines of code and a configuration file setup. In a later post, I’ll show how to use this service to populate data forms in SharePoint as well.

Technorati Tags: WCF, InfoPath