To mark the just-released BizTalk Server 2009 product, I thought my ongoing series of interviews should engage one of Microsoft’s senior leadership figures on the BizTalk team. I’m delighted that Ofer Ashkenazi, Senior Technical Product Manager with Enterprise Application Platform Marketing at Microsoft, and the guy in charge of product planning for future releases of BizTalk, decided to take me up on my offer.

Because I can, I’ve decided to up this particular interview to FIVE questions instead of the standard four. This does not mean that I asked two stupid questions instead of one (although this month’s question is arguably twice as stupid). No, rather, I wanted the chance to pepper Ofer on a range of topics and didn’t feel like trimming my question list. Enjoy.

Q: Congrats on the new version of BizTalk Server. At my company, we just deployed BTS 2006 R2 into production. I’m sure many other BizTalk customers are fairly satisfied with their existing 2006 installation. Give me two good reasons that I should consider upgrading from BizTalk 2006 (R2) to BizTalk 2009.

A: Thank you Richard for the opportunity to answer your questions, which I’m sure are relevant for many existing BizTalk customers.

I’ll be more generous with you :) and I’ll give you three reasons why you may want to upgrade to BizTalk Server 2009: to reduce costs, to improve productivity and to promote agile innovation. Let me elaborate on these reasons, which are even more important in the current economic climate:

- Reduce Costs – through server virtualization and consolidation and integration with existing systems. BizTalk Server 2009 supports Windows Server 2008 with Hyper-V and SQL Server 2008. Customers can completely virtualize their development, test and even production environments. Using fewer physical servers to host BizTalk solutions can reduce costs associated with purchasing and maintaining the hardware. With BizTalk Enterprise Edition you can also dramatically save on the software cost by running an unlimited number of virtual machines with BizTalk instances on a single licensed physical server. With new and enhanced adapters, BizTalk Server 2009 lets you re-use existing applications and minimize the costs involved in modernizing and leveraging existing legacy code. This BizTalk release provides new adapters for Oracle E-Business Suite and for SQL Server and includes enhancements especially in the Line of Business (LOB) adapters and in connectivity to IBM’s mainframe and midrange systems.

- Improve Productivity – for developers and IT professionals using Visual Studio 2008 and Visual Studio Team System 2008, which are now supported by BizTalk. For developers, being able to use Visual Studio 2008 means that they can be more productive while developing BizTalk solutions. They can leverage new map debugging and unit testing options, but even more importantly they can enjoy a truly connected application life cycle experience. Collaborating with testers, project managers and IT Pros through Visual Studio Team System 2008 and Team Foundation Server (TFS) and leveraging capabilities such as source control, bug tracking, automated testing, continuous integration and automated build (with MSBuild) can make the process of developing BizTalk solutions much more efficient. Project managers can also gain better visibility into code completion and test coverage with MS Project integration and project reporting features. Enhancements in BizTalk B2B (specifically EDI and AS2) capabilities allow for faster customization for specific B2B solution requirements.

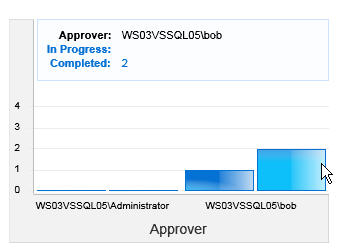

- Promote Agile Innovation – specific improvements in service oriented capabilities, RFID and BAM capabilities will help you drive innovation for the business. BizTalk Server 2009 includes UDDI Services v3 that can be used to provide agility to your service oriented solution with run-time resolution of service endpoint URI and configuration. ESB Guidance v2 based on BizTalk Server 2009 will help make your solutions more loosely coupled and easier to modify and adjust over time to cope with changing business needs. BizTalk RFID in this release features support for Windows Mobile and Windows CE and for emerging RFID standards. Including RFID mobility scenarios, for asset tracking or for doing retail inventories for example, will make your business more competitive. Business Activity Monitoring (BAM) in BizTalk Server 2009 has been enhanced to support the latest format of Analysis Services UDM cubes and the latest Office BI tools. These enhancements will help decision makers in your organization gain better visibility into operational metrics and business KPIs in real time. User-friendly SharePoint solutions that visualize BAM data will help monitor your business execution and ensure its performance.

Q: Walk us through the process of identifying new product features. Do such features come from (a) direct customer requests, (b) comparisons against competition and realizing that you need a particular feature to keep up with others, (c) product team suggestions of features they think are interesting, (d) somewhere else or some combination of all of these?

A: It really is a combination of all of the above. We do emphasize customer feedback and embrace an approach that captures experience gained from engagements with our customers to make sure we address their needs. At the same time we take a wider and more forward-looking view to make sure we can meet the challenges that our customers will face in the near-term future (a few years ahead). As you personally know, we try to involve MVPs from the BizTalk customer and partner community to make sure our plans resonate with them. We have various other programs that let us get such feedback from customers as well as internal and external advisors at different stages of the planning process. Trying to weave together all of these inputs is a fine balancing act which makes product planning both very interesting and challenging…

Q: Microsoft has the (sometimes deserved) reputation for sitting on the sidelines of a particular software solution until the buzz, resulting products and the overall market have hit a particular maturation point. We saw aspects of this with BizTalk Server as the terms SOA, BPM and ESB were attached to it well after the establishment of those concepts in the industry. That said, what are the technologies, trends or buzz-worthy ideas that you keep an eye on and that influence your thinking about future versions of BizTalk Server?

A: Unlike many of our competitors that try to align with the market hype by frequently acquiring technologies and thus burdening their customers with the challenge of integrating technologies that were never even meant to work together, we tend to take a different approach. We make sure that our application platform is well integrated and includes the right foundation to ease and commoditize software development and reduce complexities. Obviously it takes more time to build such an integrated platform based on rationalized capabilities as services rather than patch it together with foreign technologies. When you consider the fact that Microsoft has spearheaded service orientation with WS-* standards adoption as well as with very significant investments in WCF, you realize that such commitments have a large and long-lasting impact on the way you build and deliver software.

With regard to BizTalk you can expect to see future versions that provide more ESB enhancements and better support for S+S solutions. We are going to showcase some of these capabilities even with BizTalk Server 2009 in the coming conferences.

Q: We often hear from enlightened Connected Systems folks that the WF/WCF/Dublin/Oslo collection of tools is complementary to BizTalk and not in direct competition. Prove it to us! Give me a practical example of where BizTalk would work alongside those previously mentioned technologies to form a useful software solution.

A: Indeed BizTalk does already work alongside some of these technologies to deliver better value for customers. Take for example WCF that was integrated with BizTalk in the 2006 R2 release: the WCF adapter that contains 7 flavors of bindings can be used to expose BizTalk solutions as WS-* compliant web services and also to interface with LOB applications using adapters in the BizTalk Adapter Pack (which are based on the WCF LOB adapter SDK).

With enhanced integration between WF and WCF in .NET 3.5 you can experience more synergies with BizTalk Server 2009. You should soon see a new demo from Microsoft that highlights such WF and BizTalk integration. This demo, which we will unveil within a few weeks at TechEd North America, features a human workflow solution hosted in SharePoint and implemented with WF (.NET 3.5) that invokes a system workflow solution implemented with BizTalk Server 2009 through the BizTalk WCF adapter.

When the “Dublin” and “Oslo” technologies are released, you can expect to see practical examples of BizTalk solutions that leverage these. We already see some partners, MVPs and Microsoft experts experimenting with harnessing Oslo modeling capabilities for BizTalk solutions (good examples are Yossi Dahan’s Oslo-based solution for deploying BizTalk applications and Dana Kaufman’s A BizTalk DSL using “Oslo”). Future releases of BizTalk will provide better out-of-the-box alignment with innovations in the Microsoft Application Platform technologies.

Q [stupid question]: You wear red glasses which give you a distinctive look. That’s an example of a good distinction. There are naturally BAD distinctions someone could have as well (e.g. “That guy always smells like kielbasa.”, “That guy never stops humming ‘Rock Me Amadeus’ from Falco.”, or “That guy wears pants so tight that I can see his heartbeat.”). Give me a distinction you would NOT want attached to yourself.

A: I’m sorry to disappoint you, Richard, but my red-rimmed glasses have broken down – you will have to get accustomed to seeing me in a brand new frame of a different color… :)

A distinction I would NOT want to attach myself to would be “that unapproachable guy from Redmond who is unresponsive to my email”. Even as my workload increases I want to make sure I can still interact in a very informal manner with anybody on both professional and non-professional topics…

Thanks Ofer for a good chat. The BizTalk team is fairly good about soliciting feedback and listening to what they receive in return, and hopefully they continue this trend as the product continues to adapt to the maturing of the application platform.
