In my last post, I looked at how BizTalk Server 2009 could send messages to the Azure .NET Services Service Bus. It’s only logical that I would also try to demonstrate integration in the other direction: can I send a message to a BizTalk receive location through the cloud service bus?

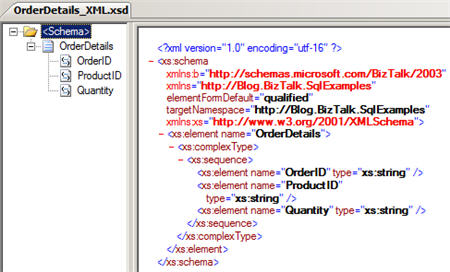

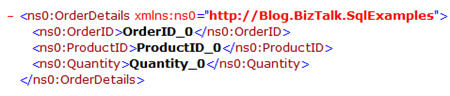

Let’s get started. First, I need to define the XSD schema which reflects the message I want routed through BizTalk Server. This is a painfully simple “customer” schema.
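The post doesn’t list the schema’s fields, so here is a minimal sketch of what such a schema might look like. Only the root element name `Customer` and the namespace `http://Seroter.Blog.BusSubscriber` come from the WSDL in this post; the child elements (`CustomerId`, `FirstName`, `LastName`) are hypothetical placeholders.

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Hypothetical sketch: root element and namespace match the WSDL,
     the child fields are illustrative assumptions only. -->
<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema"
           targetNamespace="http://Seroter.Blog.BusSubscriber"
           elementFormDefault="qualified">
  <xs:element name="Customer">
    <xs:complexType>
      <xs:sequence>
        <!-- Hypothetical fields -->
        <xs:element name="CustomerId" type="xs:string" />
        <xs:element name="FirstName" type="xs:string" />
        <xs:element name="LastName" type="xs:string" />
      </xs:sequence>
    </xs:complexType>
  </xs:element>
</xs:schema>
```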

Next, I want to build a custom WSDL that outlines the message and operation BizTalk will receive. I could walk through the wizards and the like, but all I really want is the WSDL file, since I’ll hand it off to my service client later on. My WSDL imports the previously built schema and defines a single request message (plus an empty response), a single operation, and a single service with one port.

```xml
<?xml version="1.0" encoding="utf-8"?>
<wsdl:definitions name="CustomerService"
    targetNamespace="http://Seroter.Blog.BusSubscriber"
    xmlns:wsdl="http://schemas.xmlsoap.org/wsdl/"
    xmlns:soap="http://schemas.xmlsoap.org/wsdl/soap/"
    xmlns:tns="http://Seroter.Blog.BusSubscriber"
    xmlns:xsd="http://www.w3.org/2001/XMLSchema">

  <!-- declare types -->
  <wsdl:types>
    <!-- no targetNamespace on this wrapper: XSD does not allow importing
         the same namespace as the enclosing schema's targetNamespace -->
    <xsd:schema>
      <xsd:import
          schemaLocation="http://rseroter08:80/Customer_XML.xsd"
          namespace="http://Seroter.Blog.BusSubscriber" />
    </xsd:schema>
  </wsdl:types>

  <!-- declare messages -->
  <wsdl:message name="CustomerMessage">
    <wsdl:part name="part" element="tns:Customer" />
  </wsdl:message>
  <wsdl:message name="EmptyResponse" />

  <!-- declare port types -->
  <wsdl:portType name="PublishCustomer_PortType">
    <wsdl:operation name="PublishCustomer">
      <wsdl:input message="tns:CustomerMessage" />
      <wsdl:output message="tns:EmptyResponse" />
    </wsdl:operation>
  </wsdl:portType>

  <!-- declare binding -->
  <wsdl:binding name="PublishCustomer_Binding"
      type="tns:PublishCustomer_PortType">
    <soap:binding transport="http://schemas.xmlsoap.org/soap/http"/>
    <wsdl:operation name="PublishCustomer">
      <soap:operation soapAction="PublishCustomer" style="document"/>
      <wsdl:input>
        <soap:body use="literal"/>
      </wsdl:input>
      <wsdl:output>
        <soap:body use="literal"/>
      </wsdl:output>
    </wsdl:operation>
  </wsdl:binding>

  <!-- declare service -->
  <wsdl:service name="PublishCustomerService">
    <!-- binding is a QName, so it takes the tns: prefix -->
    <wsdl:port binding="tns:PublishCustomer_Binding" name="PublishCustomerPort">
      <soap:address location="http://localhost/Seroter.Blog.BusSubscriber"/>
    </wsdl:port>
  </wsdl:service>
</wsdl:definitions>
```

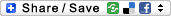

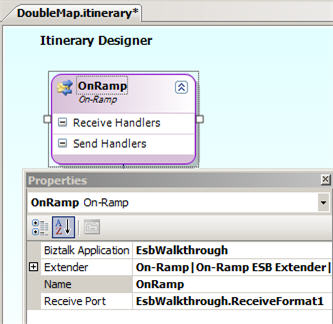

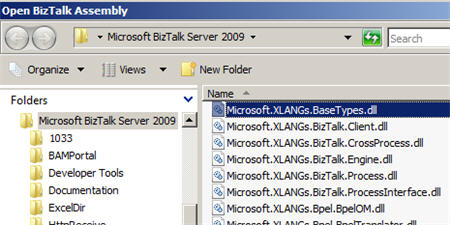

Note that the URL in the service address above doesn’t matter. We’ll be replacing this with our service bus address. Next (after deploying our BizTalk schema), we should configure the service-bus-connected receive location. We can take advantage of the WCF-Custom adapter here.

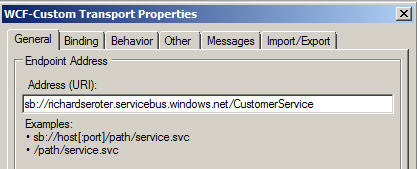

First, we set the Azure cloud (service bus) address on which the receive location should listen.

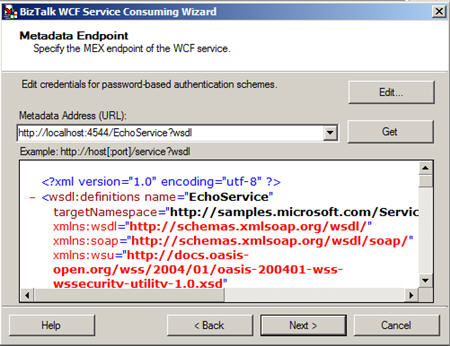

Next we set the binding, which in our case is the NetTcpRelayBinding. I’ve also explicitly set it up to use Transport security.

In order to authenticate with our Azure cloud service endpoint, we have to define our security scheme. I added a TransportClientEndpointBehavior and set it to use UserNamePassword credentials. Then, don’t forget to click the UserNamePassword node and enter your actual service bus credentials.

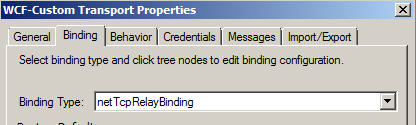
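The WCF-Custom adapter captures these settings through its Binding and Behavior tabs rather than a config file, but expressed as ordinary WCF configuration they amount to roughly the following. This is a sketch that assumes the .NET Services SDK of that period has registered the `netTcpRelayBinding` and `transportClientEndpointBehavior` configuration extensions on the BizTalk machine; the names `RelayTransportSecurity` and `ServiceBusCredentials` and the credential values are placeholders, and the exact element layout may differ between SDK releases.

```xml
<!-- Sketch only: configuration names and credential values are placeholders.
     Assumes the SDK's relay binding/behavior extensions are registered. -->
<system.serviceModel>
  <bindings>
    <netTcpRelayBinding>
      <!-- Binding tab: NetTcpRelayBinding with Transport security -->
      <binding name="RelayTransportSecurity">
        <security mode="Transport" />
      </binding>
    </netTcpRelayBinding>
  </bindings>
  <behaviors>
    <endpointBehaviors>
      <!-- Behavior tab: TransportClientEndpointBehavior with UserNamePassword credentials -->
      <behavior name="ServiceBusCredentials">
        <transportClientEndpointBehavior credentialType="UserNamePassword">
          <clientCredentials>
            <!-- Placeholders: substitute your actual service bus credentials -->
            <userNamePassword userName="YOUR-USER-NAME" password="YOUR-PASSWORD" />
          </clientCredentials>
        </transportClientEndpointBehavior>
      </behavior>
    </endpointBehaviors>
  </behaviors>
</system.serviceModel>
```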

After creating a send port that subscribes to messages from this receive port and writes them to disk, we’re done with BizTalk. For good measure, you should start the receive location and monitor the event log to confirm that a connection was successfully established.

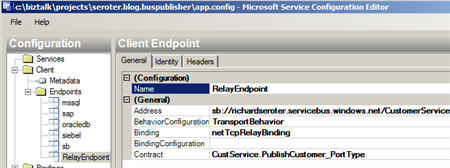
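One way to set up that subscription is a send port filter on `BTS.ReceivePortName`. In an exported BizTalk binding file, a send port’s filter is stored as an escaped XML document; decoded, it looks roughly like the sketch below. The receive port name `ServiceBusReceivePort` is hypothetical.

```xml
<!-- Decoded send port filter (sketch). The receive port name is hypothetical. -->
<Filter xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <Group>
    <!-- Operator="0" is Equals: pick up every message published
         by the service bus receive port -->
    <Statement Property="BTS.ReceivePortName" Operator="0" Value="ServiceBusReceivePort" />
  </Group>
</Filter>
```

In the BizTalk Administration Console, the same subscription is simply the filter expression `BTS.ReceivePortName == ServiceBusReceivePort`.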

Now let’s turn our attention to the service client. I added a service reference to our hand-crafted WSDL and got the proxy classes and serializable types I was after. Adding the reference didn’t put much into my application configuration file, so I added a new service bus endpoint whose address matches the cloud address I set in the BizTalk receive location.

You can see that I’ve also chosen a matching binding and was able to browse the contract by interrogating the client executable. In order to handle security to the cloud, I added the same TransportClientEndpointBehavior to this configuration file and associated it with my service.

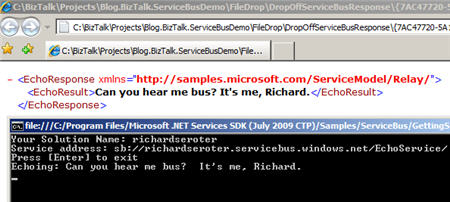

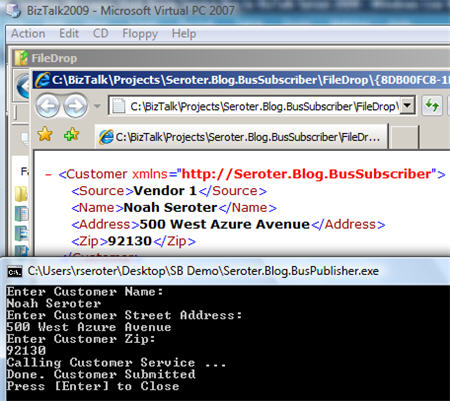
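A sketch of what the resulting client configuration could look like follows, assuming the same SDK-registered relay binding and behavior extensions as on the BizTalk side. The endpoint address must be identical to the cloud address configured on the receive location, so the `sb://` URI below is only a hypothetical placeholder. The contract name `ServiceReference1.PublishCustomer_PortType` assumes Visual Studio’s default service reference namespace combined with the port type name from the WSDL; the binding, behavior, and endpoint names are also placeholders.

```xml
<!-- Client app.config sketch: address, names, and credentials are placeholders. -->
<system.serviceModel>
  <bindings>
    <netTcpRelayBinding>
      <!-- Must match the receive location: Transport security -->
      <binding name="RelayTransportSecurity">
        <security mode="Transport" />
      </binding>
    </netTcpRelayBinding>
  </bindings>
  <behaviors>
    <endpointBehaviors>
      <!-- Same TransportClientEndpointBehavior as the receive location -->
      <behavior name="ServiceBusCredentials">
        <transportClientEndpointBehavior credentialType="UserNamePassword">
          <clientCredentials>
            <userNamePassword userName="YOUR-USER-NAME" password="YOUR-PASSWORD" />
          </clientCredentials>
        </transportClientEndpointBehavior>
      </behavior>
    </endpointBehaviors>
  </behaviors>
  <client>
    <!-- Hypothetical address: use the exact cloud address from the receive location -->
    <endpoint name="RelayEndpoint"
              address="sb://servicebus.windows.net/services/YOUR-SOLUTION/CustomerService/"
              binding="netTcpRelayBinding"
              bindingConfiguration="RelayTransportSecurity"
              behaviorConfiguration="ServiceBusCredentials"
              contract="ServiceReference1.PublishCustomer_PortType" />
  </client>
</system.serviceModel>
```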

All that’s left is to test it. To better simulate the cloud experience, I went ahead and copied the service client to my desktop computer and left BizTalk Server running in its own virtual machine. If everything works, my service client should connect to the cloud and transmit a message, and the .NET Service Bus will relay that message, securely, to the BizTalk Server running in my virtual machine. Sure enough, the message sent from my console app has landed in the file folder that the BizTalk send port writes to.

And opening the message shows the same values entered in the service client’s console application.

Sweet. I honestly thought connecting BizTalk bi-directionally to Azure services was going to be more difficult. But the WCF adapters in BizTalk are pretty darn extensible and easily consume these new bindings. More importantly, we are beginning to see a new set of patterns emerge for integrating on-premises applications through the cloud. BizTalk may play a key role in receiving from, sending to, and orchestrating cloud services in this new paradigm.
